Python 3.7 documentation

I would also like to highlight the following quote from the Python threading documentation:

CPython implementation detail: In CPython, due to the Global Interpreter Lock, only one thread can execute Python code at once (even though certain performance-oriented libraries might overcome this limitation). If you want your application to make better use of the computational resources of multi-core machines, you are advised to use multiprocessing or concurrent.futures.ProcessPoolExecutor. However, threading is still an appropriate model if you want to run multiple I/O-bound tasks simultaneously.

The quote links to the glossary entry for global interpreter lock, which explains why the GIL makes threaded parallelism in CPython unsuitable for CPU-bound tasks:

The mechanism used by the CPython interpreter to assure that only one thread executes Python bytecode at a time. This simplifies the CPython implementation by making the object model (including critical built-in types such as dict) implicitly safe against concurrent access. Locking the entire interpreter makes it easier for the interpreter to be multi-threaded, at the expense of much of the parallelism afforded by multi-processor machines.

However, some extension modules, either standard or third-party, are designed so as to release the GIL when doing computationally-intensive tasks such as compression or hashing. Also, the GIL is always released when doing I/O.

Past efforts to create a “free-threaded” interpreter (one which locks shared data at a much finer granularity) have not been successful because performance suffered in the common single-processor case. It is believed that overcoming this performance issue would make the implementation much more complicated and therefore costlier to maintain.
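The glossary's remark about extension modules releasing the GIL can be sketched with hashlib, whose documentation states that the GIL is released while hashing large inputs. The helper names `sha256_hex` and `hash_all_threaded` and the 1 MiB buffers are assumptions made up for this illustration. Whether the threaded version actually runs faster depends on the build and the machine, so no timings are claimed here:

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 hex digest of data."""
    return hashlib.sha256(data).hexdigest()


def hash_all_threaded(buffers, max_workers=4):
    """Hash every buffer in a thread pool, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(sha256_hex, buffers))


# Hypothetical workload: four 1 MiB buffers with different contents.
buffers = [bytes([i]) * (1 << 20) for i in range(4)]
threaded = hash_all_threaded(buffers)
sequential = [sha256_hex(b) for b in buffers]
```

The threaded and sequential runs produce the same digests; only the scheduling differs.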

This quote also implies that dict operations, and thus variable assignment, are thread safe as a CPython implementation detail.
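As a rough illustration of that claim, the sketch below lets several threads write to one shared dict without a lock. The names `shared`, `fill`, `counter` and `increment` are hypothetical. Note that the guarantee only covers a single operation such as one item assignment; a read-modify-write like `+=` spans several steps, so the second half of the sketch still uses a `threading.Lock`:

```python
import threading

shared = {}  # one dict written to by every thread


def fill(worker_id, count):
    """Store `count` entries under keys unique to this worker."""
    for i in range(count):
        shared[(worker_id, i)] = i  # a single item assignment, no lock


threads = [threading.Thread(target=fill, args=(w, 1000)) for w in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# A read-modify-write is several operations, so it is guarded by a lock.
counter = {"value": 0}
lock = threading.Lock()


def increment(times):
    """Add 1 to the shared counter `times` times."""
    for _ in range(times):
        with lock:
            counter["value"] += 1


incrementers = [threading.Thread(target=increment, args=(10_000,)) for _ in range(4)]
for t in incrementers:
    t.start()
for t in incrementers:
    t.join()
```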

Next, the docs for the multiprocessing package explain how it overcomes the GIL by spawning processes while exposing an interface similar to that of threading:

multiprocessing is a package that supports spawning processes using an API similar to the threading module. The multiprocessing package offers both local and remote concurrency, effectively side-stepping the Global Interpreter Lock by using subprocesses instead of threads. Due to this, the multiprocessing module allows the programmer to fully leverage multiple processors on a given machine. It runs on both Unix and Windows.
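To make the "API similar to the threading module" part concrete, here is a minimal sketch in which the same helper drives either `threading.Thread` or `multiprocessing.Process`. The functions `square` and `run` are made up for this example; the only thing that changes between the two calls is the worker class and the matching queue class:

```python
import multiprocessing
import queue
import threading


def square(n, out):
    """Put n squared on `out`; works with either kind of queue."""
    out.put(n * n)


def run(worker_cls, queue_cls, values):
    """Start one worker per value and return the collected results, sorted."""
    out = queue_cls()
    workers = [worker_cls(target=square, args=(v, out)) for v in values]
    for w in workers:
        w.start()
    results = [out.get() for _ in workers]  # drain the queue before joining
    for w in workers:
        w.join()
    return sorted(results)


if __name__ == "__main__":
    # The guard matters for processes: some start methods re-import this module.
    print(run(threading.Thread, queue.Queue, [1, 2, 3]))
    print(run(multiprocessing.Process, multiprocessing.Queue, [1, 2, 3]))
```

Results are sorted because workers may finish in any order.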

And the docs for concurrent.futures.ProcessPoolExecutor explain that it uses multiprocessing as a backend:

The ProcessPoolExecutor class is an Executor subclass that uses a pool of processes to execute calls asynchronously. ProcessPoolExecutor uses the multiprocessing module, which allows it to side-step the Global Interpreter Lock but also means that only picklable objects can be executed and returned.

This should be contrasted with the other Executor subclass, ThreadPoolExecutor, which uses threads instead of processes:

ThreadPoolExecutor is an Executor subclass that uses a pool of threads to execute calls asynchronously.

From this we conclude that, in CPython, ThreadPoolExecutor is suitable mainly for I/O-bound tasks, while ProcessPoolExecutor can also handle CPU-bound tasks.
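A small sketch of that contrast, assuming a toy CPU-bound task: `count_primes` and `run_pool` are hypothetical helpers, and the workload size is arbitrary. Both executors share the same interface, so swapping one for the other is a one-word change. `count_primes` is defined at module level so that it can be pickled and sent to worker processes:

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit):
    """CPU-bound toy task: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_pool(executor_cls, limits, max_workers=4):
    """Map count_primes over `limits` using the given Executor subclass."""
    with executor_cls(max_workers=max_workers) as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    limits = [50_000] * 4  # arbitrary workload for illustration
    for cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        start = time.perf_counter()
        run_pool(cls, limits)
        print(cls.__name__, round(time.perf_counter() - start, 3), "s")
```

On a multi-core machine running standard CPython, one would expect the process pool to finish this kind of workload sooner, but the exact numbers depend on the hardware and on process start-up cost.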

Process vs thread experiments

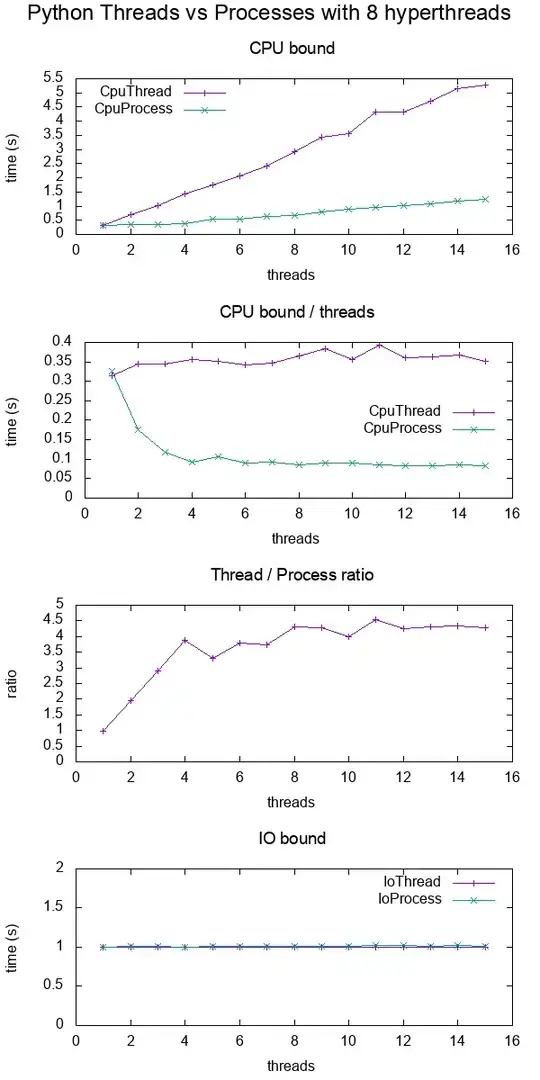

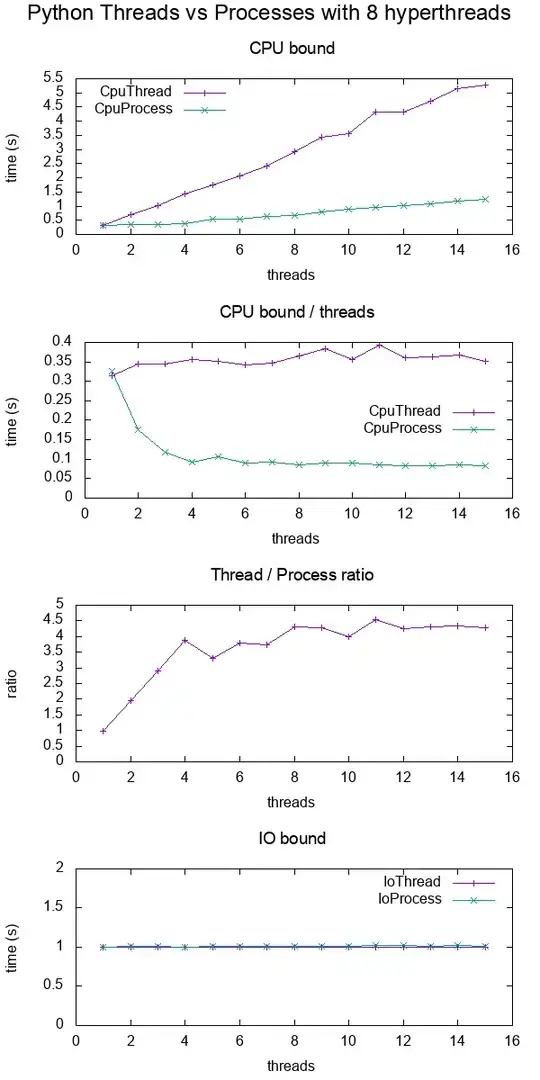

At Multiprocessing vs Threading Python I've done an experimental analysis of process vs threads in Python.

Quick preview of the results:

In other languages

The concept exists outside of Python as well; it applies to Ruby, for example: https://en.wikipedia.org/wiki/Global_interpreter_lock

It mentions the advantages:

- increased speed of single-threaded programs (no necessity to acquire or release locks on all data structures separately),

- easy integration of C libraries that usually are not thread-safe,

- ease of implementation (having a single GIL is much simpler to implement than a lock-free interpreter or one using fine-grained locks).

but the JVM seems to do just fine without the GIL, so I wonder if it is worth it. The following question asks why the GIL exists in the first place: Why the Global Interpreter Lock?