I'm using this library to implement a learning agent.

I have generated the training cases, but I don't know for sure what the validation and test sets are.

The teacher says:

70% should be train cases, 10% will be test cases and the rest 20% should be validation cases.

edit

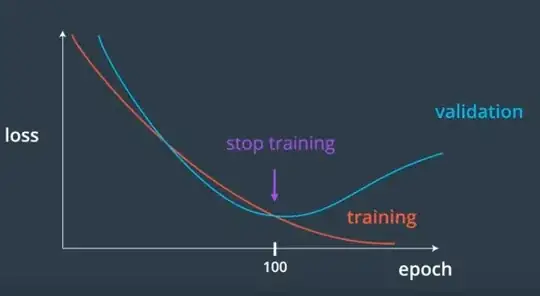

I have this code for training, but I have no idea when to stop training.

def train(self, train, validation, N=0.3, M=0.1):

# N: learning rate

# M: momentum factor

accuracy = list()

while(True):

error = 0.0

for p in train:

input, target = p

self.update(input)

error = error + self.backPropagate(target, N, M)

print "validation"

total = 0

for p in validation:

input, target = p

output = self.update(input)

total += sum([abs(target - output) for target, output in zip(target, output)]) #calculates sum of absolute diference between target and output

accuracy.append(total)

print min(accuracy)

print sum(accuracy[-5:])/5

#if i % 100 == 0:

print 'error %-14f' % error

if ? < ?:

break

edit

I can get an average error of 0.2 with validation data, after maybe 20 training iterations, that should be 80%?

average error = sum of absolute difference between validation target and output, given the validation data input/size of validation data.

1

avg error 0.520395

validation

0.246937882684

2

avg error 0.272367

validation

0.228832420879

3

avg error 0.249578

validation

0.216253590304

...

22

avg error 0.227753

validation

0.200239244714

23

avg error 0.227905

validation

0.199875013416