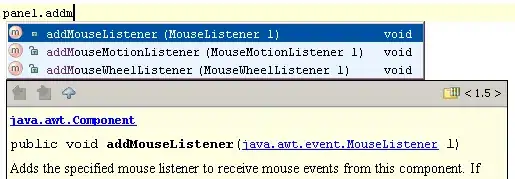

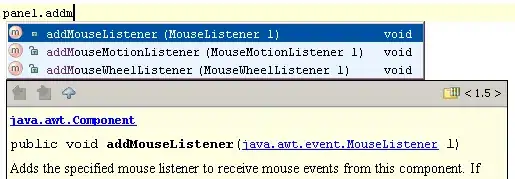

Calculating the mean of angles is generally a bad idea:

    ...
            sum += Math.Atan2(yi, xi);
        }
    }
    double avg = sum / (img.Width * img.Height);

The mean of a set of angles has no clear meaning. For example, the mean of one angle pointing up and one angle pointing down is an angle pointing right. Is that what you want? It gets worse at the wrap-around point: assuming "up" is +PI, two angles that both point almost up, one at PI-[some small value] and the other at -PI+[some small value], average to roughly 0, which points down. That's probably not what you want. Also, you're completely ignoring the strength of the edge. Most of the pixels in your real-life images aren't edges at all, so the gradient direction there is mostly noise.
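To make the wrap-around problem concrete, here is a minimal Python sketch (not the code from the question; `naive_mean` is a hypothetical helper name). It averages raw angle values the same way the loop above does:

```python
import math

def naive_mean(angles):
    """Arithmetic mean of the raw angle values (the approach that breaks)."""
    return sum(angles) / len(angles)

eps = 0.01
# Two directions that differ by only 2*eps, on either side of the +/-PI wrap-around.
a, b = math.pi - eps, -math.pi + eps

print(naive_mean([a, b]))                       # close to 0: the opposite direction
print(naive_mean([math.pi / 2, -math.pi / 2]))  # 0.0: the two opposite angles average to 0
```

Both inputs in the first call point in nearly the same direction, yet the result points the other way.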

If you want to calculate something like an "average direction", you need to add up vectors instead of angles, then calculate Atan2 once, after the loop. The problem is that this vector sum tells you nothing about objects inside the image, because gradients pointing in opposite directions (for example, the two sides of a bright object) cancel each other out. It only tells you something about the difference in brightness between the first/last row and first/last column of the image. That's probably not what you want.
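A rough Python sketch of both points, under the assumption that gradients are simple finite differences (`mean_direction` is a hypothetical helper, not part of the original code):

```python
import math

def mean_direction(angles, weights=None):
    """Sum (optionally weighted) unit vectors, then take atan2 once at the end."""
    if weights is None:
        weights = [1.0] * len(angles)
    sx = sum(w * math.cos(a) for a, w in zip(angles, weights))
    sy = sum(w * math.sin(a) for a, w in zip(angles, weights))
    return math.atan2(sy, sx), math.hypot(sx, sy)

eps = 0.01
# The wrap-around case is handled: the result is +/-PI, the direction of both inputs.
angle, length = mean_direction([math.pi - eps, -math.pi + eps])

# But opposite gradients cancel. A bright bar on a dark background has a rising
# and a falling edge; the horizontal differences sum to row[-1] - row[0].
row = [0, 0, 9, 9, 9, 0, 0]
diffs = [row[i + 1] - row[i] for i in range(len(row) - 1)]
print(sum(diffs), row[-1] - row[0])  # both are 0
```

The bar is clearly there, but the summed gradient only reflects the border pixels.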

I think the simplest way to orient images is to create an angle histogram: Create an array with (e.g.) 360 bins for 360° of gradient directions. Then calculate the gradient angle and magnitude for each pixel. Add each gradient magnitude to the right angle-bin. This won't give you a single angle, but an angle-histogram, which can then be used to orient two images to each other using simple cyclic correlation.
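As a plain-Python illustration of that description (a sketch only: the list-of-rows image format, the central-difference gradient and the name `angle_histogram` are assumptions made for this example):

```python
import math

def angle_histogram(img, bins=360):
    """Magnitude-weighted histogram of gradient directions.

    img is a list of rows of grayscale values (assumed input format).
    """
    hist = [0.0] * bins
    h, w = len(img), len(img[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2.0  # central differences
            gy = (img[y + 1][x] - img[y - 1][x]) / 2.0
            mag = math.hypot(gx, gy)
            if mag == 0.0:
                continue  # flat pixel: no direction to vote for
            deg = math.degrees(math.atan2(gy, gx)) % 360.0
            hist[int(deg * bins / 360.0) % bins] += mag  # add magnitude to the angle bin
    return hist

# Tiny synthetic image: dark on the left, bright on the right (a vertical edge).
img = [[0, 0, 10, 10]] * 4
hist = angle_histogram(img)
peak = max(range(len(hist)), key=hist.__getitem__)
print(peak)  # 0: the gradient points along +x
```

Because each pixel votes with its gradient magnitude, flat regions contribute almost nothing and strong edges dominate the histogram.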

Here's a proof-of-concept Mathematica implementation I've thrown together to see if this would work:

    angleHistogram[src_] :=
     (
      (* Gaussian derivative filters (radius 2) along the horizontal and vertical axes *)
      Lx = GaussianFilter[ImageData[src], 2, {0, 1}];
      Ly = GaussianFilter[ImageData[src], 2, {1, 0}];
      (* per pixel: {gradient angle rounded to whole degrees, gradient magnitude} *)
      angleAndOrientation =
       MapThread[{Round[ArcTan[#1, #2]*180/\[Pi]],
          Sqrt[#1^2 + #2^2]} &, {Lx, Ly}, 2];
      angleAndOrientationFlat = Flatten[angleAndOrientation, 1];
      (* bin by angle (width 1) and magnitude (width 5), then sum the magnitudes per angle bin *)
      bins = BinLists[angleAndOrientationFlat, 1, 5];
      histogram =
       Total /@ Flatten[bins[[All, All, All, 2]], {{1}, {2, 3}}];
      maxIndex = Position[histogram, Max[histogram]][[1, 1]];
      Labeled[
       Show[
        ListLinePlot[histogram, PlotRange -> All],
        Graphics[{Red, Point[{maxIndex, histogram[[maxIndex]]}]}]
        ], "Maximum at " <> ToString[maxIndex] <> "\[Degree]"]
      )

Results with sample images:

The angle histograms also show why the mean angle can't work: the histogram is essentially a single sharp peak, and the other angles are roughly uniform. The mean of this histogram will always be dominated by the uniform "background noise". That's why you got almost the same angle (about 180°) for each of the real-life images with your current algorithm.

The tree image has a single dominant angle (the horizon), so in this case, you could use the mode of the histogram (the most frequent angle). But that will not work for every image:

Here you have two peaks. Cyclic correlation should still orient two images to each other, but simply using the mode is probably not enough.

Also note that the peak in the angle histogram is not necessarily "up". In the tree image above, the peak in the angle histogram is probably the horizon, so it's pointing up. In the Lena image, it's the vertical white bar in the background, so it's pointing to the right. Simply rotating each image by its most frequent angle will not turn every image right side up.

This image has even more peaks: Using the mode (or, probably, any single angle) would be unreliable to orient this image. But the angle histogram as a whole should still give you a reliable orientation.

Note: I didn't pre-process the images, I didn't try gradient operators at different scales, I didn't post-process the resulting histogram. In a real-world application, you would tweak all these things to get the best possible algorithm for a large set of test images. This is just a quick test to see if the idea could work at all.

Add: To orient two images using this histogram, you would

- Normalize all histograms, so the area under the histogram is the same for each image (even if some are brighter, darker or blurrier)

- Take the histograms of the images, and compare them for each rotation you're interested in:

For example, in C#:

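    double bestDifferenceSoFar = double.MaxValue;
    int foundRotation = 0;
    for (int rotationAngle = 0; rotationAngle < 360; rotationAngle++)
    {
        // sum of absolute differences between histogram1 and histogram2 shifted by rotationAngle bins
        double difference = 0;
        for (int i = 0; i < 360; i++)
            difference += Math.Abs(histogram1[i] - histogram2[(i + rotationAngle) % 360]);
        if (difference < bestDifferenceSoFar)
        {
            bestDifferenceSoFar = difference;
            foundRotation = rotationAngle;
        }
    }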

(You could speed this up with an FFT-based cyclic correlation if your histogram length is a power of two, but the code would be a lot more complex, and for 256 bins it might not matter that much.)
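Both steps together (normalize, then compare at every cyclic shift) might look like this in Python. This is only a sketch: `normalize` and `best_rotation` are hypothetical names, and the sum of absolute differences is used as the comparison measure.

```python
def normalize(hist):
    """Scale a histogram so its bins sum to 1 (assumes at least one non-zero bin)."""
    total = float(sum(hist))
    return [v / total for v in hist]

def best_rotation(hist1, hist2):
    """Return the cyclic shift r minimising sum |h1[i] - h2[(i + r) % n]|."""
    n = len(hist1)
    h1, h2 = normalize(hist1), normalize(hist2)

    def cost(r):
        return sum(abs(h1[i] - h2[(i + r) % n]) for i in range(n))

    return min(range(n), key=cost)

# Synthetic check: hist2 is hist1 shifted by 40 bins and scaled by 3
# (as if the second image were rotated and brighter).
hist1 = [0.0] * 360
hist1[10], hist1[100] = 5.0, 2.0
hist2 = [0.0] * 360
for i, v in enumerate(hist1):
    hist2[(i + 40) % 360] = 3.0 * v

print(best_rotation(hist1, hist2))  # 40
```

The scaling by 3 has no effect on the result because both histograms are normalized before they are compared.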