ncdu (ncurses du)

This awesome CLI utility lets you interactively find the largest files and directories, with directory sizes totaled recursively.

For example, from inside the root of a well-known open source project we do:

sudo apt install ncdu

ncdu

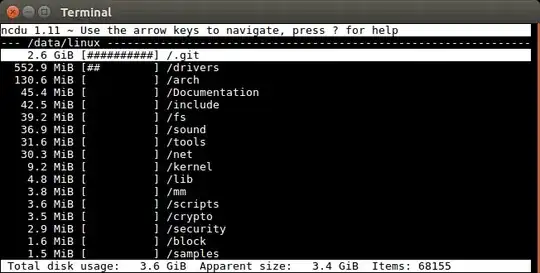

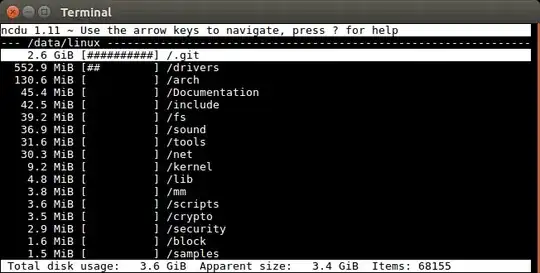

The outcome is:

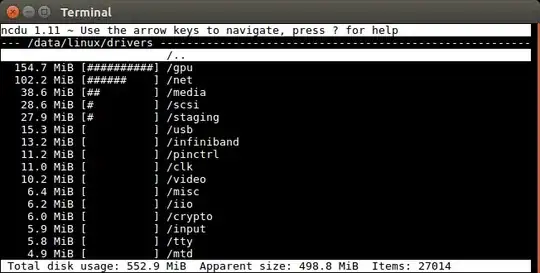

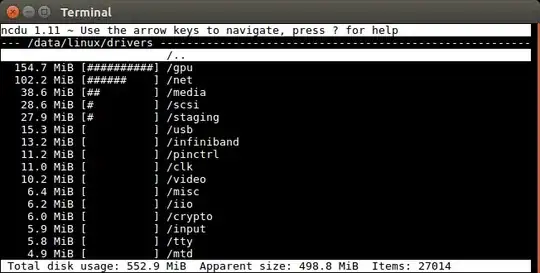

Then I press the down and right arrow keys to go into the /drivers folder, and I see:

ncdu calculates file sizes recursively only once, at startup, for the entire tree, so it is efficient. This way you don't have to recalculate sizes as you move into subdirectories while trying to determine what the disk hog is.
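To illustrate the "scan once, browse many times" idea, here is a minimal Python sketch. It is not ncdu's actual implementation, and the function name `scan_totals` is made up for this example. It walks the tree a single time, bottom-up, and caches a recursive total for every directory, so later lookups are just dictionary reads:

```python
import os

def scan_totals(root):
    """Walk `root` once, bottom-up, and return {directory: total apparent bytes}."""
    totals = {}
    # topdown=False yields every subdirectory before its parent,
    # so child totals are already cached when the parent is processed.
    for dirpath, dirnames, filenames in os.walk(root, topdown=False):
        total = 0
        for name in filenames:
            try:
                total += os.lstat(os.path.join(dirpath, name)).st_size
            except OSError:
                pass  # file vanished or is unreadable: skip it
        for name in dirnames:
            # Symlinked directories are not walked, so they count as 0 here.
            total += totals.get(os.path.join(dirpath, name), 0)
        totals[dirpath] = total
    return totals
```

After the single call to `scan_totals("/some/dir")`, "entering" a subdirectory only needs `totals[path]`, with no further disk access.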

"Total disk usage" vs "Apparent size" is analogous to du, and I have explained it at: why is the output of `du` often so different from `du -b`
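As a rough illustration of the two numbers, the following Python sketch reads both from a single `lstat` call. The helper name `apparent_and_disk_size` is my own, and I am assuming a POSIX system where `st_blocks` is counted in 512-byte units (it is not available on Windows):

```python
import os

def apparent_and_disk_size(path):
    """Return (apparent size, disk usage) in bytes for one file."""
    st = os.lstat(path)
    apparent = st.st_size          # bytes of content, like `du -b`
    disk = st.st_blocks * 512      # allocated space; assumes 512-byte st_blocks units
    return apparent, disk
```

A small file typically shows a tiny apparent size but a disk usage rounded up to whole filesystem blocks, while a sparse file can show the opposite.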

Project homepage: https://dev.yorhel.nl/ncdu

Tested in Ubuntu 16.04.

Ubuntu list root

You likely want:

ncdu --exclude-kernfs -x /

where:

-x stops crossing of filesystem barriers

--exclude-kernfs skips special filesystems like /sys
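To give an idea of what "not crossing filesystem barriers" means, here is a small Python sketch of the general technique: compare each directory's device ID (`st_dev`) with that of the starting directory and prune anything that differs. This only illustrates the concept behind -x, it is not how ncdu is implemented, and `walk_one_filesystem` is a name invented for this example:

```python
import os

def walk_one_filesystem(root):
    """Yield directories under `root` that live on the same device as `root`."""
    root_dev = os.lstat(root).st_dev
    for dirpath, dirnames, _filenames in os.walk(root):
        kept = []
        for name in dirnames:
            try:
                if os.lstat(os.path.join(dirpath, name)).st_dev == root_dev:
                    kept.append(name)
            except OSError:
                pass  # directory vanished or is unreadable: skip it
        # Editing dirnames in place tells os.walk not to descend into the rest.
        dirnames[:] = kept
        yield dirpath
```

Mount points such as /proc or a separately mounted /home report a different `st_dev` than /, so they are dropped before the walk descends into them.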

macOS 10.15.5 list root

To properly list root / on that system, I also needed --exclude-firmlinks, e.g.:

brew install ncdu

cd /

ncdu --exclude-firmlinks

otherwise it seemed to get stuck in an infinite loop while following links, likely due to: https://www.swiftforensics.com/2019/10/macos-1015-volumes-firmlink-magic.html

The things we learn for love.

ncdu non-interactive usage

Another cool feature of ncdu is that you can first dump the sizes in JSON format, and reuse them later.

For example, to generate the file run:

ncdu -o ncdu.json

and then examine it interactively with:

ncdu -f ncdu.json

This is very useful if you are dealing with a very large and slow filesystem like NFS.

This way, you export only once, which can take hours, and then explore the files, quit, explore again, etc.

The output format is just JSON, so it is easy to reuse it with other programs as well, e.g.:

ncdu -o - | python -m json.tool | less

reveals a simple directory tree data structure:

    [
        1,
        0,
        {
            "progname": "ncdu",
            "progver": "1.12",
            "timestamp": 1562151680
        },
        [
            {
                "asize": 4096,
                "dev": 2065,
                "dsize": 4096,
                "ino": 9838037,
                "name": "/work/linux-kernel-module-cheat/submodules/linux"
            },
            {
                "asize": 1513,
                "dsize": 4096,
                "ino": 9856660,
                "name": "Kbuild"
            },
            [
                {
                    "asize": 4096,
                    "dsize": 4096,
                    "ino": 10101519,
                    "name": "net"
                },
                [
                    {
                        "asize": 4096,
                        "dsize": 4096,
                        "ino": 11417591,
                        "name": "l2tp"
                    },
                    {
                        "asize": 48173,
                        "dsize": 49152,
                        "ino": 11418744,
                        "name": "l2tp_core.c"
                    },

Tested in Ubuntu 18.04.
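As an example of reusing the dump from another program, here is a minimal Python sketch that is not part of ncdu. It assumes the layout seen in the excerpt above: the fourth top-level element is the root directory, a directory is a list whose first element describes the directory itself, and a file is a plain object. I also assume that a missing "asize" or "dsize" means zero. The function names are made up, and hard links are not deduplicated, so totals may differ slightly from what ncdu shows:

```python
import json

def load_export(path):
    """Load a file produced by `ncdu -o`."""
    with open(path) as f:
        return json.load(f)

def dir_total(node, key="dsize"):
    """Sum `key` over a node: a file is a dict, a directory is a list."""
    if isinstance(node, dict):
        return node.get(key, 0)  # assumption: a missing size means 0
    # The first list element is the directory's own entry, the rest are children.
    return sum(dir_total(child, key) for child in node)

def largest_children(export, key="dsize"):
    """Return (name, total) for each entry of the root directory, largest first."""
    root = export[3]
    rows = []
    for child in root[1:]:
        info = child[0] if isinstance(child, list) else child
        rows.append((info["name"], dir_total(child, key)))
    return sorted(rows, key=lambda row: row[1], reverse=True)
```

With the file generated earlier, `largest_children(load_export("ncdu.json"))` gives a quick non-interactive ranking of the top-level entries, and passing `key="asize"` switches from disk usage to apparent size.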