In general the error:

Error: 2006 (CR_SERVER_GONE_ERROR) - MySQL server has gone away

means that the client couldn't send a question to the server.

mysql import

In your specific case while importing the database file via mysql, this most likely mean that some of the queries in the SQL file are too large to import and they couldn't be executed on the server, therefore client fails on the first occurred error.

So you've the following possibilities:

Add force option (-f) for mysql to proceed and execute rest of the queries.

This is useful if the database has some large queries related to cache which aren't relevant anyway.

Increase max_allowed_packet and wait_timeout in your server config (e.g. ~/.my.cnf).

Dump the database using --skip-extended-insert option to break down the large queries. Then import it again.

Try applying --max-allowed-packet option for mysql.

Common reasons

In general this error could mean several things, such as:

a query to the server is incorrect or too large,

Solution: Increase max_allowed_packet variable.

Make sure the variable is under [mysqld] section, not [mysql].

Don't afraid to use large numbers for testing (like 1G).

Don't forget to restart the MySQL/MariaDB server.

Double check the value was set properly by:

mysql -sve "SELECT @@max_allowed_packet" # or:

mysql -sve "SHOW VARIABLES LIKE 'max_allowed_packet'"

You got a timeout from the TCP/IP connection on the client side.

Solution: Increase wait_timeout variable.

You tried to run a query after the connection to the server has been closed.

Solution: A logic error in the application should be corrected.

Host name lookups failed (e.g. DNS server issue), or server has been started with --skip-networking option.

Another possibility is that your firewall blocks the MySQL port (e.g. 3306 by default).

The running thread has been killed, so retry again.

You have encountered a bug where the server died while executing the query.

A client running on a different host does not have the necessary privileges to connect.

And many more, so learn more at: B.5.2.9 MySQL server has gone away.

Debugging

Here are few expert-level debug ideas:

Check the logs, e.g.

sudo tail -f $(mysql -Nse "SELECT @@GLOBAL.log_error")

Test your connection via mysql, telnet or ping functions (e.g. mysql_ping in PHP).

Use tcpdump to sniff the MySQL communication (won't work for socket connection), e.g.:

sudo tcpdump -i lo0 -s 1500 -nl -w- port mysql | strings

On Linux, use strace. On BSD/Mac use dtrace/dtruss, e.g.

sudo dtruss -a -fn mysqld 2>&1

See: Getting started with DTracing MySQL

Learn more how to debug MySQL server or client at: 26.5 Debugging and Porting MySQL.

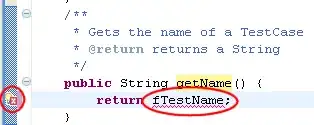

For reference, check the source code in sql-common/client.c file responsible for throwing the CR_SERVER_GONE_ERROR error for the client command.

MYSQL_TRACE(SEND_COMMAND, mysql, (command, header_length, arg_length, header, arg));

if (net_write_command(net,(uchar) command, header, header_length,

arg, arg_length))

{

set_mysql_error(mysql, CR_SERVER_GONE_ERROR, unknown_sqlstate);

goto end;

}