The answer is somewhat architecture dependent. On an x86, there is no additional overhead associated with volatile reads specifically, though there are implications for other optimizations.

JMM cookbook from Doug Lea, see architecture table near the bottom.

To clarify: There is not any additional overhead associated with the read itself. Memory barriers are used to ensure proper ordering. JSR-133 classifies four barriers "LoadLoad, LoadStore, StoreLoad, and StoreStore". Depending on the architecture, some of these barriers correspond to a "no-op", meaning no action is taken, others require a fence. There is no implicit cost associated with the Load itself, though one may be incurred if a fence is in place. In the case of the x86, only a StoreLoad barrier results in a fence.

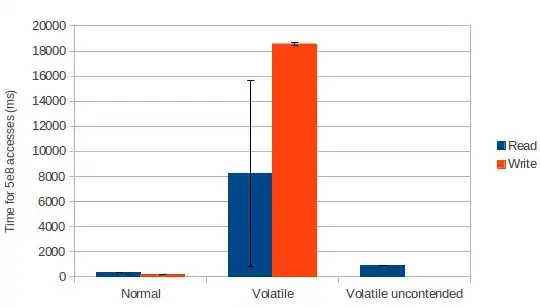

As pointed out in a blog post, the fact that the variable is volatile means there are assumptions about the nature of the variable that can no longer be made and some compiler optimizations would not be applied to a volatile.

Volatile is not something that should be used glibly, but it should also not be feared. There are plenty of cases where a volatile will suffice in place of more heavy handed locking.