I have almost 3,000 CSV files (containing tweets) with the same format. I want to merge these files into one new file and remove the duplicate tweets. I have come across various topics discussing similar questions; however, the number of files is usually quite small. I hope you can help me write R code that does this job both efficiently and effectively.

The CSV files have the following format:

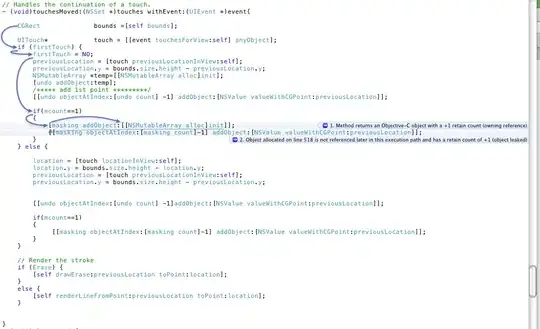

Image of CSV format:

I replaced the Twitter usernames (in columns 2 and 3) with A-E and the 'actual names' with A1-E1.

Raw text file:

"tweet";"author";"local.time"

"1";"2012-06-05 00:01:45 @A (A1): Cruijff z'n met-zwart-shirt-zijn-ze-onzichtbaar logica is even mooi ontkracht in #bureausport.";"A (A1)";"2012-06-05 00:01:45"

"2";"2012-06-05 00:01:41 @B (B1): Welterusten #BureauSport";"B (B1)";"2012-06-05 00:01:41"

"3";"2012-06-05 00:01:38 @C (C1): Echt ..... eindelijk een origineel sportprogramma #bureausport";"C (C1)";"2012-06-05 00:01:38"

"4";"2012-06-05 00:01:38 @D (D1): LOL. \"Na onderzoek op de Fontys Hogeschool durven wij te stellen dat..\" Want Fontys staat zo hoog aangeschreven? #bureausport";"D (D1)";"2012-06-05 00:01:38"

"5";"2012-06-05 00:00:27 @E (E1): Ik kijk Bureau sport op Nederland 3. #bureausport #kijkes";"E (E1)";"2012-06-05 00:00:27"

Somehow my headers are messed up; they obviously should move one column to the right. Each CSV file contains up to 1500 tweets. I would like to remove the duplicates by checking the 2nd column (containing the tweets), simply because these should be unique, whereas the author column can contain repeated values (e.g. one author posting multiple tweets).

Is it possible to combine merging the files and removing the duplicates, or is this asking for trouble and should the processes be separated? As a starting point I included two links to blog posts by Hayward Godwin that discuss three approaches for merging CSV files.

Obviously there are some topics related to my question on this site as well (e.g. Merging multiple csv files in R) but I haven't found anything that discusses both merging and removing the duplicates. I really hope you can help me and my limited R knowledge deal with this challenge!

Although I have tried some code I found on the web, it didn't actually result in an output file. The approximately 3,000 CSV files have the format discussed above. I mainly tried the following code (for the merge part):

filenames <- list.files(path = "~/")

do.call("rbind", lapply(filenames, read.csv, header = TRUE))

This results in the following error:

Error in file(file, "rt") : cannot open the connection

In addition: Warning message:

In file(file, "rt") : cannot open file '..': No such file or directory
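One possible contributor to this kind of error: `list.files()` returns bare file names by default, so `read.csv()` looks for them in the current working directory rather than in the listed directory, and without a `pattern` it also returns entries that are not CSV files. A minimal sketch of the difference, assuming a hypothetical temporary directory (`demo_dir`) with two toy files that stand in for the real data:

```r
# Hypothetical demo directory; the real files would live elsewhere.
demo_dir <- file.path(tempdir(), "tweets_demo")
dir.create(demo_dir, showWarnings = FALSE)

# Two toy files: one CSV and one unrelated file.
writeLines(c('"tweet";"author";"local.time"',
             '"1";"hello";"A (A1)";"2012-06-05 00:01:45"'),
           file.path(demo_dir, "a.csv"))
writeLines("not a csv", file.path(demo_dir, "notes.txt"))

# Default: bare names, every entry in the directory.
bare <- list.files(path = demo_dir)

# Restricted to CSV files and returned with their full paths,
# so read.csv() can open them regardless of the working directory.
full <- list.files(path = demo_dir, pattern = "\\.csv$", full.names = TRUE)

print(bare)
print(basename(full))
print(all(file.exists(full)))
```

With `full.names = TRUE` the returned paths can be passed straight to `lapply(..., read.csv)`; the alternative is to call `setwd()` on the directory first.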

Update

I have tried the following code:

# grab our list of filenames

filenames <- list.files(path = ".", pattern='^.*\\.csv$')

# write a special little read.csv function to do exactly what we want

my.read.csv <- function(fnam) { read.csv(fnam, header=FALSE, skip=1, sep=';', col.names=c('ID','tweet','author','local.time'), colClasses=rep('character', 4)) }

# read in all those files into one giant data.frame

my.df <- do.call("rbind", lapply(filenames, my.read.csv))

# remove the duplicate tweets

my.new.df <- my.df[!duplicated(my.df$tweet),]

But I run into the following errors:

After the 3rd line I get:

Error in read.table(file = file, header = header, sep = sep, quote = quote, : more columns than column names

After the 4th line I get:

Error: object 'my.df' not found

I suspect that these errors are caused by mistakes made when the CSV files were written, since there are some cases of the author/local.time being in the wrong column, either to the left or to the right of where they are supposed to be, which results in an extra column. I manually corrected 5 files and tested the code on them; I didn't get any errors. However, it seemed like nothing happened at all: I didn't get any output from R.
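To check the extra-column suspicion without opening files by hand, the number of fields per line can be counted with `count.fields()`. The sketch below is only an illustration on two made-up files in a hypothetical temporary directory (`demo_dir`); the threshold of 4 fields assumes the ID, tweet, author and local.time layout shown above.

```r
# Hypothetical demo directory with one well-formed and one malformed file.
demo_dir <- file.path(tempdir(), "fields_demo")
dir.create(demo_dir, showWarnings = FALSE)

writeLines(c('"tweet";"author";"local.time"',
             '"1";"first tweet";"A (A1)";"2012-06-05 00:01:45"'),
           file.path(demo_dir, "good.csv"))
# The data row below has an extra (empty) field, i.e. 5 fields instead of 4.
writeLines(c('"tweet";"author";"local.time"',
             '"1";"second tweet";"";"B (B1)";"2012-06-05 00:01:41"'),
           file.path(demo_dir, "bad.csv"))

files <- list.files(demo_dir, pattern = "\\.csv$", full.names = TRUE)

# Largest number of fields found on any line of each file.
# comment.char = "" keeps '#' (hashtags) from being treated as a comment.
max_fields <- sapply(files, function(f) {
  max(count.fields(f, sep = ";", quote = "\"", comment.char = ""),
      na.rm = TRUE)
})
names(max_fields) <- basename(files)

# Files with at least one line wider than the expected 4 fields.
suspect <- names(max_fields)[max_fields > 4]
print(suspect)
```

The names in `suspect` are the files worth inspecting or repairing before merging.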

To solve the extra column problem I adjusted the code slightly:

# grab our list of filenames

filenames <- list.files(path = ".", pattern='^.*\\.csv$')

# write a special little read.csv function to do exactly what we want

my.read.csv <- function(fnam) { read.csv(fnam, header=FALSE, skip=1, sep=';', col.names=c('ID','tweet','author','local.time','extra'), colClasses=rep('character', 5)) }

# read in all those files into one giant data.frame

my.df <- do.call("rbind", lapply(filenames, my.read.csv))

# remove the duplicate tweets

my.new.df <- my.df[!duplicated(my.df$tweet),]

I tried this code on all the files. Although R clearly started processing, I eventually got the following errors:

Error in read.table(file = file, header = header, sep = sep, quote = quote, : more columns than column names

In addition: Warning messages:

1: In read.table(file = file, header = header, sep = sep, quote = quote, : incomplete final line found by readTableHeader on 'Twitts - di mei 29 19_22_30 2012 .csv'

2: In read.table(file = file, header = header, sep = sep, quote = quote, : incomplete final line found by readTableHeader on 'Twitts - di mei 29 19_24_31 2012 .csv'

Error: object 'my.df' not found

What did I do wrong?
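For reference, `read.table()` raises "more columns than column names" when a line has more fields than `col.names` provides, so a fixed list of 4 or 5 names can still fail on wider rows. Assignments such as `my.new.df <- ...` also print nothing; a result only appears once it is printed or written to disk. The following is a rough sketch under those assumptions, not a definitive solution: it sizes `col.names` from the widest line found, merges, removes duplicates on the tweet column, and writes one output file. The directory, the toy files and the `extra…` column names are hypothetical.

```r
# Hypothetical demo directory with two toy files; f2 has a shifted row.
demo_dir <- file.path(tempdir(), "merge_demo")
dir.create(demo_dir, showWarnings = FALSE)
writeLines(c('"tweet";"author";"local.time"',
             '"1";"tweet one";"A (A1)";"2012-06-05 00:01:45"',
             '"2";"tweet two";"B (B1)";"2012-06-05 00:01:41"'),
           file.path(demo_dir, "f1.csv"))
writeLines(c('"tweet";"author";"local.time"',
             '"1";"tweet two";"B (B1)";"2012-06-05 00:01:41"',
             '"2";"tweet three";"";"C (C1)";"2012-06-05 00:01:38"'),
           file.path(demo_dir, "f2.csv"))

files <- list.files(demo_dir, pattern = "\\.csv$", full.names = TRUE)

# Widest line across all files (at least the 4 expected columns).
n_cols <- max(4, unlist(lapply(files, count.fields, sep = ";",
                               quote = "\"", comment.char = "")),
              na.rm = TRUE)

# Enough column names for the widest line; the first four are the real ones.
col_names <- paste0("extra", seq_len(n_cols))
col_names[1:4] <- c("ID", "tweet", "author", "local.time")

# Skip the (misaligned) header line and read everything as character.
read_one <- function(f) {
  read.csv(f, header = FALSE, skip = 1, sep = ";", quote = "\"",
           col.names = col_names, colClasses = "character", fill = TRUE)
}

merged  <- do.call(rbind, lapply(files, read_one))
deduped <- merged[!duplicated(merged$tweet), ]

# Write the result explicitly; nothing is saved otherwise.
out_file <- tempfile(fileext = ".csv")
write.table(deduped, out_file, sep = ";", row.names = FALSE,
            qmethod = "double")
print(nrow(deduped))
```

Rows that needed the extra columns are still misaligned after reading (the author ends up under `local.time`, for example), so they would need a separate repair step; this sketch only gets all files into one data frame and onto disk.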