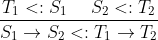

Suppose you have this Excel spreadsheet:

Note:

- the multi-line cell in C2;

- embedded comma in C1 and D3;

- blank cells, and cell with a space in D4.

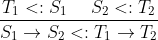

Saving that as CSV in Excel, you will get this csv file:

A1,B1,"C1,+comma",D1

,B2,"line 1

line 2",D2

,,C3,"D3,+comma"

,,,D4 space

Assumably, you will want to read that into Python with the blank cells still having meaning and the embedded comma treated correctly.

So, this:

with open("test.csv", 'rU') as csvIN:

outCSV=(line for line in csv.reader(csvIN, dialect='excel'))

for row in outCSV:

print("Length: ", len(row), row)

correctly produces the 4x4 List of List matrix represented in Excel:

Length: 4 ['A1', 'B1', 'C1,+comma', 'D1']

Length: 4 ['', 'B2', 'line 1\nline 2', 'D2']

Length: 4 ['', '', 'C3', 'D3,+comma']

Length: 4 ['', '', '', 'D4 space']

The example CSV file you posted lacks quotes around the field with an 'extra newline' rendering the meaning of that newline ambiguous. Is it a new row or a multi-line field?

Therefor, you can only interpret this csv file:

A, B, C, D, E, F

123, 456, tree

, very, bla, indigo

as a one dimension list like so:

with open("test.csv", 'rU') as csvIN:

outCSV=[field.strip() for row in csv.reader(csvIN, delimiter=',')

for field in row if field]

Which produces this one dimensional list:

['A', 'B', 'C', 'D', 'E', 'F', '123', '456', 'tree', 'very', 'bla', 'indigo']

This can then be interpreted and regrouped into any sub grouping as you wish.

The idiomatic regrouping method in python uses zip like so:

>>> zip(*[iter(outCSV)]*6)

[('A', 'B', 'C', 'D', 'E', 'F'), ('123', '456', 'tree', 'very', 'bla', 'indigo')]

Or, if you want a list of lists, this is also idiomatic:

>>> [outCSV[i:i+6] for i in range(0, len(outCSV),6)]

[['A', 'B', 'C', 'D', 'E', 'F'], ['123', '456', 'tree', 'very', 'bla', 'indigo']]

If you can change how your CSV file is created, it will be less ambiguous to interpret.