Which is more random?

rand()

OR

rand() + rand()

OR

rand() * rand()

How can one determine this? It is really puzzling me! It feels as though they may all be equally random, but how can one be absolutely sure?

Anyone?

The concept of being "more random" doesn't really make sense. Your three methods give different distributions of random numbers. I can illustrate this in Matlab. First define a function f that, when called, gives you an array of 10,000 random numbers:

f = @() rand(10000,1);

Now look at the distribution of your three methods.

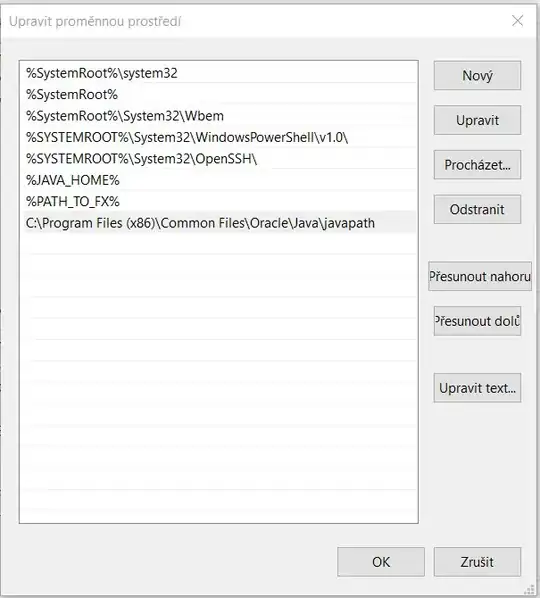

Your first method, hist(f()), gives a uniform distribution:

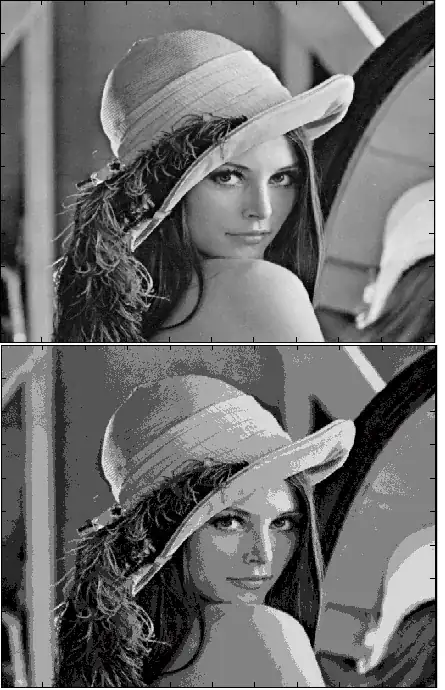

Your second method, hist(f() + f()), gives a distribution that is peaked in the centre:

Your third method, hist(f() .* f()), gives a distribution where numbers close to zero are more likely:
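The three shapes can also be written down exactly. This is a sketch that assumes each rand() call returns an independent value uniform on [0, 1); the question does not say which rand() is meant, and the symbols U_1, U_2, X, S and P are introduced here only for illustration.

$$
\begin{aligned}
X &= U_1: & f_X(x) &= 1, \quad 0 \le x \le 1, & \mathbb{E}[X] &= \tfrac{1}{2} \\
S &= U_1 + U_2: & f_S(s) &= \begin{cases} s, & 0 \le s \le 1 \\ 2 - s, & 1 < s \le 2 \end{cases} & \mathbb{E}[S] &= 1 \\
P &= U_1 U_2: & f_P(p) &= -\ln p, \quad 0 < p \le 1, & \mathbb{E}[P] &= \tfrac{1}{4}
\end{aligned}
$$

Under that assumption the sum has a triangular density peaked at 1, and the density of the product grows without bound as p approaches 0, which matches the histograms described above.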

As for the amount of entropy, I would guess it is comparable across all three.

If you need more entropy (randomness) than you currently have, use a cryptographically strong random number generator.
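As a rough illustration of what that switch might look like, here is a minimal Python sketch. The choice of Python is an assumption, since the question does not name a language; `secrets.SystemRandom` and `secrets.token_hex` come from the Python standard library and draw on the operating system's entropy source.

```python
import random
import secrets

# Ordinary pseudorandom generator: fast, but its output is predictable
# to anyone who learns the internal state.
weak = random.random()

# Generator backed by the operating system's entropy source.
strong_rng = secrets.SystemRandom()
strong = strong_rng.random()      # float in [0.0, 1.0)

# For keys or tokens, ask for random bytes directly instead of floats.
token = secrets.token_hex(16)     # 16 random bytes as 32 hex characters

print(weak, strong, token)
```

Note that multiplying or adding the outputs of the weak generator would not turn it into a strong one; only the source of the randomness changes that.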

They are comparable because if an attacker could guess the next pseudorandom value returned by

rand()

it would not be significantly harder for them to guess the next

rand()*rand()
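The argument can be sketched in Python (an assumed language, since the question names none). The scenario is hypothetical: the attacker is simply handed a copy of the generator state via `getstate`/`setstate`, standing in for whatever method a real attacker might use to recover it, and the seed value is arbitrary.

```python
import random

# Hypothetical victim generator; the seed value is arbitrary.
victim = random.Random(12345)

# Assumption: the attacker has recovered the internal state.
# Here it is simply copied to keep the sketch short.
attacker = random.Random()
attacker.setstate(victim.getstate())

# The victim draws one value, then a product of two further values.
single = victim.random()
product = victim.random() * victim.random()

# The attacker reproduces both by making the same calls in the same order.
predicted_single = attacker.random()
predicted_product = attacker.random() * attacker.random()

print(single == predicted_single)    # True
print(product == predicted_product)  # True
```

Once the state is known, combining outputs arithmetically adds no extra difficulty for the attacker: the product is just a deterministic function of values that are already predictable.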

Nevertheless, the argument about the different distributions is important and valid!