Can CUDA compute the value of a function like this fast enough?

Many cost functionals are expressed in the form of summations of a certain number of terms. Examples are the:

Sphere function

Rosenbrock function

Styblinski-Tang function

In all those cases, the evaluation of the cost function can be performed by a reduction, or better, a transformation followed by a reduction.

CUDA Thrust has thrust::transform_reduce which can surely serve the scope, but of course you can set up your own transformation + reduction routines.

Below, I'm providing an example on how you can compute the Rosenbrock functional using either CUDA Thrust or a customized version of the reduction routine offered by the CUDA examples. In the latter case, a pointer to a __device__ transformation function is passed to the customized transform_reduce function, if the EXTERNAL keyword is defined, or the the transformation function is defined and compiled in the compilation unit of the customized transform_reduce routine.

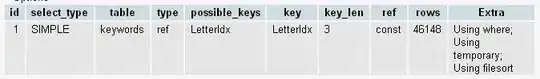

Some performance results on a Kepler K20c card for the non-EXTERNAL case:

N = 90000 Thrust = 0.055ms Customized = 0.059ms

N = 900000 Thrust = 0.67ms Customized = 0.14ms

N = 9000000 Thrust = 0.85ms Customized = 0.87ms

Here is the code. For the timing functions, please see OrangeOwlSolutions/Timing and OrangeOwlSolutions/CUDA_Utilities github projects.

Please, note that separate compilation is required.

kernel.cu

// --- Requires separate compilation

#include <stdio.h>

#include <thrust/device_vector.h>

#include "transform_reduce.cuh"

#include "Utilities.cuh"

#include "TimingCPU.h"

#include "TimingGPU.cuh"

/***************************************/

/* COST FUNCTION - GPU/CUSTOMIZED CASE */

/***************************************/

// --- Transformation function

__device__ __forceinline__ float transformation(const float * __restrict__ x, const int i) { return (100.f * (x[i+1] - x[i] * x[i]) * (x[i+1] - x[i] * x[i]) + (x[i] - 1.f) * (x[i] - 1.f)) ; }

// --- Device-side function pointer

__device__ pointFunction_t dev_pfunc = transformation;

/***********************************/

/* COST FUNCTION - GPU/THRUST CASE */

/***********************************/

struct CostFunctionStructGPU{

template <typename Tuple>

__host__ __device__ float operator()(Tuple a) {

float temp1 = (thrust::get<1>(a) - thrust::get<0>(a) * thrust::get<0>(a));

float temp2 = (thrust::get<0>(a) - 1.f);

return 100.f * temp1 * temp1 + temp2 * temp2;

}

};

/********/

/* MAIN */

/********/

int main()

{

const int N = 90000000;

float *x = (float *)malloc(N * sizeof(float));

for (int i=0; i<N; i++) x[i] = 3.f;

float *d_x; gpuErrchk(cudaMalloc((void**)&d_x, N * sizeof(float)));

gpuErrchk(cudaMemcpy(d_x, x, N * sizeof(float), cudaMemcpyHostToDevice));

/************************************************/

/* "CUSTOMIZED" DEVICE-SIDE TRANSFORM REDUCTION */

/************************************************/

float customizedDeviceResult = transform_reduce(d_x, N - 1, &dev_pfunc);

TimingGPU timerGPU;

timerGPU.StartCounter();

customizedDeviceResult = transform_reduce(d_x, N - 1, &dev_pfunc);

printf("Timing for 'customized', device-side transform reduction = %f\n", timerGPU.GetCounter());

printf("Result for 'customized', device-side transform reduction = %f\n", customizedDeviceResult);

printf("\n\n");

/************************************************/

/* THRUST-BASED DEVICE-SIDE TRANSFORM REDUCTION */

/************************************************/

thrust::device_vector<float> d_vec(N,3.f);

timerGPU.StartCounter();

float ThrustResult = thrust::transform_reduce(thrust::make_zip_iterator(thrust::make_tuple(d_vec.begin(), d_vec.begin() + 1)), thrust::make_zip_iterator(thrust::make_tuple(d_vec.begin() + N - 1, d_vec.begin() + N)), CostFunctionStructGPU(), 0.f, thrust::plus<float>());

printf("Timing for Thrust-based, device-side transform reduction = %f\n", timerGPU.GetCounter());

printf("Result for Thrust-based, device-side transform reduction = %f\n", ThrustResult);

printf("\n\n");

/*********************************/

/* HOST-SIDE TRANSFORM REDUCTION */

/*********************************/

// thrust::host_vector<float> h_vec(d_vec);

//sum_host = sum_host + transformation(thrust::raw_pointer_cast(h_vec.data()), i);

TimingCPU timerCPU;

timerCPU.StartCounter();

float sum_host = 0.f;

for (int i=0; i<N-1; i++) {

float temp = (100.f * (x[i+1] - x[i] * x[i]) * (x[i+1] - x[i] * x[i]) + (x[i] - 1.f) * (x[i] - 1.f));

sum_host = sum_host + temp;

//printf("%i %f %f\n", i, temp, sum_host);

}

printf("Timing for host-side transform reduction = %f\n", timerCPU.GetCounter());

printf("Result for host-side transform reduction = %f\n", sum_host);

printf("\n\n");

sum_host = 0.f;

float c = 0.f;

for (int i=0; i<N-1; i++) {

float temp = (100.f * (x[i+1] - x[i] * x[i]) * (x[i+1] - x[i] * x[i]) + (x[i] - 1.f) * (x[i] - 1.f)) - c;

float t = sum_host + temp;

c = (t - sum_host) - temp;

sum_host = t;

}

printf("Result for host-side transform reduction = %f\n", sum_host);

// cudaDeviceReset();

}

transform_reduce.cuh

#ifndef TRANSFORM_REDUCE_CUH

#define TRANSFORM_REDUCE_CUH

// --- Function pointer type

// --- Complete with your own favourite instantiations

typedef float(*pointFunction_t)(const float * __restrict__, const int);

template <class T> T transform_reduce(T *, unsigned int, pointFunction_t *);

#endif

transform_reduce.cu

#include <stdio.h>

#include "Utilities.cuh"

#include "transform_reduce.cuh"

#define BLOCKSIZE 512

#define warpSize 32

// --- Host-side function pointer

pointFunction_t h_pfunc;

// --- Uncomment if you want to apply CUDA error checking to the kernel launches

//#define DEBUG

//#define EXTERNAL

/*******************************************************/

/* CALCULATING THE NEXT POWER OF 2 OF A CERTAIN NUMBER */

/*******************************************************/

unsigned int nextPow2(unsigned int x)

{

--x;

x |= x >> 1;

x |= x >> 2;

x |= x >> 4;

x |= x >> 8;

x |= x >> 16;

return ++x;

}

/*************************************/

/* CHECK IF A NUMBER IS A POWER OF 2 */

/*************************************/

// --- Note: although x = 1 is a power of 2 (1 = 2^0), this routine returns 0 for x == 1.

bool isPow2(unsigned int x) {

if (x == 1) return 0;

else return ((x&(x-1))==0);

}

/***************************/

/* TRANSFORMATION FUNCTION */

/***************************/

template <class T>

__host__ __device__ __forceinline__ T transformation(const T * __restrict__ x, const int i) { return ((T)100 * (x[i+1] - x[i] * x[i]) * (x[i+1] - x[i] * x[i]) + (x[i] - (T)1) * (x[i] - (T)1)) ; }

/********************/

/* REDUCTION KERNEL */

/********************/

/*

This version adds multiple elements per thread sequentially. This reduces the overall

cost of the algorithm while keeping the work complexity O(n) and the step complexity O(log n).

(Brent's Theorem optimization)

Note, this kernel needs a minimum of 64*sizeof(T) bytes of shared memory.

In other words if blockSize <= 32, allocate 64*sizeof(T) bytes.

If blockSize > 32, allocate blockSize*sizeof(T) bytes.

*/

template <class T, unsigned int blockSize, bool nIsPow2>

__global__ void reductionKernel(T *g_idata, T *g_odata, unsigned int N, pointFunction_t pPointTransformation)

{

extern __shared__ T sdata[];

unsigned int tid = threadIdx.x; // Local thread index

unsigned int i = blockIdx.x*(blockDim.x*2) + threadIdx.x; // Global thread index - Fictitiously double the block dimension

unsigned int gridSize = blockSize*2*gridDim.x;

// --- Performs the first level of reduction in registers when reading from global memory on multiple elements per thread.

// More blocks will result in a larger gridSize and therefore fewer elements per thread

T mySum = 0;

while (i < N) {

#ifdef EXTERNAL

mySum += (*pPointTransformation)(g_idata, i);

#else

mySum += transformation(g_idata, i);

#endif

// --- Ensure we don't read out of bounds -- this is optimized away for powerOf2 sized arrays

if (nIsPow2 || i + blockSize < N)

#ifdef EXTERNAL

mySum += (*pPointTransformation)(g_idata, i+blockSize);

#else

mySum += transformation(g_idata, i+blockSize);

#endif

i += gridSize; }

// --- Each thread puts its local sum into shared memory

sdata[tid] = mySum;

__syncthreads();

// --- Reduction in shared memory. Fully unrolled loop.

if ((blockSize >= 512) && (tid < 256)) sdata[tid] = mySum = mySum + sdata[tid + 256];

__syncthreads();

if ((blockSize >= 256) && (tid < 128)) sdata[tid] = mySum = mySum + sdata[tid + 128];

__syncthreads();

if ((blockSize >= 128) && (tid < 64)) sdata[tid] = mySum = mySum + sdata[tid + 64];

__syncthreads();

#if (__CUDA_ARCH__ >= 300 )

// --- Single warp reduction by shuffle operations

if ( tid < 32 )

{

// --- Last iteration removed from the for loop, but needed for shuffle reduction

mySum += sdata[tid + 32];

// --- Reduce final warp using shuffle

for (int offset = warpSize/2; offset > 0; offset /= 2) mySum += __shfl_down(mySum, offset);

//for (int offset=1; offset < warpSize; offset *= 2) mySum += __shfl_xor(mySum, i);

}

#else

// --- Reduction within a single warp. Fully unrolled loop.

if ((blockSize >= 64) && (tid < 32)) sdata[tid] = mySum = mySum + sdata[tid + 32];

__syncthreads();

if ((blockSize >= 32) && (tid < 16)) sdata[tid] = mySum = mySum + sdata[tid + 16];

__syncthreads();

if ((blockSize >= 16) && (tid < 8)) sdata[tid] = mySum = mySum + sdata[tid + 8];

__syncthreads();

if ((blockSize >= 8) && (tid < 4)) sdata[tid] = mySum = mySum + sdata[tid + 4];

__syncthreads();

if ((blockSize >= 4) && (tid < 2)) sdata[tid] = mySum = mySum + sdata[tid + 2];

__syncthreads();

if ((blockSize >= 2) && ( tid < 1)) sdata[tid] = mySum = mySum + sdata[tid + 1];

__syncthreads();

#endif

// --- Write result for this block to global memory. At the end of the kernel, global memory will contain the results for the summations of

// individual blocks

if (tid == 0) g_odata[blockIdx.x] = mySum;

}

/******************************/

/* REDUCTION WRAPPER FUNCTION */

/******************************/

template <class T>

T transform_reduce_inner(T *g_idata, unsigned int N, pointFunction_t h_pfunc) {

// --- Reduction parameters

const int NumThreads = (N < BLOCKSIZE) ? nextPow2(N) : BLOCKSIZE;

const int NumBlocks = (N + NumThreads - 1) / NumThreads;

const int smemSize = (NumThreads <= 32) ? 2 * NumThreads * sizeof(T) : NumThreads * sizeof(T);

// --- Device memory space where storing the partial reduction results

T *g_odata; gpuErrchk(cudaMalloc((void**)&g_odata, NumBlocks * sizeof(T)));

if (isPow2(N)) {

switch (NumThreads) {

case 512: reductionKernel<T, 512, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 256: reductionKernel<T, 256, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 128: reductionKernel<T, 128, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 64: reductionKernel<T, 64, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 32: reductionKernel<T, 32, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 16: reductionKernel<T, 16, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 8: reductionKernel<T, 8, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 4: reductionKernel<T, 4, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 2: reductionKernel<T, 2, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 1: reductionKernel<T, 1, true><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

}

#ifdef DEBUG

gpuErrchk(cudaPeekAtLastError());

gpuErrchk(cudaDeviceSynchronize());

#endif

}

else {

switch (NumThreads) {

case 512: reductionKernel<T, 512, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 256: reductionKernel<T, 256, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 128: reductionKernel<T, 128, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 64: reductionKernel<T, 64, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 32: reductionKernel<T, 32, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 16: reductionKernel<T, 16, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 8: reductionKernel<T, 8, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 4: reductionKernel<T, 4, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 2: reductionKernel<T, 2, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

case 1: reductionKernel<T, 1, false><<< NumBlocks, NumThreads, smemSize>>>(g_idata, g_odata, N, h_pfunc); break;

}

#ifdef DEBUG

gpuErrchk(cudaPeekAtLastError());

gpuErrchk(cudaDeviceSynchronize());

#endif

}

// --- The last part of the reduction, which would be expensive to perform on the device, is executed on the host

T *host_vector = (T *)malloc(NumBlocks * sizeof(T));

gpuErrchk(cudaMemcpy(host_vector, g_odata, NumBlocks * sizeof(T), cudaMemcpyDeviceToHost));

T sum_transformReduce = (T)0;

for (int i=0; i<NumBlocks; i++) sum_transformReduce = sum_transformReduce + host_vector[i];

return sum_transformReduce;

}

template <class T>

T transform_reduce(T *g_idata, unsigned int N, pointFunction_t *dev_pfunc) {

#ifdef EXTERNAL

gpuErrchk(cudaMemcpyFromSymbol(&h_pfunc, *dev_pfunc, sizeof(pointFunction_t)));

#endif

T customizedDeviceResult = transform_reduce_inner(g_idata, N, h_pfunc);

return customizedDeviceResult;

}

// --- Complete with your own favourite instantiations

template float transform_reduce(float *, unsigned int, pointFunction_t *);