I'm looking for an HTML Parser module for Python that can help me get the tags in the form of Python lists/dictionaries/objects.

If I have a document of the form:

    <html>
    <head>Heading</head>
    <body attr1='val1'>
        <div class='container'>
            <div id='class'>Something here</div>
            <div>Something else</div>
        </div>
    </body>
    </html>

then it should give me a way to access the nested tags by the name or id of the HTML tag, so that I can ask it for, say, the content/text of the div tag with class='container' inside the body tag, or something similar.
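To make the kind of access I mean concrete, here is a minimal sketch built only on the standard library's `html.parser.HTMLParser`. The names `Node`, `TreeBuilder`, `find`, `find_all`, and `text` are hypothetical helpers made up for illustration, not part of any library, and the sketch assumes well-nested input with explicit closing tags (an unclosed `<br>` would confuse it).

```python
from html.parser import HTMLParser


class Node:
    """Hypothetical tree node: tag name, attribute dict, ordered children."""

    def __init__(self, tag, attrs=None, parent=None):
        self.tag = tag
        self.attrs = dict(attrs or [])
        self.parent = parent
        self.children = []  # mix of Node objects and text strings

    def find_all(self, tag=None, attrs=None):
        # Depth-first search over descendants, matching tag name and attributes.
        wanted = attrs or {}
        for child in self.children:
            if isinstance(child, Node):
                if (tag is None or child.tag == tag) and all(
                    child.attrs.get(k) == v for k, v in wanted.items()
                ):
                    yield child
                yield from child.find_all(tag, attrs)

    def find(self, tag=None, attrs=None):
        # First match in document order, or None if nothing matches.
        return next(self.find_all(tag, attrs), None)

    def text(self):
        # Text of this element and all descendants, joined with single spaces.
        parts = [c if isinstance(c, str) else c.text() for c in self.children]
        return " ".join(p for p in parts if p)


class TreeBuilder(HTMLParser):
    """Builds a Node tree from the parser's start/end/data events."""

    def __init__(self):
        super().__init__()
        self.root = Node("[document]")
        self.current = self.root

    def handle_starttag(self, tag, attrs):
        node = Node(tag, attrs, parent=self.current)
        self.current.children.append(node)
        self.current = node

    def handle_endtag(self, tag):
        # Close the nearest open element with this name; ignore stray end tags.
        node = self.current
        while node is not self.root and node.tag != tag:
            node = node.parent
        if node is not self.root:
            self.current = node.parent

    def handle_data(self, data):
        if data.strip():
            self.current.children.append(data.strip())


doc = """<html>
<head>Heading</head>
<body attr1='val1'>
<div class='container'>
<div id='class'>Something here</div>
<div>Something else</div>
</div>
</body>
</html>"""

builder = TreeBuilder()
builder.feed(doc)
builder.close()
root = builder.root

body = root.find("body")
container = body.find("div", {"class": "container"})
print(body.attrs)        # {'attr1': 'val1'}
print(container.text())  # Something here Something else
```

Attributes are passed as a dictionary because `class` is a reserved word in Python and cannot be used as a keyword argument.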

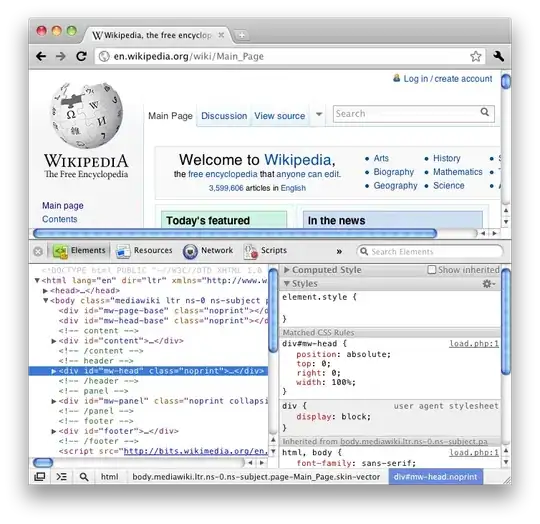

If you've used Firefox's "Inspect element" feature (view HTML), you'll know that it shows all the tags nested like a tree.
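As a rough illustration of that tree view, the sketch below uses the built-in `html.parser.HTMLParser` to record one indented line per opening tag. `OutlinePrinter` is a hypothetical name made up for this example, and it assumes every tag is explicitly closed and properly nested.

```python
from html.parser import HTMLParser


class OutlinePrinter(HTMLParser):
    """Hypothetical helper: records one indented line per start tag."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.lines = []

    def handle_starttag(self, tag, attrs):
        # Render attributes back as name="value" pairs after the tag name.
        attr_text = "".join(f' {name}="{value}"' for name, value in attrs)
        self.lines.append("  " * self.depth + f"<{tag}{attr_text}>")
        self.depth += 1

    def handle_endtag(self, tag):
        # Step back out one level; never go below the top level.
        self.depth = max(0, self.depth - 1)


printer = OutlinePrinter()
printer.feed(
    "<html><head>Heading</head><body attr1='val1'>"
    "<div class='container'><div id='class'>Something here</div>"
    "<div>Something else</div></div></body></html>"
)
printer.close()
print("\n".join(printer.lines))
# <html>
#   <head>
#   <body attr1="val1">
#     <div class="container">
#       <div id="class">
#       <div>
```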

I'd prefer a built-in module but that might be asking a little too much.

I went through a lot of questions on Stack Overflow and a few blogs, and most of them suggest BeautifulSoup, lxml, or HTMLParser. Few of them detail the functionality, though; they simply end as a debate over which one is faster or more efficient.