I know what a tail recursive algorithm is as written out in this SO answer. However I am going through this video of quick sort algorithm from MIT and at 18:30 seconds the professor says that this is tail recursive algorithm. I fail to connect how this is tail recursive . We are not doing calculation at any step of recursion or are we ? Can you explain why this is cited as an example of tail recursive algorithm . Please base your answer on the premise that I know what an recursive algorithm is . The part that isn't clear to me is why it is called tail recursive ?

-

2Did you read that wikipedia article from your previous question? – Andrey Aug 08 '12 at 11:59

-

2@Andrey yes I did but I found the answer in SO that I linked to to be easier and clearer to understand. – Geek Aug 08 '12 at 12:04

-

possible duplicate of [What is tail-recursion?](http://stackoverflow.com/questions/33923/what-is-tail-recursion). Once you see the definition of tail recursive, an the definition of the algorithm at 17:28, it is clear that it is tail recursive, since the return value of the recursive step is the return value of a call to itself. – Ciro Santilli OurBigBook.com Feb 28 '15 at 10:25

3 Answers

Tail recursion is not about calculating in steps. It is about "the recursion could be evaluated without building call stack." The example given by What is tail recursion? is an special caseof this. If you look the example more deeply, what you can find is that

def recsum(x):

if x==1:

return x

else:

return x+recsum(x-1)

1) to run the above code successfully, you need to build the call stack. But,

def tailrecsum(x,running_total=0):

if x==0:

return running_total

else:

return tailrecsum(x-1,running_total+x)

2) to run the above code, you don't need to build the call stack because of running_total. Just build the call stack for the "original call" and for the recursion, no need to build call stack, because the status required to evaluate this function is stored in running_total.

And, about the quick-sort, I think the professor probably meant that quick-sort could be optimized by "using" tail-recusion. For the two branching parts of qsort(), we can use tail-recursion on one side that has higher items (based on pivot position).

-

2can you put the call stacks in your answer to explain it further . To me it seems like you need to build the call stack in both the procedures . tailrecsum calls tail recursive sum calls tailrecsum .... So the call stack is building up ...Isn't it ? – Geek Aug 08 '12 at 15:06

-

1This is continuation from my previous comment ...How can the compiler compute a recursive call without building the stack ? How eaxctly you meant by "building the call stack "? – Geek Aug 08 '12 at 15:16

-

2@Geek: I'm just a beginner in this subject, and I'm in the process of reading "Structure and Interpretation of Computer Programs" (SICP), which is available for free on-line; the concept of tail-recursion is intimately related with the topic "linear recursion versus iteration". SICP has very much to say about it here: http://mitpress.mit.edu/sicp/full-text/book/book-Z-H-11.html#%_sec_1.2.1. This link from SICP explains it very clearly. – favq Aug 09 '12 at 00:08

-

2The big difference between recsum and tailrecsum is that the first one generates a recursive process, whereas the last one generates an iterative process. In recsum, notice that each call needs to return its value so that it can be summed to x. After the last call to recsum is made and base case is reached, there is now a chain of deferred additions that need to be computed. So, the entire chain of calls must be remembered until the last call is made. This is linear recursion (the SICP link that I gave you explains it very clearly). – favq Aug 09 '12 at 00:10

-

In tailrecsum, it is different. The call to tailrecsum with "x" as parameter is reduced/converted to a new call to tailrecsum with "x-1" as parameter, and so on; there are no deferred additions left to be computed afterwards. So, the last call to tailrecsum (when x reaches zero) will simply return the final answer, which is stored in running_total, so, the previous calls never have to be "remembered". This means that it should need constant memory to execute, whereas the linear recursive one requires memory that grows with the number of calls. Again, SICP explains this very clearly. – favq Aug 09 '12 at 00:14

-

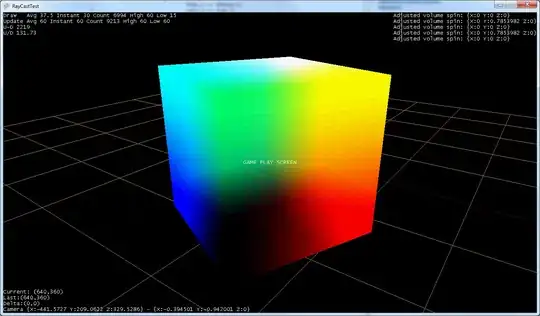

1I think anonymous explained nicely, and also I have uploaded a simple diagram (about building call stack: ignoring return address and local variables and just focusing on params). Typically, tailrecsum should build call stack as depicted in the middle. But, if compiler is smart enough, it knows that tailrecsum do not need to build the callstack, because it already knows the answer (in the param of running_total) – jaeyong Aug 09 '12 at 02:34

-

1And, about not building the call stack, it just overwrites the param section of the previous call stack without modifying the return address if I recall correctly. – jaeyong Aug 09 '12 at 02:35

Have a look at the wiki page of Quicksort. There is a version of tail recursive

function quicksort(array, 'left', 'right')

// If the list has 2 or more items

if 'left' < 'right'

// See "Choice of pivot" section below for possible choices

choose any 'pivotIndex' such that 'left' ≤ 'pivotIndex' ≤ 'right'

// Get lists of bigger and smaller items and final position of pivot

'pivotNewIndex' := partition(array, 'left', 'right', 'pivotIndex')

// Recursively sort elements smaller than the pivot

quicksort(array, 'left', 'pivotNewIndex' - 1)

// Recursively sort elements at least as big as the pivot

quicksort(array, 'pivotNewIndex' + 1, 'right')

According to the definition of Tail recursion, the last method call of quicksort is itself, that's tail recursion. But some other implementations are not tail recursive.

quicksort(left) + pivot + quicksort(right)

Because the final action in quicksort is sorted_left + pivot + sorted_right

- 5,177

- 3

- 27

- 28

The first step(s) of the recursive function is partitioning. And then, as a last step, you call the recursive function on the two partitions.

From wikipedia:

In computer science, a tail call is a subroutine call that happens inside another procedure as its final action; it may produce a return value which is then immediately returned by the calling procedure.

- 2,626

- 2

- 24

- 44