Well I had a little fun with this one. The first thing I thought of when I first read the problem was group theory (the symmetric group Sn, in particular). The for loop simply builds a permutation σ in Sn by composing transpositions (i.e. swaps) on each iteration. My math is not all that spectacular and I'm a little rusty, so if my notation is off bear with me.

Overview

Let A be the event that our array is unchanged after permutation. We are ultimately asked to find the probability of event A, Pr(A).

My solution follows this procedure:

- Consider all possible permutations (i.e. reorderings of our array)

- Partition these permutations into disjoint sets based on the number of so-called identity transpositions they contain. This helps reduce the problem to even permutations only.

- Determine the probability of obtaining the identity permutation given that the permutation is even (and of a particular length).

- Sum these probabilities to obtain the overall probability the array is unchanged.

1) Possible Outcomes

Notice that each iteration of the for loop creates a swap (or transposition) that results in one of two things (but never both):

- Two elements are swapped.

- An element is swapped with itself. For our intents and purposes, the array is unchanged.

Let's give the second case a name and define an identity transposition as follows:

An identity transposition occurs when a number is swapped with itself.

That is, when n == m in the above for loop.

For any given run of the listed code, we compose N transpositions. There can be 0, 1, 2, ... , N of the identity transpositions appearing in this "chain".

For example, consider an N = 3 case:

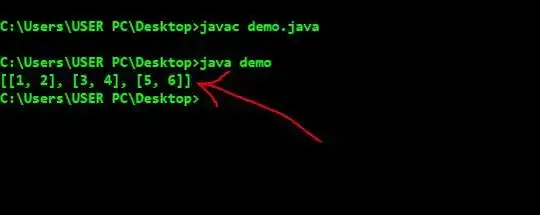

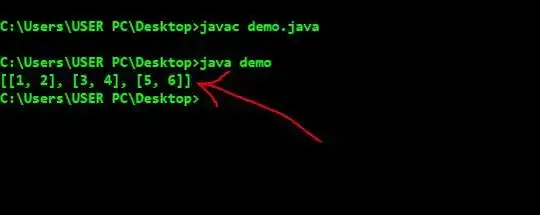

Given our input [0, 1, 2].

Swap (0 1) and get [1, 0, 2].

Swap (1 1) and get [1, 0, 2]. ** Here is an identity **

Swap (2 2) and get [1, 0, 2]. ** And another **

Note that there is an odd number of non-identity transpositions (1) and the array is changed.
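Here is a minimal Python sketch of the trace above. The helper name `apply_swaps` is my own, and I'm assuming each loop iteration boils down to one (n, m) swap on the array.

```python
def apply_swaps(arr, swaps):
    """Return a copy of arr after applying each (n, m) swap in order."""
    out = list(arr)
    for n, m in swaps:
        out[n], out[m] = out[m], out[n]
    return out

# The N = 3 chain from the example: one real swap, then two identities.
swaps = [(0, 1), (1, 1), (2, 2)]
result = apply_swaps([0, 1, 2], swaps)

# An identity transposition is any swap with n == m.
identity_count = sum(1 for n, m in swaps if n == m)
non_identity_count = len(swaps) - identity_count

print(result, identity_count, non_identity_count)
```

With one non-identity transposition (an odd count), the array ends up changed, as noted above.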

2) Partitioning Based On the Number of Identity Transpositions

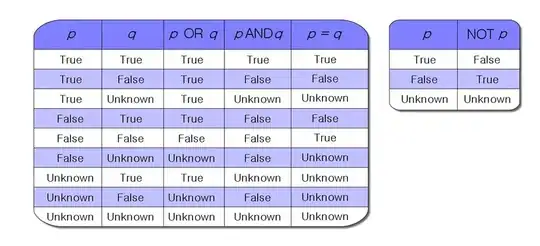

Let K_i be the event that i identity transpositions appear in a given permutation. Note this forms an exhaustive partition of all possible outcomes:

- No permutation can have two different quantities of identity transpositions simultaneously, and

- All possible permutations must have between 0 and N identity transpositions.

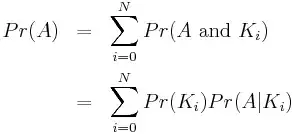

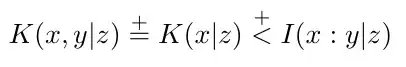

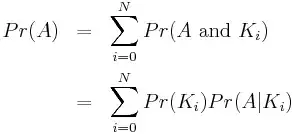

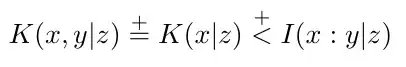

Thus we can apply the Law of Total Probability:

$$\Pr(A) = \sum_{i=0}^{N} \Pr(K_i)\,\Pr(A \mid K_i)$$

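Now we can finally take advantage of the partition. Note that when the number of non-identity transpositions, N - i, is odd, there is no way the array can go unchanged*. Thus Pr(A | K_i) = 0 whenever N - i is odd, and the sum reduces to:

$$\Pr(A) = \sum_{\substack{0 \le i \le N \\ N - i \text{ even}}} \Pr(K_i)\,\Pr(A \mid K_i)$$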

*From group theory, a permutation is even or odd but never both. Therefore an odd permutation cannot be the identity permutation (since the identity permutation is even).
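If you don't want to take the parity argument on faith, a brute-force check is easy for tiny N. This is only a sketch: the function name `odd_chains_never_fix` is made up, and I'm assuming the loop picks n and m independently and uniformly from 0..N-1 on each of its N iterations.

```python
from itertools import product

def odd_chains_never_fix(N):
    """True if no chain with an odd number of non-identity swaps restores the array."""
    pairs = list(product(range(N), repeat=2))  # every possible (n, m) choice
    start = list(range(N))
    for chain in product(pairs, repeat=N):     # every possible run of the loop
        arr = start[:]
        non_identity = 0
        for n, m in chain:
            arr[n], arr[m] = arr[m], arr[n]
            non_identity += (n != m)
        if non_identity % 2 == 1 and arr == start:
            return False
    return True

print(odd_chains_never_fix(3))
```

This enumerates all 9^3 = 729 chains for N = 3, so it only scales to very small N.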

3) Determining Probabilities

So we now must determine two probabilities for N - i even: Pr(K_i) and Pr(A | K_i).

The First Term

The first term, Pr(K_i), represents the probability of obtaining a permutation with i identity transpositions. This turns out to be binomial since for each iteration of the for loop:

- The outcome is independent of the results before it, and

- The probability of creating an identity transposition is the same, namely 1/N.

Thus for N trials, the probability of obtaining i identity transpositions is:

$$\Pr(K_i) = \binom{N}{i} \left(\frac{1}{N}\right)^{i} \left(1 - \frac{1}{N}\right)^{N-i}$$
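The binomial claim is easy to evaluate numerically. The sketch below uses exact fractions; `pr_k` is just a name I picked, and the success probability 1/N comes from the argument above.

```python
from fractions import Fraction
from math import comb

def pr_k(N, i):
    """Binomial probability of exactly i identity transpositions in N swaps."""
    p = Fraction(1, N)  # chance that a single swap has n == m
    return comb(N, i) * p**i * (1 - p)**(N - i)

N = 4
probs = [pr_k(N, i) for i in range(N + 1)]
for i, pr in enumerate(probs):
    print(i, pr, round(float(pr), 3))
```

For N = 4 this gives 81/256, 27/128 and 1/256 for i = 0, 2 and 4, i.e. roughly 0.316, 0.211 and 0.004.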

The Second Term

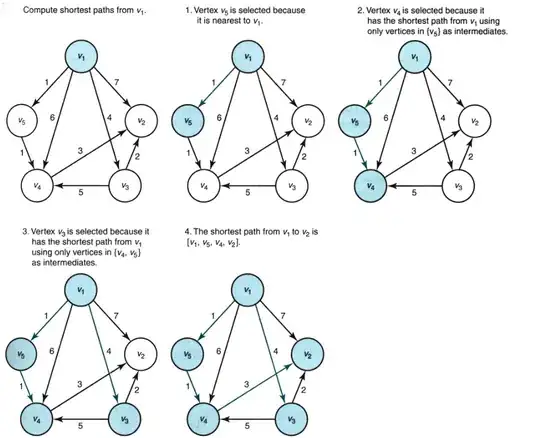

So if you've made it this far, we have reduced the problem to finding Pr(A | K_i) for N - i even. This represents the probability of obtaining an identity permutation given i of the transpositions are identities. I use a naive counting approach to determine the number of ways of achieving the identity permutation over the number of possible permutations.

First consider the transpositions (n, m) and (m, n) equivalent. Then, let M be the number of distinct non-identity transpositions possible, i.e. M = C(N, 2) = N(N - 1)/2 (so M = 6 for N = 4). We will use this quantity frequently.
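To make M concrete, here is a small sketch that lists the non-identity transpositions for N = 4, treating (n, m) and (m, n) as the same swap. The helper name `transpositions` is hypothetical.

```python
from itertools import combinations
from math import comb

def transpositions(N):
    """All unordered pairs (n, m) with n < m, i.e. the non-identity swaps."""
    return list(combinations(range(N), 2))

ts = transpositions(4)
M = len(ts)
print(ts)
print(M, comb(4, 2))
```

For N = 4 there are 6 such swaps, which is the M used in the rest of the counting.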

The goal here is to determine the number of ways a collection of transpositions can be combined to form the identity permutation. I will try to construct the general solution alongside an example of N = 4.

Let's consider the N = 4 case with all identity transpositions (i.e. i = N = 4). Let X represent an identity transposition. For each X, there are N possibilities (they are: n = m = 0, 1, 2, ... , N - 1). Thus there are N^i = 4^4 possibilities for achieving the identity permutation. For completeness, we add the binomial coefficient, C(N, i), to consider ordering of the identity transpositions (here it just equals 1). I've tried to depict this below with the physical layout of elements above and the number of possibilities below:

I = _X_ _X_ _X_ _X_

N * N * N * N * C(4, 4) => N^N * C(N, N) possibilities

Now without explicitly substituting N = 4 and i = 4, we can look at the general case. Combining the above with the denominator found previously, we find:

$$\Pr(A \mid K_N) = \frac{\binom{N}{N} N^{N}}{\binom{N}{N} N^{N}} = 1$$

This is intuitive. In fact, any value other than 1 should probably alarm you. Think about it: we are given the situation in which all N transpositions are said to be identities. What's the probability that the array is unchanged in this situation? Clearly, 1.

Now, again for N = 4, let's consider 2 identity transpositions (i.e. i = N - 2 = 2). As a convention, we will place the two identities at the end (and account for ordering later). We know now that we need to pick two transpositions which, when composed, will become the identity permutation. Let's place any element in the first location, call it t1. As stated above, there are M possibilities supposing t1 is not an identity (it can't be as we have already placed two).

I = _t1_ ___ _X_ _X_

M * ? * N * N

The only element left that could possibly go in the second spot is the inverse of t1, which is in fact t1 itself (and this is the only one by uniqueness of inverses). We again include the binomial coefficient: in this case we have 4 open locations and we are looking to place 2 identity transpositions. How many ways can we do that? 4 choose 2.

I = _t1_ _t1_ _X_ _X_

M * 1 * N * N * C(4, 2) => C(N, N-2) * M * N^(N-2) possibilities

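Again looking at the general case, this all corresponds to:

$$\Pr(A \mid K_{N-2}) = \frac{\binom{N}{N-2}\, M\, N^{N-2}}{\binom{N}{N-2}\, M^{2}\, N^{N-2}} = \frac{1}{M}$$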

Finally we do the N = 4 case with no identity transpositions (i.e. i = N - 4 = 0). Since there are a lot of possibilities, it starts to get tricky and we must be careful not to double count. We start similarly by placing a single element in the first spot and working out possible combinations. Take the easiest first: the same transposition 4 times.

I = _t1_ _t1_ _t1_ _t1_

M * 1 * 1 * 1 => M possibilities

Let's now consider two unique elements t1 and t2. There are M possibilities for t1 and only M-1 possibilities for t2 (since t2 cannot be equal to t1). If we exhaust all arrangements, we are left with the following patterns:

I = _t1_ _t1_ _t2_ _t2_

M * 1 * M-1 * 1 => M * (M - 1) possibilities (1)st

= _t1_ _t2_ _t1_ _t2_

M * M-1 * 1 * 1 => M * (M - 1) possibilities (2)nd

= _t1_ _t2_ _t2_ _t1_

M * M-1 * 1 * 1 => M * (M - 1) possibilities (3)rd

Now let's consider three unique elements, t1, t2, t3. Let's place t1 first and then t2. As usual, we have:

I = _t1_ _t2_ ___ ___

M * ? * ? * ?

We can't yet say how many possible t2s there can be, and we will see why in a minute.

We now place t1 in the third spot. Notice, t1 must go there since if it were to go in the last spot, we would just be recreating the (3)rd arrangement above. Double counting is bad! This leaves the third unique element t3 to the final position.

I = _t1_ _t2_ _t1_ _t3_

M * ? * 1 * ?

So why did we have to take a minute to consider the number of t2s more closely? The transpositions t1 and t2 cannot be disjoint (i.e. they must share one, and only one since they also cannot be equal, of their n or m). The reason is that if they were disjoint, we could swap the order of the transpositions. This means we would be double counting the (1)st arrangement.

Say t1 = (n, m). t2 must be of the form (n, x) or (y, m) for some x and y in order to be non-disjoint. Note that x may not be n or m and y may not be n or m. Thus, the number of possible transpositions that t2 could be is actually 2 * (N - 2).
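The 2 * (N - 2) count can be checked by listing the candidates directly. A small sketch, with `overlapping` as a made-up helper name:

```python
from itertools import combinations

def overlapping(t1, N):
    """Transpositions that share exactly one element with t1."""
    return [t for t in combinations(range(N), 2)
            if len(set(t) & set(t1)) == 1]

N = 4
t2_options = overlapping((0, 1), N)
print(t2_options, len(t2_options), 2 * (N - 2))
```

For t1 = (0, 1) and N = 4, the candidates are (0, 2), (0, 3), (1, 2) and (1, 3).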

So, coming back to our layout:

I = _t1_ _t2_ _t1_ _t3_

M * 2(N-2) * 1 * ?

Now t3 must be the inverse of the composition of t1 t2 t1. Let's do it out manually:

(n, m)(n, x)(n, m) = (m, x)

Thus t3 must be (m, x). Note this is not disjoint from t1 and not equal to either t1 or t2, so there is no double counting for this case.
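The manual composition can be double-checked in code. This sketch represents a permutation as a tuple p where p[k] is the image of k; the helpers `transposition` and `compose` are my own, and I picked n, m, x = 0, 1, 2 with N = 4 purely for illustration.

```python
def transposition(a, b, N):
    """The permutation of 0..N-1 that swaps a and b."""
    p = list(range(N))
    p[a], p[b] = p[b], p[a]
    return tuple(p)

def compose(*perms):
    """Compose permutations, applying the rightmost one first."""
    N = len(perms[0])
    result = tuple(range(N))
    for p in reversed(perms):
        result = tuple(p[result[k]] for k in range(N))
    return result

N = 4
n, m, x = 0, 1, 2
t1 = transposition(n, m, N)
t2 = transposition(n, x, N)
t3 = transposition(m, x, N)

print(compose(t1, t2, t1) == t3)                    # t1 t2 t1 is (m, x)
print(compose(t1, t2, t1, t3) == tuple(range(N)))   # the full chain is the identity
```

Since t1 is its own inverse, t1 t2 t1 is a conjugation of t2, so the result does not depend on whether you read the product left to right or right to left.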

I = _t1_ _t2_ _t1_ _t3_

M * 2(N-2) * 1 * 1 => M * 2(N - 2) possibilities

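Finally, putting all of these together for N = 4 and i = 0:

$$\Pr(A \mid K_0) = \frac{M + 3M(M-1) + 2M(N-2)}{M^{4}} = \frac{6 + 90 + 24}{1296} = \frac{120}{1296}$$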
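Hand counting like this is error-prone, so a brute-force cross-check of the totals is reassuring. The sketch below counts chains of k non-identity transpositions that leave the array unchanged; `count_identity_chains` is a hypothetical name, and (n, m) and (m, n) are treated as the same swap, as above.

```python
from itertools import combinations, product

def count_identity_chains(N, k):
    """Return (chains that restore the array, total chains) for k non-identity swaps."""
    ts = list(combinations(range(N), 2))  # the M non-identity transpositions
    start = list(range(N))
    hits = 0
    for chain in product(ts, repeat=k):
        arr = start[:]
        for a, b in chain:
            arr[a], arr[b] = arr[b], arr[a]
        hits += (arr == start)
    return hits, len(ts) ** k

print(count_identity_chains(4, 2))  # i = 2 case: (6, 36)
print(count_identity_chains(4, 4))  # i = 0 case: (120, 1296)
```

It only confirms the totals for N = 4, not the individual patterns used to reach them.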

4) Putting It All Together

So that's it. Work backwards, substituting what we found into the original summation given in step 2. I computed the answer to the N = 4 case below. It matches the empirical number found in another answer very closely!

N = 4, M = 6

| i | Pr(K_i) | Pr(A \| K_i) | Product |
|---|---------|--------------|---------|
| 0 | 0.316 | 120 / 1296 | 0.029 |
| 2 | 0.211 | 6 / 36 | 0.035 |
| 4 | 0.004 | 1 / 1 | 0.004 |
| | | Sum: | 0.068 |
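For N = 4 the whole thing is small enough to enumerate exactly, which gives an independent check on the sum. This is a sketch under the assumption that the loop draws n and m independently and uniformly from 0..N-1 on each of its N iterations; `exact_unchanged_probability` is a name I made up.

```python
from fractions import Fraction
from itertools import product

def exact_unchanged_probability(N):
    """Exact Pr(A) by enumerating every possible run of the loop."""
    pairs = list(product(range(N), repeat=2))  # all N^2 ordered (n, m) choices
    start = list(range(N))
    unchanged = 0
    for chain in product(pairs, repeat=N):     # (N^2)^N equally likely runs
        arr = start[:]
        for n, m in chain:
            arr[n], arr[m] = arr[m], arr[n]
        unchanged += (arr == start)
    return Fraction(unchanged, len(pairs) ** N)

p = exact_unchanged_probability(4)
print(p, float(p))  # 35/512 0.068359375
```

That is 4480 of the 65536 possible runs, which agrees with the 0.068 from the table. The enumeration grows as N^(2N), so it is only practical for very small N.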

Correctness

It would be cool if there was a result in group theory to apply here-- and maybe there is! It would certainly help make all this tedious counting go away completely (and shorten the problem to something much more elegant). I stopped working at N = 4. For N > 5, what is given only gives an approximation (how good, I'm not sure). It is pretty clear why that is if you think about it: for example, given N = 8 transpositions, there are clearly ways of creating the identity with four unique elements which are not accounted for above. The number of ways becomes seemingly more difficult to count as the permutation gets longer (as far as I can tell...).

Anyway, I definitely couldn't do something like this within the scope of an interview. I would get as far as the denominator step if I was lucky. Beyond that, it seems pretty nasty.
