SQL Server will generally try and sort large inserts into clustered index order prior to insert anyway.

If the source for the insert is a table variable however then it will not take account of the cardinality unless the statement is recompiled after the table variable is populated. Without this it will assume the insert will only be one row.

The below script demonstrates three possible scenarios.

- The insert source is already exactly in correct order.

- The insert source is exactly in reversed order.

- The insert source is exactly in reversed order but

OPTION (RECOMPILE) is used so SQL Server compiles a plan suited for inserting 1,000,000 rows.

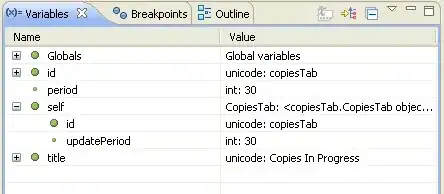

Execution Plans

The third one has a sort operator to get the inserted values into clustered index order first.

/*Create three separate identical tables*/

CREATE TABLE Tmp1(a int primary key clustered (a))

CREATE TABLE Tmp2(a int primary key clustered (a))

CREATE TABLE Tmp3(a int primary key clustered (a))

DBCC FREEPROCCACHE;

GO

DECLARE @Source TABLE (N INT PRIMARY KEY (N ASC))

INSERT INTO @Source

SELECT TOP (1000000) ROW_NUMBER() OVER (ORDER BY (SELECT 0))

FROM sys.all_columns c1, sys.all_columns c2, sys.all_columns c3

SET STATISTICS TIME ON;

PRINT 'Tmp1'

INSERT INTO Tmp1

SELECT TOP (1000000) N

FROM @Source

ORDER BY N

PRINT 'Tmp2'

INSERT INTO Tmp2

SELECT TOP (1000000) 1000000 - N

FROM @Source

ORDER BY N

PRINT 'Tmp3'

INSERT INTO Tmp3

SELECT 1000000 - N

FROM @Source

ORDER BY N

OPTION (RECOMPILE)

SET STATISTICS TIME OFF;

Verify Results and clean up

SELECT object_name(object_id) AS name,

page_count,

avg_fragmentation_in_percent,

fragment_count,

avg_fragment_size_in_pages

FROM

sys.dm_db_index_physical_stats(db_id(), object_id('Tmp1'), 1, NULL, 'DETAILED')

WHERE index_level = 0

UNION ALL

SELECT object_name(object_id) AS name,

page_count,

avg_fragmentation_in_percent,

fragment_count,

avg_fragment_size_in_pages

FROM

sys.dm_db_index_physical_stats(db_id(), object_id('Tmp2'), 1, NULL, 'DETAILED')

WHERE index_level = 0

UNION ALL

SELECT object_name(object_id) AS name,

page_count,

avg_fragmentation_in_percent,

fragment_count,

avg_fragment_size_in_pages

FROM

sys.dm_db_index_physical_stats(db_id(), object_id('Tmp3'), 1, NULL, 'DETAILED')

WHERE index_level = 0

DROP TABLE Tmp1, Tmp2, Tmp3

STATISTICS TIME ON results

+------+----------+--------------+

| | CPU Time | Elapsed Time |

+------+----------+--------------+

| Tmp1 | 6718 ms | 6775 ms |

| Tmp2 | 7469 ms | 7240 ms |

| Tmp3 | 7813 ms | 9318 ms |

+------+----------+--------------+

Fragmentation Results

+------+------------+------------------------------+----------------+----------------------------+

| name | page_count | avg_fragmentation_in_percent | fragment_count | avg_fragment_size_in_pages |

+------+------------+------------------------------+----------------+----------------------------+

| Tmp1 | 3345 | 0.448430493 | 17 | 196.7647059 |

| Tmp2 | 3345 | 99.97010463 | 3345 | 1 |

| Tmp3 | 3345 | 0.418535127 | 16 | 209.0625 |

+------+------------+------------------------------+----------------+----------------------------+

Conclusion

In this case all three of them ended up using exactly the same number of pages. However Tmp2 is 99.97% fragmented compared with only 0.4% for the other two. The insert to Tmp3 took the longest as this required an additional sort step first but this one time cost needs to be set against the benefit to future scans against the table of minimal fragmentation.

The reason why Tmp2 is so heavily fragmented can be seen from the below query

WITH T AS

(

SELECT TOP 3000 file_id, page_id, a

FROM Tmp2

CROSS APPLY sys.fn_PhysLocCracker(%%physloc%%)

ORDER BY a

)

SELECT file_id, page_id, MIN(a), MAX(a)

FROM T

group by file_id, page_id

ORDER BY MIN(a)

With zero logical fragmentation the page with the next highest key value would be the next highest page in the file but the pages are exactly in the opposite order of what they are supposed to be.

+---------+---------+--------+--------+

| file_id | page_id | Min(a) | Max(a) |

+---------+---------+--------+--------+

| 1 | 26827 | 0 | 143 |

| 1 | 26826 | 144 | 442 |

| 1 | 26825 | 443 | 741 |

| 1 | 26824 | 742 | 1040 |

| 1 | 26823 | 1041 | 1339 |

| 1 | 26822 | 1340 | 1638 |

| 1 | 26821 | 1639 | 1937 |

| 1 | 26820 | 1938 | 2236 |

| 1 | 26819 | 2237 | 2535 |

| 1 | 26818 | 2536 | 2834 |

| 1 | 26817 | 2835 | 2999 |

+---------+---------+--------+--------+

The rows arrived in descending order so for example values 2834 to 2536 were put into page 26818 then a new page was allocated for 2535 but this was page 26819 rather than page 26817.

One possible reason why the insert to Tmp2 took longer than Tmp1 is because as the rows are being inserted in exactly reverse order on the page every insert to Tmp2 means the slot array on the page needs to be rewritten with all previous entries moved up to make room for the new arrival.