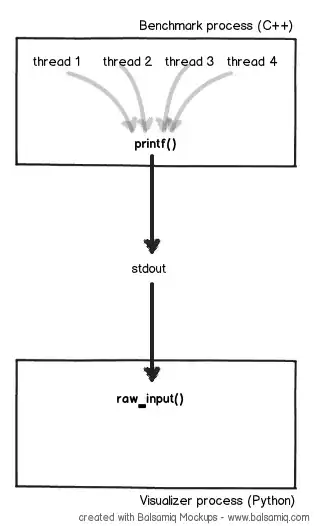

I'm working on a benchmark application that uses a user-defined number of threads to do the processing. I'm also working on a visualizer application for the benchmark results.

The benchmark itself is written in C++ (and uses pthreads for the threading) while the visualizer is written in Python.

Right now, I make the two talk by piping the benchmark's stdout into the visualizer. This has the advantage that I can use a tool like netcat to run the benchmark on one machine and the visualizer on another.

A bit about the benchmark:

- It is very CPU-bound.

- Each thread writes important data (i.e. data that I need for the visualizer) every few tens of milliseconds.

- Each datum printed is a line of 5 to 20 characters.

- As stated previously, the number of threads is highly variable (can be 1, 2, 40, etc.)

- While it is important that the data isn't mangled (e.g. one thread preempting another in the middle of a printf/cout, so that its output is interleaved with the other thread's), the order in which the writes happen doesn't matter much.
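For the "not mangled, but order doesn't matter" requirement, what usually counts is that each line reaches the stream in a single call. Below is a minimal sketch, assuming a hypothetical record layout of `<thread id> <value>\n` and a hypothetical helper `emit_record`: the line is formatted into a stack buffer and passed to `fwrite` once. POSIX requires stdio functions to lock the `FILE` internally for each call, so concurrent calls can reorder whole lines but should not interleave their bytes. Chaining several `std::cout <<` calls per line gives no such guarantee, because each `<<` is a separate call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

// Hypothetical record layout: "<thread id> <value>\n", e.g. "7 1234\n".
constexpr std::size_t kMaxRecord = 64;

// Formats the whole record into a stack buffer, then hands it to stdio in a
// single fwrite() call. POSIX stdio locks the FILE for the duration of each
// call, so concurrent emit_record() calls may reorder whole records but
// should not interleave bytes within one record.
bool emit_record(std::FILE* out, int thread_id, long value) {
    assert(out != nullptr);
    char buf[kMaxRecord];
    const int n = std::snprintf(buf, sizeof buf, "%d %ld\n", thread_id, value);
    if (n < 0 || static_cast<std::size_t>(n) >= sizeof buf) {
        return false;  // encoding error, or the record would be truncated
    }
    const auto len = static_cast<std::size_t>(n);
    return std::fwrite(buf, 1, len, out) == len;
}
```

Each worker would call something like `emit_record(stdout, id, value);`. A single `printf` per line relies on the same per-call locking.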

Example regarding the last point:

```
// Thread 1 prints "I'm one\n" at the 3-second mark
// Thread 2 prints "I'm two\n" at the 4-second mark

// This is fine:
I'm two
I'm one

// This is not:
I'm I'm one
two
```

In the benchmark, I switched from std::cout to printf because it is closer to a raw write(2), in order to minimize the chance of one thread's output being interleaved with another's.
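If you want to go all the way down to write(2), one property worth knowing is that POSIX guarantees a write of at most `PIPE_BUF` bytes (at least 512; 4096 on Linux) to a pipe or FIFO is never interleaved with data from other writers. With lines of 5 to 20 characters, one `write` per line should therefore stay intact as long as stdout is a pipe, as in `benchmark | visualizer` or `benchmark | nc ...`. A sketch under those assumptions; `write_record` and the record format are hypothetical:

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdio>
#include <limits.h>   // PIPE_BUF
#include <unistd.h>   // write(2)

// Hypothetical record layout: "<thread id> <value>\n".
constexpr std::size_t kMaxRecord = 64;
static_assert(kMaxRecord <= PIPE_BUF, "a record must fit in one atomic pipe write");

// Emits one record with a single write(2). POSIX guarantees that a write of
// at most PIPE_BUF bytes to a pipe or FIFO is not interleaved with data from
// other writers. That guarantee does NOT cover terminals or sockets.
bool write_record(int fd, int thread_id, long value) {
    assert(fd >= 0);
    char buf[kMaxRecord];
    const int n = std::snprintf(buf, sizeof buf, "%d %ld\n", thread_id, value);
    if (n < 0 || static_cast<std::size_t>(n) >= sizeof buf) {
        return false;  // encoding error, or the record would be truncated
    }
    ssize_t written;
    do {
        written = ::write(fd, buf, static_cast<std::size_t>(n));
    } while (written < 0 && errno == EINTR);  // retry if interrupted by a signal
    return written == n;
}
```

Avoid mixing this with `printf` on the same descriptor: stdio flushes its buffer at points that need not fall on line boundaries, so a stdio line could be split around a direct write.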

I'm worried that writing to stdout from multiple threads will become a bottleneck as the number of threads increases. It is quite important that the output-for-visualization part of the benchmark is extremely light on resources, so that it doesn't skew the results.
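On the bottleneck concern: when stdout is a pipe, stdio normally buffers it fully, so with `printf` the per-line cost is mostly contention on the single `FILE` lock shared by all threads; with one `write(2)` per line it is a system call per line instead. One way to reduce both, offered as an untested assumption rather than a measured fix, is to give each thread its own buffer and flush it with one `write` when it fills. Capping the buffer at `PIPE_BUF` keeps each flush atomic on a pipe, so batches from different threads still cannot interleave mid-line. `RecordBatch` and the record layout are hypothetical.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <limits.h>   // PIPE_BUF
#include <unistd.h>   // write(2)

// Hypothetical per-thread batcher. Each worker thread owns one instance, so
// add() takes no lock and makes no system call until the buffer is full.
// The buffer is capped at PIPE_BUF bytes, so each flush is one atomic
// write(2) when fd is a pipe, and batches from different threads cannot
// interleave mid-record.
class RecordBatch {
public:
    explicit RecordBatch(int fd) : fd_(fd) { assert(fd >= 0); }
    ~RecordBatch() { flush(); }
    RecordBatch(const RecordBatch&) = delete;
    RecordBatch& operator=(const RecordBatch&) = delete;

    // Appends "<thread id> <value>\n" (hypothetical layout), flushing first
    // if the record would not fit in the remaining space.
    bool add(int thread_id, long value) {
        char rec[64];
        const int n = std::snprintf(rec, sizeof rec, "%d %ld\n", thread_id, value);
        if (n < 0 || static_cast<std::size_t>(n) >= sizeof rec) return false;
        const auto len = static_cast<std::size_t>(n);
        if (used_ + len > sizeof buf_ && !flush()) return false;
        std::memcpy(buf_ + used_, rec, len);
        used_ += len;
        return true;
    }

    // Writes all buffered records with a single write(2). The buffer is
    // cleared even on failure, so a failed batch is dropped, not retried.
    bool flush() {
        if (used_ == 0) return true;
        ssize_t written;
        do {
            written = ::write(fd_, buf_, used_);
        } while (written < 0 && errno == EINTR);
        const bool ok = written == static_cast<ssize_t>(used_);
        used_ = 0;
        return ok;
    }

private:
    int fd_;
    char buf_[PIPE_BUF];
    std::size_t used_ = 0;
};
```

Each worker would own one instance, e.g. `RecordBatch batch(STDOUT_FILENO);`, and call `batch.add(id, value)`. The trade-off is latency: at 5 to 20 bytes per line and one line every few tens of milliseconds, a 4096-byte buffer could take many seconds to fill, so a live visualizer would probably want an extra `flush()` every N records or at a fixed interval.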

I'm looking for ideas on an efficient way to make my two applications talk while affecting the benchmark's performance as little as possible. Any ideas? Has anyone tackled a problem like this before? Are there smarter or cleaner solutions?