Related: R: Marking slope changes in LOESS curve using ggplot2

That question is about finding the min/max of y (where slope = 0); I'd like to find the min/max slope.

For background, I'm trying out various modelling techniques and thought I might use slope to gauge the best models produced by different random seeds when iterating through neural network results.

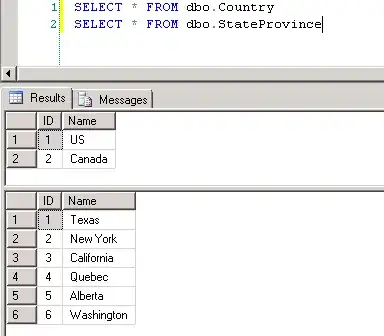

Get the data:

    nn <- read.csv("http://pastebin.com/raw.php?i=6SSCb3QR", header = TRUE)
    rbf <- read.csv("http://pastebin.com/raw.php?i=hfmY1g46", header = TRUE)

As an example, here are the results of a trained neural network for my data:

    library(ggplot2)
    ggplot(nn, aes(x = x, y = y, colour = factor(group))) +
      geom_point() + stat_smooth(method = "loess", se = FALSE)

Similarly, here's one rbf model:

    ggplot(rbf, aes(x = x, y = y, colour = factor(group))) +
      geom_point() + stat_smooth(method = "loess", se = FALSE)

The RBF model fits the data better, and agrees better with background knowledge of the variables. I thought of trying to calculate the min/max slope of the fitted line in order to prune out NNs with steep cliffs vs. more gentle curves. Identifying crossing lines would be another way to prune, but that's a different question.

Thanks for any suggestions.

Note: I used ggplot2 here and tagged the question accordingly, but that doesn't mean it couldn't be accomplished with some other function. I just wanted to visually illustrate why I'm trying to do this. I suppose a loop could do this with (y1 - y0)/(x1 - x0) on successive points, but perhaps there's a better way?
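To make the finite-difference idea concrete, here is a minimal sketch without an explicit loop. It fits `stats::loess` directly (the function `stat_smooth(method = "loess")` relies on), predicts on a fine grid, and differences the fitted values per group. The data frame `df`, the helper `slope_range`, and the grid size `n = 200` are hypothetical names and choices for illustration; `df` is synthetic and only mimics the `x`, `y`, `group` columns of `nn` and `rbf`. The loess defaults here may not match exactly what ggplot2 draws.

```r
# Synthetic stand-in for `nn` / `rbf` (assumption: columns x, y, group).
set.seed(1)
df <- data.frame(
  x     = rep(seq(0, 10, length.out = 50), times = 2),
  group = rep(c(1, 2), each = 50)
)
# Group 1 rises with slope ~2, group 2 falls with slope ~-0.5, plus noise.
df$y <- ifelse(df$group == 1, 2 * df$x, -0.5 * df$x) +
  rnorm(nrow(df), sd = 0.1)

# Hypothetical helper: min/max finite-difference slope of a loess fit.
slope_range <- function(d, n = 200) {
  fit  <- loess(y ~ x, data = d)                    # default span and degree
  grid <- seq(min(d$x), max(d$x), length.out = n)   # stay inside the data range
  yhat <- predict(fit, newdata = data.frame(x = grid))
  slopes <- diff(yhat) / diff(grid)                 # (y1 - y0) / (x1 - x0), vectorised
  data.frame(min_slope = min(slopes, na.rm = TRUE),
             max_slope = max(slopes, na.rm = TRUE))
}

# One row per group, row names taken from the group labels.
res <- do.call(rbind, lapply(split(df, df$group), slope_range))
res
```

With the real data, `slope_range` would be applied to `nn` and `rbf` in the same way, and models whose `max_slope` or `min_slope` exceed some chosen threshold could be flagged as having "cliffs". The threshold itself would have to come from domain knowledge.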