I find 7-Zip great and I would like to use it in .NET applications. I have a 10 MB file (a.001), and 7-Zip takes:

2 seconds to encode it.

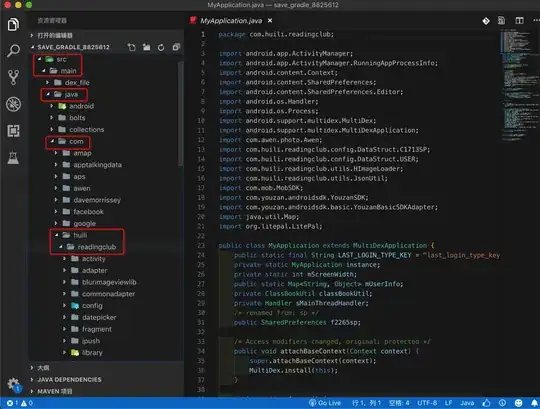

Now it would be nice if I could do the same thing in C#. I downloaded the LZMA SDK C# source code from http://www.7-zip.org/sdk.html and basically copied the CS directory into a console application in Visual Studio.

Then I compiled, and everything compiled smoothly. I placed the file a.001, which is 10 MB in size, in the output directory. In the Main method that came with the source code I added:

    [STAThread]
    static int Main(string[] args)
    {
        // e stands for encode
        args = "e a.001 output.7z".Split(' '); // added this line for debug
        try
        {
            return Main2(args);
        }
        catch (Exception e)
        {
            Console.WriteLine("{0} Caught exception #1.", e);
            // throw e;
            return 1;
        }
    }

When I run the console application it works fine and I get the file output.7z in the working directory. The problem is that it takes so long: about 15 seconds! I have also tried the approach from https://stackoverflow.com/a/8775927/637142 and it also takes very long. Why is it about 7 times slower than the actual program?

Also

Even if I set 7-Zip to use only one thread, it still takes much less time (3 seconds vs. 15).

(Edit) Another Possibility

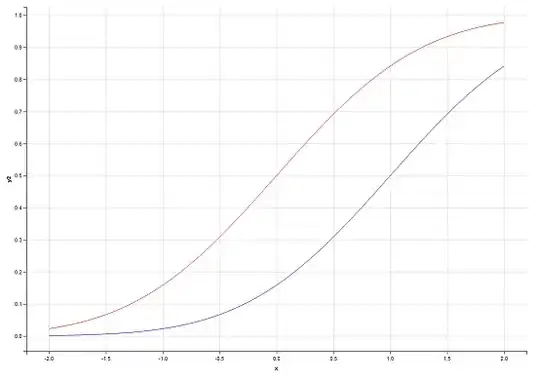

Could it be because C# is slower than assembly or C? I noticed that the algorithm does a lot of heavy operations. For example, compare these two blocks of code. They both do the same thing:

C

    #include <stdio.h>
    #include <time.h>

    int main(void)
    {
        time_t now;
        int i, j, k, x;
        long counter;

        counter = 0;
        now = time(NULL);

        /* LOOP */
        for (x = 0; x < 10; x++)
        {
            counter = -1234567890 + x + 2;

            for (j = 0; j < 10000; j++)
                for (i = 0; i < 1000; i++)
                    for (k = 0; k < 1000; k++)
                    {
                        if (counter > 10000)
                            counter = counter - 9999;
                        else
                            counter = counter + 1;
                    }

            /* time_t has no portable printf format, so cast to long */
            printf(" %ld \n", (long)(time(NULL) - now)); // display elapsed time
        }

        printf("counter = %ld\n\n", counter); // display result of counter
        printf("Elapsed time = %ld seconds ", (long)(time(NULL) - now));

        getchar(); // wait for a key press; gets("Wait") would write into a string literal
        return 0;
    }

Output

C#

    static void Main(string[] args)
    {
        DateTime now;
        int i, j, k, x;
        long counter;

        counter = 0;
        now = DateTime.Now;

        /* LOOP */
        for (x = 0; x < 10; x++)
        {
            counter = -1234567890 + x + 2;

            for (j = 0; j < 10000; j++)
                for (i = 0; i < 1000; i++)
                    for (k = 0; k < 1000; k++)
                    {
                        if (counter > 10000)
                            counter = counter - 9999;
                        else
                            counter = counter + 1;
                    }

            // TotalSeconds, not Seconds: Seconds is only the 0-59 component
            Console.WriteLine(((int)(DateTime.Now - now).TotalSeconds).ToString());
        }

        Console.Write("counter = {0} \n", counter.ToString());
        Console.Write("Elapsed time = {0} seconds", (int)(DateTime.Now - now).TotalSeconds);
        Console.Read();
    }

Output

Note how much slower C# was. Both programs were run outside Visual Studio in release mode. Maybe that is the reason why compression takes so much longer in .NET than in C++.

Also, I got the same results: C# was 3 times slower, just like in the example I just showed!
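One thing I am not sure about is whether the two loops really do the same amount of work. In C#, `long` is always 64 bits, while in C its width depends on the compiler (it is 32 bits with Microsoft's compiler on Windows, for example). Below is a minimal sketch of the C loop with an explicit 64-bit counter, so that both versions would use the same integer width. The function names `run_counter_loop` and `time_counter_loop` are my own, made up for this illustration, and the timing relies on `clock()` from `<time.h>`:

```c
#include <stdint.h>
#include <time.h>

/* Same loop body as the benchmark, but with an explicit 64-bit counter
   so that it matches the width of C#'s long on every compiler.
   (Hypothetical helper, not part of the original benchmark.) */
int64_t run_counter_loop(int64_t start, int64_t iterations)
{
    int64_t counter = start;
    int64_t n;

    for (n = 0; n < iterations; n++)
    {
        if (counter > 10000)
            counter = counter - 9999;
        else
            counter = counter + 1;
    }
    return counter;
}

/* Runs the loop once, stores the final counter in *result and returns
   the time reported by clock() for that run, in seconds.
   (Hypothetical helper, not part of the original benchmark.) */
double time_counter_loop(int64_t start, int64_t iterations, int64_t *result)
{
    clock_t begin = clock();

    *result = run_counter_loop(start, iterations);
    return (double)(clock() - begin) / CLOCKS_PER_SEC;
}
```

Calling `time_counter_loop(-1234567890 + x + 2, INT64_C(10000000000), &result)` for each `x` from 0 to 9 would correspond to one pass of the outer loop in the benchmark above. I have not measured how much this changes the comparison.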

Conclusion

I cannot figure out what is causing the problem. I guess I will use 7z.dll and invoke the necessary methods from C#. A library that does that is available at http://sevenzipsharp.codeplex.com/, and that way I am using the same library that 7-Zip itself uses:

    // don't forget to add a reference to SevenZipSharp, located at the link I provided
    static void Main(string[] args)
    {
        // load the dll
        SevenZip.SevenZipCompressor.SetLibraryPath(@"C:\Program Files (x86)\7-Zip\7z.dll");

        SevenZip.SevenZipCompressor compress = new SevenZip.SevenZipCompressor();
        compress.CompressDirectory("MyFolderToArchive", "output.7z");
    }