I'm looking to copy a production PostgreSQL database to a development server. What's the quickest, easiest way to go about doing this?

13 Answers

You don't need to create an intermediate file. You can do

pg_dump -C -h localhost -U localuser dbname | psql -h remotehost -U remoteuser dbname

or

pg_dump -C -h remotehost -U remoteuser dbname | psql -h localhost -U localuser dbname

using psql or pg_dump to connect to a remote host.

With a big database or a slow connection, dumping a file and transfering the file compressed may be faster.

As Kornel said there is no need to dump to a intermediate file, if you want to work compressed you can use a compressed tunnel

pg_dump -C dbname | bzip2 | ssh remoteuser@remotehost "bunzip2 | psql dbname"

or

pg_dump -C dbname | ssh -C remoteuser@remotehost "psql dbname"

but this solution also requires to get a session in both ends.

Note: pg_dump is for backing up and psql is for restoring. So, the first command in this answer is to copy from local to remote and the second one is from remote to local. More -> https://www.postgresql.org/docs/9.6/app-pgdump.html

- 4,182

- 1

- 30

- 45

- 14,563

- 2

- 21

- 12

-

35There's no need for intermediate files - you may use compressed SSH tunnel or simply pipe: pg_dump | bzip2 | ssh "bunzip2 | pg_restore" – Kornel Aug 06 '09 at 12:46

-

5If you use bzip2, turn off ssh compression to speed up the transfer! – lzap Jun 19 '12 at 09:34

-

1The command ssh already has the compression disabled by default. In the example when using ssh compression, I am not using bzip2 and when using bzip2 I am not using ssh compression. – Ferran Jun 19 '12 at 16:07

-

8How can I work compressed if I'm *pulling* data from production down into development? I have set up an SSH connection from development into production. So would it be `ssh remoteuser@remotehost "pg_dump -C dbname | bzip2" | bunzip2 | psql dbname`? – Jeromy French Feb 26 '13 at 02:51

-

2I would expect that you should be able to copy a remote database with name x to a local database with name y, but @Ferran's solution does not work for this... It looks to me like porneL's solution just leaves the bzip2 files on the server, so that's not a one step process. This being the case, I guess I'll drop database y, use the "or" part of Ferran's solution which restores x, then rename the database to y. – Darin Peterson Mar 04 '14 at 16:28

-

3This is what I did: (1) pg_dump -C -h remotehost -U remoteuser x | psql -h localhost -U localuser (2) dropdb y (3) psql -U postgres -c 'ALTER DATABASE "x" RENAME TO "y"' – Darin Peterson Mar 04 '14 at 17:22

-

2I used the command in the answer to copy a database from local host to remotehost, but it always tries to create a database with the name of database on localhost side, no matter what name I give in the second part of command. I had to rename it before copying it to the remote host. Any idea how to do it in the same command? – Dark Light Jul 08 '14 at 18:35

-

1The -C option of pg_dump adds a commend to create the database at the begining of the dump. You can omit this option and run "psql newdbname" where "newdbname" is a database created on the receiving host. – Ferran Jul 10 '14 at 15:44

-

1We've found that you get a considerably faster transfer if you use `netcat` to transmit the file, rather than `ssh`. – ocharles Jan 07 '15 at 22:16

-

Note for RDS users: I needed to put my hostname in quotations, without any HTTP/HTTPS. I.e. pg_dump -C -h "host.lkjlkjl.us-east-1.rds.amazonaws.com" -U remoteuser dbname | psql -h localhost -U localuser dbname – joel.software Feb 17 '15 at 21:01

-

1In case you have not default PORTS you can add "-p" option (default is "-p 5432") for both local and remote host. – Fil May 06 '15 at 17:06

-

2@JeromyFrench for the future readers, the command `ssh -i

postgres@ – rogueleaderr Dec 08 '15 at 22:30-C "pg_dump -C " | psql ` worked for me to pull data from a remote host. -

For the case you want to copy to a compressed file on a remote machine and not import directly or decompress directly, use (as posgres user): pg_dump -C dbname | bzip2 | ssh username@remotehost "tee > dumpfile.bz2" non-postgres users should use: pg_dump -h localhost -U dbusername dbname | bzip2 | ssh username@remotehost "tee > dumpfile.bz2" – anneb May 31 '16 at 09:47

-

Permission denied (publickey,password). i get this error , even after allowing ip in pg_hba.config in both remote and local but still get this error – The pyramid Dec 09 '18 at 23:26

-

2Note, you can add `-v ON_ERROR_STOP=1` at the end of your psql line if you'd like to stop and check/deal with every error you run into, e.g. `pg_dump -C -h localhost -U sarahpopov somni | psql -h 165.227.36.129 -U somni somni -v ON_ERROR_STOP=1` – spops Mar 14 '19 at 03:52

-

Note also, that first command is for copying from the LOCAL to remote. – user2501323 Apr 26 '19 at 12:32

-

10You cannot use this comand if both servers ask for a password. They will ask simultaneously and the entered password will always go to the wrong instance, as per Murphy's law (just confirmed that twice). – ygoe Oct 20 '19 at 20:21

-

What if I want to use this to copy the database into the same machine with a different name? -C will create a database with the same name, instead I used without -C and created the database first, it works but it is a two line solution :P – Obay Abd-Algader Nov 17 '20 at 07:46

-

should I first create the database on the new server side ? I get an error that it is already exist. – Sion C Apr 21 '21 at 12:22

-

2Is it possible to multi thread this? – CodeGuru Oct 02 '21 at 05:17

-

Anybody else run into having to do `stty sane` after doing this pipe operation? When I run it, I get two overlapping password prompts...the first password typed in is hidden, then the second one shows in cleartext, and the tty is broken (characters don't show up) when the command finishes. – Brian A. Henning Feb 22 '23 at 19:27

-

1Any way to see the progress? Like a progress bar or something. – Joe Eifert Mar 28 '23 at 15:31

-

Copy will be dirty if a role is missing. It does not stop on error by default. – Grim Jun 07 '23 at 07:23

-

Passing the db-name is nonsense because the dump is used having the -C for "create database" statements. – Grim Jun 07 '23 at 08:00

pg_dump the_db_name > the_backup.sql

Then copy the backup to your development server, restore with:

psql the_new_dev_db < the_backup.sql

- 33,530

- 16

- 61

- 61

-

5Some one told me this can be problematic - permissions problems causing either the dump or restore to die when it hits a trigger? – Robin Barnes Aug 06 '09 at 09:26

-

27@rmbarnes: If there are problems - they have to be fixed. Without detailed knowledge what this "Some one" did - nobody can confirm nor dismiss this claim. – Aug 06 '09 at 10:06

-

6Use the --no-owner flag with pg_dump. This skips the problem and the first edit of this post used it -- but then I thought you might need more precise fidelity to the original database. – unmounted Aug 06 '09 at 17:05

-

5For me, above approach worked in following way: pg_dump -C -h host -U username db_name > /any_directory/dump_schema_and_data_file .And for restoring from file: psql -h host -U username db_name < dump_schema_and_data_file – Ali Raza Bhayani Mar 26 '14 at 09:38

-

That saved me a LOT of aggravation. I used Google drive to move the file between machines. Since I already had the database on the new machine (But blank) I got a LOT of duplicate key errors. However, it is a dev environment and they didn't hurt anything. – Chris Mendla Apr 04 '19 at 03:47

Use pg_dump, and later psql or pg_restore - depending whether you choose -Fp or -Fc options to pg_dump.

Example of usage:

ssh production

pg_dump -C -Fp -f dump.sql -U postgres some_database_name

scp dump.sql development:

rm dump.sql

ssh development

psql -U postgres -f dump.sql

-

-

`-F, --format=c|d|t|p` output file format (custom, directory, tar, plain text (default)) – p13rr0m Dec 02 '21 at 14:54

-

The `-Fc` produce compressed and smaller file. It can be restored in parallel with `-j ` option e.g. `pg_restore -j 8 -d dbname dump.sql`. The `-Fd` “directory” format additionally supports parallel dumps. – Sergey Ponomarev Jul 21 '23 at 23:37

If you are looking to migrate between versions (eg you updated postgres and have 9.1 running on localhost:5432 and 9.3 running on localhost:5434) you can run:

pg_dumpall -p 5432 -U myuser91 | psql -U myuser94 -d postgres -p 5434

Check out the migration docs.

- 6,894

- 8

- 43

- 62

-

I'm asked for the (myuser91/postgres)-password multiple times, is there a way so that I need to enter the password only once? – Martin Weber Dec 15 '17 at 10:50

-

@MartinWeber Create a ,pgpass file as per this document https://www.postgresql.org/docs/9.4/static/libpq-pgpass.html – Scott Warren Dec 21 '17 at 04:06

-

-

If they are on different servers, you can use -h to specify the hosts. – Haroldo_OK May 14 '20 at 18:36

pg_basebackup seems to be the better way of doing this now, especially for large databases.

You can copy a database from a server with the same or older major version. Or more precisely:

pg_basebackupworks with servers of the same or an older major version, down to 9.1. However, WAL streaming mode (-X stream) only works with server version 9.3 and later, and tar format mode (--format=tar) of the current version only works with server version 9.5 or later.

For that you need on the source server:

listen_addresses = '*'to be able to connect from the target server. Make sure port 5432 is open for that matter.- At least 1 available replication connection:

max_wal_senders = 1(-X fetch),2for-X stream(the default in case of PostgreSQL 12), or more. wal_level = replicaor higher to be able to setmax_wal_senders > 0.host replication postgres DST_IP/32 trustinpg_hba.conf. This grants access to thepgcluster to anyone from theDST_IPmachine. You might want to resort to a more secure option.

Changes 1, 2, 3 require server restart, change 4 requires reload.

On the target server:

# systemctl stop postgresql@VERSION-NAME

postgres$ pg_basebackup -h SRC_IP -U postgres -D VERSION/NAME --progress

# systemctl start postgresql@VERSION-NAME

- 16,722

- 15

- 114

- 161

- 457

- 4

- 8

-

13

-

7

-

Chances are small that you would use different database version for development and production. Last time I had some unpleasant conversation with one of my teammates as she was trying to submit an issue that some code is not working with PG 9.6 while we had used 9.5 in production at that time. Base backup is much faster. Then pg_upgrade is the way to go if needed. – Zorg Jan 18 '18 at 04:25

-

3Chances are that you want to migrate to a newer version, and don't want to stop PostgreSQL. – x-yuri Oct 05 '18 at 09:49

-

2Chances are that whenever you upgrade your database, you upgrade it on dev and staging before you do it on production. – andrew lorien Dec 06 '18 at 06:07

Accepted answer is correct, but if you want to avoid entering the password interactively, you can use this:

PGPASSWORD={{export_db_password}} pg_dump --create -h {{export_db_host}} -U {{export_db_user}} {{export_db_name}} | PGPASSWORD={{import_db_password}} psql -h {{import_db_host}} -U {{import_db_user}} {{import_db_name}}

- 943

- 11

- 22

-

2

-

-

If u use an ip public of postgres to connect, you have to add options -p to specify the port of host e.g: -h {{export_db_host}} -p {{export_db_port}} – Bona Ws Jan 07 '22 at 19:41

-

1

-

can I use this syntax in powershell and do I need to put password in quotation marks? @zoran – Learner Jan 19 '22 at 02:52

Run this command with database name, you want to backup, to take dump of DB.

pg_dump -U {user-name} {source_db} -f {dumpfilename.sql}

eg. pg_dump -U postgres mydbname -f mydbnamedump.sql

Now scp this dump file to remote machine where you want to copy DB.

eg. scp mydbnamedump.sql user01@remotemachineip:~/some/folder/

On remote machine run following command in ~/some/folder to restore the DB.

psql -U {user-name} -d {desintation_db}-f {dumpfilename.sql}

eg. psql -U postgres -d mynewdb -f mydbnamedump.sql

- 1,575

- 1

- 12

- 14

-

how can you backup if you are unable to connect to psql? is there a file system way to achieve this? ex: if your server config/exe got infected with a virus? – ullfindsmit Sep 06 '21 at 02:55

Dump your database : pg_dump database_name_name > backup.sql

Import your database back: psql db_name < backup.sql

- 309

- 4

- 4

-

And this works when you are remote to the source and destination database servers? For example, I'm at my workstation and coping a database between 2 pg server running in docker containers on different servers ( not my workstation ). – majorgear Mar 12 '23 at 23:49

I struggled quite a lot and eventually the method that allowed me to make it work with Rails 4 was:

on your old server

sudo su - postgres

pg_dump -c --inserts old_db_name > dump.sql

I had to use the postgres linux user to create the dump. also i had to use -c to force the creation of the database on the new server. --inserts tells it to use the INSERT() syntax which otherwise would not work for me :(

then, on the new server, simpy:

sudo su - postgres

psql new_database_name < dump.sql

to transfer the dump.sql file between server I simply used the "cat" to print the content and than "nano" to recreate it copypasting the content.

Also, the ROLE i was using on the two database was different so i had to find-replace all the owner name in the dump.

- 4,171

- 3

- 30

- 36

Let me share a Linux shell script to copy your table data from one server to another PostgreSQL server.

Reference taken from this blog:

Linux Bash Shell Script for data migration between PostgreSQL Servers:

#!/bin/bash

psql \

-X \

-U user_name \

-h host_name1 \

-d database_name \

-c "\\copy tbl_Students to stdout" \

| \

psql \

-X \

-U user_name \

-h host_name2 \

-d database_name \

-c "\\copy tbl_Students from stdin"

I am just migrating the data; please create a blank table at your destination/second database server.

This is a utility script. Further, you can modify the script for generic use something like by adding parameters for host_name, database_name, table_name and others

- 17,828

- 6

- 117

- 94

- 7,103

- 3

- 45

- 43

Here is an example using pg_basebackup

I chose to go this route because it backs up the entire database cluster (users, databases, etc.).

I'm posting this as a solution on here because it details every step I had to take, feel free to add recommendations or improvements after reading other answers on here and doing some more research.

For Postgres 12 and Ubuntu 18.04 I had to do these actions:

On the server that is currently running the database:

Update pg_hba.conf, for me located at /etc/postgresql/12/main/pg_hba.conf

Add the following line (substitute 192.168.0.100 with the IP address of the server you want to copy the database to).

host replication postgres 192.168.0.100/32 trust

Update postgresql.conf, for me located at /etc/postgresql/12/main/postgresql.conf. Add the following line:

listen_addresses = '*'

Restart postgres:

sudo service postgresql restart

On the host you want to copy the database cluster to:

sudo service postgresql stop

sudo su root

rm -rf /var/lib/postgresql/12/main/*

exit

sudo -u postgres pg_basebackup -h 192.168.0.101 -U postgres -D /var/lib/postgresql/12/main/

sudo service postgresql start

Big picture - stop the service, delete everything in the data directory (mine is in /var/lib/postgreql/12). The permissions on this directory are drwx------ with user and group postgres. I could only do this as root, not even with sudo -u postgres. I'm unsure why. Ensure you are doing this on the new server you want to copy the database to! You are deleting the entire database cluster.

Make sure to change the IP address from 192.168.0.101 to the IP address you are copying the database from. Copy the data from the original server with pg_basebackup. Start the service.

Update pg_hba.conf and postgresql.conf to match the original server configuration - before you made any changes adding the replication line and the listen_addresses line (in my care I had to add the ability to log-in locally via md5 to pg_hba.conf).

Note there are considerations for max_wal_senders and wal_level that can be found in the documentation. I did not have to do anything with this.

- 5,099

- 7

- 43

- 81

-

1it is possible run some as ``pg_dumpall -C -h localhost -U postgres | psql -h second.server.com -U postgres`` and then OVERWRITE the OLD databases, OLD schemas, OLD roles, OLD any... in host "second.server.com" ? – VyR Mar 30 '21 at 23:03

-

Will this work with managed postgresql databases like azure or digital ocean? – holms May 06 '22 at 23:38

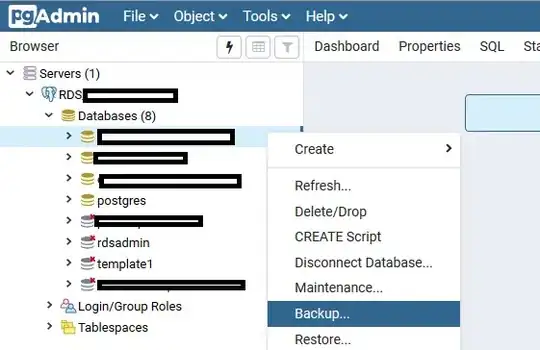

If you are more comfortable with a GUI, you can use the pgAdmin software.

- Connect to your source and destination servers

- Right-click on the source db > backup

- Right-click on the destination server > create > database. Use the same properties as the source db (you can see the properties of the source db by right-click > properties)

- Right-click on the created db > restore.

- 1,476

- 2

- 20

- 33

-

1

-

no pgadmin available in any cloud providers nowadays, it's very legacy approach – holms May 06 '22 at 23:36

-

1not really "legacy", it's a database client. You can always use SSH tunneling to connect to your database. – YohjiNakamoto Jul 16 '22 at 12:55

-

1@holms the example shown in the photo is connected to AWS-RDS. To connect to any DB server you just need to make sure the connection is allowed from the machine that pgAdmin is installed to the DB server that you want to connect. – LoMaPh Jul 16 '22 at 17:43

This is the easiest solution:

pg_dump --dbname=postgresql://[user]:[password]@[host]:[port]/[database] | psql --dbname=postgresql://[user]:[password]@[host]:[port]/[database]

The first part, pg_dump, is downloading the database you want to copy.

The second part, psql, is uploading to that address.

An example:

pg_dump --dbname=postgresql://postgres:password@originaldatabase.cluster-awsstring.eu-west-1.rds.amazonaws.com:5432/originaldatabasename | psql --dbname=postgresql://postgres:password@newdatabase.cluster-awsstring.eu-west-1.rds.amazonaws.com:5432/newdatabasename

I found the previous examples didn't spell out how to construct the --dbname string or in the docs. Ref: What is the format for the PostgreSQL connection string / URL?

- 13

- 3