I have Microsoft Visual Studio 2010 on 64-bit Windows 7. (In the project properties, "Character set" is set to "Not set"; however, every setting leads to the same output.)

Source code:

    using namespace std;

    char const charTest[] = "árvíztűrő tükörfúrógép ÁRVÍZTŰRŐ TÜKÖRFÚRÓGÉP\n";

    cout << charTest;
    printf(charTest);

    if(set_codepage()) // SetConsoleOutputCP(CP_UTF8); // *1
        cerr << "DEBUG: set_codepage(): OK" << endl;
    else
        cerr << "DEBUG: set_codepage(): FAIL" << endl;

    cout << charTest;
    printf(charTest);

*1: Including windows.h in main.cpp messes things up, so I include it from a separate .cpp file.
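For reference, a minimal sketch of what such a separate translation unit could look like, assuming set_codepage() does nothing more than wrap SetConsoleOutputCP(CP_UTF8) as the comment in the code suggests. The file name and the non-Windows fallback branch are assumptions added only so the snippet compiles elsewhere.

```cpp
// codepage.cpp (hypothetical file name): keeps <windows.h> out of main.cpp.
#ifdef _WIN32
#include <windows.h>
#endif

// Returns true if the console output code page was switched to UTF-8.
bool set_codepage()
{
#ifdef _WIN32
    // SetConsoleOutputCP returns nonzero on success.
    return SetConsoleOutputCP(CP_UTF8) != 0;
#else
    // Assumption: outside Windows there is no console code page to switch.
    return true;
#endif
}
```

main.cpp then only needs the declaration `bool set_codepage();` and never sees the Windows headers.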

The compiled binary contains the string as a correct UTF-8 byte sequence. If I set the console to UTF-8 with chcp 65001 and run type main.cpp, the string displays correctly.
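One way to double-check the bytes at run time, rather than by inspecting the binary, is to dump the string as hex. This is only a sketch: hexBytes is a hypothetical helper, not part of the program above, and it assumes the string is NUL-terminated.

```cpp
#include <string>

// Formats every byte of a NUL-terminated string as two lowercase hex digits,
// separated by single spaces.
std::string hexBytes(const char* s)
{
    static const char digits[] = "0123456789abcdef";
    std::string out;
    // Walk the bytes as unsigned values so bytes >= 0x80 are not sign-extended.
    for (const unsigned char* p = reinterpret_cast<const unsigned char*>(s); *p; ++p) {
        if (!out.empty())
            out += ' ';
        out += digits[*p >> 4];   // high nibble
        out += digits[*p & 0x0F]; // low nibble
    }
    return out;
}
```

For a UTF-8 encoded "á" (the bytes 0xC3 0xA1), hexBytes("\xC3\xA1") gives "c3 a1". Calling it on charTest should start with those same two bytes if the literal really is stored as UTF-8.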

Test (console set to use Lucida Console font):

    D:\dev\user\geometry\Debug>chcp
    Active code page: 852

    D:\dev\user\geometry\Debug>listProcessing.exe
    ├írv├şzt┼▒r┼Ĺ t├╝k├Ârf├║r├│g├ęp ├üRV├ŹZT┼░R┼É T├ťK├ľRF├ÜR├ôG├ëP
    ├írv├şzt┼▒r┼Ĺ t├╝k├Ârf├║r├│g├ęp ├üRV├ŹZT┼░R┼É T├ťK├ľRF├ÜR├ôG├ëP
    DEBUG: set_codepage(): OK
    ��rv��zt��r�� t��k��rf��r��g��p ��RV��ZT��R�� T��K��RF��R��G��P
    árvíztűrő tükörfúrógép ÁRVÍZTŰRŐ TÜKÖRFÚRÓGÉP

What is the explanation for this? After the code page is switched, printf displays the string correctly but cout does not. Can I somehow make cout behave like printf?

ATTACHMENT

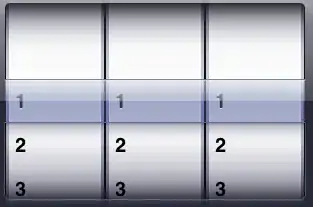

Many people say that the Windows console does not support UTF-8 characters at all. I'm Hungarian and live in Hungary; my Windows is set to English (except for date formats, which are set to Hungarian), and Cyrillic letters are still displayed correctly alongside Hungarian letters:

(My default console codepage is CP852)