When you read MSDN on System.Single:

Single complies with the IEC 60559:1989 (IEEE 754) standard for binary floating-point arithmetic.

and the C# Language Specification:

The float and double types are represented using the 32-bit single-precision and 64-bit double-precision IEEE 754 formats [...]

and later:

The product is computed according to the rules of IEEE 754 arithmetic.

you easily get the impression that the float type and its multiplication comply with IEEE 754.

It is a part of IEEE 754 that multiplication is well-defined. By that I mean that when you have two float instances, there exists one and only one float which is their "correct" product. It is not permissible that the product depends on some "state" or "set-up" of the system calculating it.
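To make "one and only one correct product" concrete, here is a minimal sketch of one way to obtain a reference value. It relies on the fact that the product of two 24-bit significands has at most 48 significant bits and therefore fits exactly in a double, so a single explicit narrowing to float gives the correctly rounded result. The names ReferenceProduct, Bits and Multiply are mine, and the sketch assumes the explicit (float) cast really does narrow to single precision.

```csharp
using System;

static class ReferenceProduct
{
    // Raw IEEE 754 bit pattern of a float. Going through a float parameter
    // and a byte array leaves no room for hidden extra precision.
    public static int Bits(float value)
    {
        return BitConverter.ToInt32(BitConverter.GetBytes(value), 0);
    }

    // The product of two floats is exact in a double (48 bits needed, 53 available),
    // so the explicit cast below is the only rounding step.
    // Assumption: the (float) cast narrows to single precision.
    public static float Multiply(float a, float b)
    {
        return (float)((double)a * (double)b);
    }

    static void Main()
    {
        float reference = Multiply(0.58f, 100f);

        // Compare bit patterns rather than using ==, so that the comparison
        // itself cannot be carried out at a higher precision.
        Console.WriteLine(Bits(reference) == Bits(58f));
    }
}
```

Comparing the bit patterns instead of the floats themselves is deliberate: it keeps the check independent of how the runtime chooses to evaluate float comparisons.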

Now, consider the following simple program:

    using System;

    static class Program
    {
        static void Main()
        {
            Console.WriteLine("Environment");
            Console.WriteLine(Environment.Is64BitOperatingSystem);
            Console.WriteLine(Environment.Is64BitProcess);
            bool isDebug = false;
    #if DEBUG
            isDebug = true;
    #endif
            Console.WriteLine(isDebug);
            Console.WriteLine();

            float a, b, product, whole;

            Console.WriteLine("case .58");
            a = 0.58f;
            b = 100f;
            product = a * b;
            whole = 58f;
            Console.WriteLine(whole == product);
            Console.WriteLine((a * b) == product);
            Console.WriteLine((float)(a * b) == product);
            Console.WriteLine((int)(a * b));
        }
    }

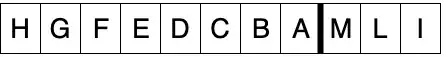

Appart from writing some info on the environment and compile configuration, the program just considers two floats (namely a and b) and their product. The last four write-lines are the interesting ones. Here's the output of running this on a 64-bit machine after compiling with Debug x86 (left), Release x86 (middle), and x64 (right):

We conclude that the result of simple float operations depends on the build configuration.

The first line after "case .58" is a simple check of equality of two floats. We expect it to be independent of build mode, but it's not. The next two lines we expect to be identical, because casting a float to a float should not change anything. But they are not. We also expect both of them to read "True", because we're comparing the product a*b to itself. The last line of the output we expect to be independent of build configuration, but it's not.

To figure out what the correct product is, we calculate manually. The binary representation of 0.58 (a) is:

0 . 1(001 0100 0111 1010 1110 0)(001 0100 0111 1010 1110 0)...

where the block in parentheses is the period which repeats forever. The single-precision representation of this number needs to be rounded to:

0 . 1(001 0100 0111 1010 1110 0)(001 (*)

where we have rounded (in this case round down) to the nearest representable Single. Now, the number "one hundred" (b) is:

110 0100 . (**)

in binary. Computing the full product of the numbers (*) and (**) gives:

11 1001 . 1111 1111 1111 1111 1110 0100

which rounded (in this case rounding up) to single-precision gives

11 1010 . 0000 0000 0000 0000 00

where we rounded up because the next bit was 1, not 0 (round to nearest). So we conclude that the result is 58f according to IEEE. This was not in any way given a priori; for example, 0.59f * 100f is less than 59f, and 0.60f * 100f is greater than 60f, according to IEEE.
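The hand calculation can be cross-checked without trusting any floating-point multiplication at all. The following is a minimal sketch that pulls the 24-bit significand out of the float, multiplies it by 100 as an integer, and rounds back to 24 bits (nearest, ties to even). The class and method names are made up for this illustration, and the helper only handles positive normal floats and positive integer factors, which is all that is needed here.

```csharp
using System;

static class ExactProductCheck
{
    // Raw IEEE 754 bit pattern of a float.
    public static int Bits(float value)
    {
        return BitConverter.ToInt32(BitConverter.GetBytes(value), 0);
    }

    // Multiplies a positive normal float by a positive integer using only integer
    // arithmetic, rounds to nearest (ties to even), and returns the bit pattern
    // of the resulting float. Overflow and subnormal results are not handled.
    public static int RoundedProductBits(float a, int factor)
    {
        int bits = Bits(a);
        long significand = (bits & 0x7FFFFF) | 0x800000; // restore the implicit leading 1
        int exponent = ((bits >> 23) & 0xFF) - 127 - 23; // a == significand * 2^exponent

        long exact = significand * factor;               // exact product, scaled by 2^exponent

        // Count how many low bits must be dropped to get back to 24 significant bits.
        int extra = 0;
        while ((exact >> extra) >= (1L << 24)) extra++;

        long kept = exact >> extra;
        if (extra > 0)
        {
            long dropped = exact & ((1L << extra) - 1);
            long half = 1L << (extra - 1);
            if (dropped > half || (dropped == half && (kept & 1) == 1)) kept++;
            if (kept == (1L << 24)) { kept >>= 1; extra++; } // rounding carried into a 25th bit
        }

        int biasedExponent = exponent + extra + 23 + 127;
        return (biasedExponent << 23) | (int)(kept & 0x7FFFFF);
    }

    static void Main()
    {
        // For positive floats, bit patterns are ordered the same way as the values.
        Console.WriteLine(RoundedProductBits(0.58f, 100) == Bits(58f)); // True
        Console.WriteLine(RoundedProductBits(0.59f, 100) < Bits(59f));  // True
        Console.WriteLine(RoundedProductBits(0.60f, 100) > Bits(60f));  // True
    }
}
```

For 0.58f the significand is 9730785, the exact product is 973078500, and the six dropped bits are 100100 (36, which is more than half of 64), so the kept part rounds up to 15204352, which is exactly 58 * 2^18.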

So it looks like the x64 version of the code got it right (right-most output window in the picture above).

Note: If any of the readers of this question have an old 32-bit CPU, it would be interesting to hear what the output of the above program is on their architecture.

And now for the question:

- Is the above a bug?

- If this is not a bug, where in the C# Specification does it say that the runtime may choose to perform a float multiplication with extra precision and then "forget" to get rid of that precision again?

- How can casting a float expression to the type float change anything?

- Isn't it a problem that seemingly innocent operations, like splitting an expression into two expressions by e.g. pulling out an (a*b) to a temporary local variable, change behavior, when they ought to be mathematically (as per IEEE) equivalent? How can the programmer know in advance if the runtime chooses to hold the float with "artificial" extra (64-bit) precision or not?

- Why are "optimizations" from compiling in Release mode allowed to change arithmetics?

(This was done in the 4.0 version of the .NET Framework.)