I'm using xcopy in a Windows XP script to recursively copy a directory. I keep getting an 'Insufficient Memory' error, which I understand is because a file I'm trying to copy has too long a path. I can easily reduce the path length, but unfortunately I can't work out which files are violating the path length restriction. The files that are copied are printed to standard output (which I'm redirecting to a log file), but the error message is printed to the terminal, so I can't even work out approximately which directory the error is being given for.


[On Windows 7, how do I find all my files whose filenames are too long](https://superuser.com/q/647858/241386) – phuclv Aug 08 '18 at 04:35

9 Answers

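Run `dir /s /b > out.txt`, then open out.txt in a text editor and add a column guide at position 260; any line that extends past the guide is a path that is too long.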

In PowerShell: `cmd /c dir /s /b |? {$_.length -gt 260}`
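A minimal sketch building on the `dir /s /b` approach: because it only inspects the text lines that `dir` prints, it does not depend on `Get-ChildItem` being able to open the long paths. The function name `Select-LongPath` and its `-MinLength` parameter are made up for illustration; the default of 260 is an assumption taken from the error text quoted in the comments on this answer ("must be less than 260 characters"). It assumes PowerShell 3.0 or later for `[pscustomobject]`.

```powershell
# Hypothetical helper: keeps only the input lines that are at least
# $MinLength characters long and reports each one with its length.
function Select-LongPath {
    param(
        [Parameter(ValueFromPipeline = $true)]
        [string]$Path,

        # Assumed threshold: a path must be shorter than 260 characters.
        [int]$MinLength = 260
    )
    process {
        if ($Path.Length -ge $MinLength) {
            [pscustomobject]@{ Length = $Path.Length; Path = $Path }
        }
    }
}
```

One way to use it from the directory being checked: `cmd /c dir /s /b | Select-LongPath | Sort-Object Length -Descending | Format-List`. `Format-List` is used here so that the full path is shown rather than a shortened one.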


`dir /s /b > out.txt` does the job beautifully. Thanks. "'Get-ChildItem' is not recognized as an internal or external command, operable program or batch file." I guess I don't have PowerShell. – WestHamster Oct 03 '12 at 20:35

@WestHamster, you'll have to run that command in the PowerShell Console. – Rami A. Apr 08 '13 at 11:35

It throws an error like: `Get-ChildItem : The specified path, file name, or both are too long. The fully qualified file name must be less than 260 characters, and the directory name must be less than 248 characters.` Along with it, only a shortened path is printed, e.g. `D:\Dropbox\ProY...sh._setbinddata`. Those are exactly the paths I want to find, but I can't see the whole path! Any way around this? – MiniGod Jan 13 '15 at 00:32


Am I the only one who can't get this to work? Using Win7 64 bit Home Premium, Powershell 2.0 -- when I create a test file with a long name (240 characters) and then rename the directory in which it sits to also have a long name, `Get-ChildItems -r *` stops seeing the file... only `dir /s /b` works for me. – Jonas Heidelberg Apr 13 '15 at 22:26


See my answer for a way to achieve this in powershell, allowing you to either find the offending file names (those above 260 characters) or alternatively, ignore them. – Jonno Oct 18 '15 at 12:11


Perfect! This was the only thing I could use to tell me which path was too long when I ran in to a Visual Studio error: `ASPNETCOMPILER : error ASPRUNTIME: The specified path, file name, or both are too long. The fully qualified file name must be less than 260 characters, and the directory name must be less than 248 characters.` – Eddie Fletcher Mar 22 '16 at 02:43


What does it mean to add a guide at 260? I'm new to command prompt. – Animatoring Aug 12 '16 at 15:07


it seems you can [use fully-qualified path to overcome gci's path limit](https://stackoverflow.com/a/39663217/995714) – phuclv Aug 08 '18 at 04:07

I created the Path Length Checker tool for this purpose, which is a nice, free GUI app that you can use to see the path lengths of all files and directories in a given directory.

I've also written and blogged about a simple PowerShell script for getting file and directory lengths. It will output the length and path to a file, and optionally write it to the console as well. It doesn't limit to displaying files that are only over a certain length (an easy modification to make), but displays them descending by length, so it's still super easy to see which paths are over your threshold. Here it is:

$pathToScan = "C:\Some Folder" # The path to scan and get the lengths for (sub-directories will be scanned as well).

$outputFilePath = "C:\temp\PathLengths.txt" # This must be a file in a directory that exists and does not require admin rights to write to.

$writeToConsoleAsWell = $true # Writing to the console will be much slower.

# Open a new file stream (nice and fast) and write all the paths and their lengths to it.

$outputFileDirectory = Split-Path $outputFilePath -Parent

if (!(Test-Path $outputFileDirectory)) { New-Item $outputFileDirectory -ItemType Directory }

$stream = New-Object System.IO.StreamWriter($outputFilePath, $false)

Get-ChildItem -Path $pathToScan -Recurse -Force | Select-Object -Property FullName, @{Name="FullNameLength";Expression={($_.FullName.Length)}} | Sort-Object -Property FullNameLength -Descending | ForEach-Object {

$filePath = $_.FullName

$length = $_.FullNameLength

$string = "$length : $filePath"

# Write to the Console.

if ($writeToConsoleAsWell) { Write-Host $string }

#Write to the file.

$stream.WriteLine($string)

}

$stream.Close()
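The script above lists every path. As a hedged sketch of the "easy modification" of keeping only items at or above a threshold, the function below filters by length and can measure either the full path or just the file name. `Get-LongItem`, `-MinLength`, and `-NameOnly` are hypothetical names, not part of the original script or of Path Length Checker, and the default of 260 is an assumption. Like the original, it relies on `Get-ChildItem`, so where `Get-ChildItem` itself cannot enumerate over-long paths it will hit the same "too long" error.

```powershell
# Hypothetical variant: returns only items whose measured length is
# at least $MinLength, longest first.
function Get-LongItem {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Path,

        # Assumed default threshold.
        [int]$MinLength = 260,

        # Measure the file or directory name instead of the full path.
        [switch]$NameOnly
    )

    Get-ChildItem -Path $Path -Recurse -Force |
        ForEach-Object {
            $text = if ($NameOnly) { $_.Name } else { $_.FullName }
            [pscustomobject]@{ Length = $text.Length; Path = $_.FullName }
        } |
        Where-Object { $_.Length -ge $MinLength } |
        Sort-Object -Property Length -Descending
}
```

For example, `Get-LongItem -Path "C:\Some Folder" -MinLength 144 -NameOnly` would list items whose names alone are longer than 143 characters.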

Interesting script, but I'm still getting this error: `Get-ChildItem : The specified path, file name, or both are too long. The fully qualified file name must be less than 260 characters, and the directory name must be less than 248 characters.` – zkurtz Dec 04 '14 at 21:39

Excellent tool!! I had a huge amount of data and wanted to create a backup on a WD MyCloud HDD. This tool told me the maximum length I could use when creating the folder structure on the HDD. Thanks. – NJMR Feb 18 '16 at 04:14


Great tool! I used it to create a script able to find all files/folders having a path longer than 247 characters, which is the limit for Synology Drive Client in Windows. The main code is `PathLengthChecker.exe RootDirectory="C:\[MyFolder]" MinLength=248`, while `echo SELECT sync_folder FROM session_table;| sqlite3.exe %LocalAppData%\SynologyDrive\data\db\sys.sqlite` was needed to programmatically get the share folders. (If you are interested in the full code feel free to ask) – Kar.ma Dec 10 '20 at 16:18

Great tool, thank you. For my Rsync job between 2 Synology NAS, I need to know all FILENAMES longer than 143 characters. This is not the entire path length, only the max length per filename. How could I find such filenames? – PeterCo Dec 22 '20 at 14:43

Hey @PeterCo you can still use the above PowerShell script, but instead of using `$_.FullName` just replace it with `$_.Name` everywhere; that will give you the filename instead of the full path. – deadlydog Dec 22 '20 at 18:58

As a refinement of the simplest solution, and if you can't or don't want to install PowerShell, just run:

dir /s /b | sort /r /+261 > out.txt

or (faster):

dir /s /b | sort /r /+261 /o out.txt

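Lines longer than 260 characters will then appear at the top of the listing. Note that you must add 1 to the SORT column parameter (/+n): /+261 starts the comparison at character 261, and with /r the lines that have characters at that position sort first.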


Thanks for the refinement. It was my case that I could not install Powershell (Windows 2003 box). VOTED UP – preOtep Sep 20 '16 at 18:10


Up voted. I think this should also mention SUBST to shorten a path into a drive ftw. – cliffclof Sep 10 '20 at 04:49

I've made an alternative to the other good answers here. It also uses PowerShell, but mine saves the list to a file. I'll share it here in case anyone else needs or wants something like that.

Warning: Code overwrites "longfilepath.txt" in the current working directory. I know it's unlikely you'd have one already, but just in case!

Purposely wanted it in a single line:

Out-File longfilepath.txt ; cmd /c "dir /b /s /a" | ForEach-Object { if ($_.length -gt 250) {$_ | Out-File -append longfilepath.txt}}

Detailed instructions:

- Run PowerShell

- Traverse to the directory you want to check for filepath lengths (C: works)

- Copy and paste the code [Right click to paste in PowerShell, or Alt + Space > E > P]

- Wait until it's done and then view the file:

cat longfilepath.txt | sort

Explanation:

Out-File longfilepath.txt ; – Create (or overwrite) a blank file titled 'longfilepath.txt'. Semi-colon to separate commands.

cmd /c "dir /b /s /a" | – Run dir command on PowerShell, /a to show all files including hidden files. | to pipe.

ForEach-Object { if ($_.length -gt 250) {$_ | Out-File -append longfilepath.txt}} – For each line (denoted as $_), if the length is greater than 250, append that line to the file.


From http://www.powershellmagazine.com/2012/07/24/jaap-brassers-favorite-powershell-tips-and-tricks/:

Get-ChildItem -Force -Recurse -ErrorAction SilentlyContinue -ErrorVariable AccessDenied

The first part just iterates through the current folder and its sub-folders; -ErrorVariable AccessDenied collects the errors for the offending items in the PowerShell variable AccessDenied.

You can then scan through the variable like so

$AccessDenied |

Where-Object { $_.Exception -match "must be less than 260 characters" } |

ForEach-Object { $_.TargetObject }

If you don't care about these files (may be applicable in some cases), simply drop the -ErrorVariable AccessDenied part.


Problem is, `$_.TargetObject` doesn't contain the full path to _files_ that violate `MAX_PATH`, only _directory names_, and the _parent directory name_ of the offending files. I am working on finding a non-Robocopy or Subst or Xcopy solution, and will post when I find a working solution. – user66001 Dec 15 '15 at 19:08

You can redirect stderr to a file of its own. A command like:

MyCommand >log.txt 2>errors.txt

should grab the data you are looking for.

Also, as a trick, Windows bypasses that limitation if the path is prefixed with \\?\ (msdn)

Another trick if you have a root or destination that starts with a long path, perhaps SUBST will help:

SUBST Q: "C:\Documents and Settings\MyLoginName\My Documents\MyStuffToBeCopied"

Xcopy Q:\ "d:\Where it needs to go" /s /e

SUBST Q: /D


Redirecting the std output and the errors should give me a good enough indication of where the big file is thanks. command > file 2>&1 – WestHamster Oct 03 '12 at 17:58


that will work, but my command *should* separate the errors into a separate file – SeanC Oct 03 '12 at 17:59


\\?\ looks good too. I can't get the following to work though: xcopy /s \\?\Q:\nuthatch \\?\Q:\finch – WestHamster Oct 03 '12 at 18:06


hmm... seems `\\?\ ` doesn't work for the command line. Added another idea using `SUBST` – SeanC Oct 03 '12 at 18:17


Unfortunately subst won't be too useful because the command is of the type: xcopy /s q:\nuthatch q:\finch

and the long path is embedded somewhere unknown under nuthatch – WestHamster Oct 03 '12 at 20:27

TLPD ("too long path directory") is the program that saved me. Very easy to use.


Crazily, this question is still relevant. None of the answers gave me quite what I wanted although E235 gave me the base. I also print out the length of the name to make it easier to see how many characters one has to trim.

Get-ChildItem -Recurse | Where-Object {$_.FullName.Length -gt 260} | %{"{0} : {1}" -f $_.fullname.Length,$_.fullname }


For paths longer than 260 characters, you can use:

Get-ChildItem | Where-Object {$_.FullName.Length -gt 260}

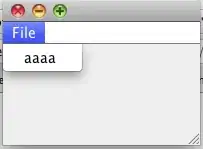

Example on 14 chars:

To view the paths lengths:

Get-ChildItem | Select-Object -Property FullName, @{Name="FullNameLength";Expression={($_.FullName.Length)}}

Get paths greater than 14:

Get-ChildItem | Where-Object {$_.FullName.Length -gt 14}


For filenames greater than 10:

Get-ChildItem | Where-Object {$_.PSChildName.Length -gt 10}

