I am implementing an SVM using the scikit-learn package in Python, and I am having difficulty interpreting the "alpha i" values in the `plot_separating_hyperplane.py` example:

```python
import numpy as np
import matplotlib.pyplot as plt  # only needed for the plotting part of the full example
from sklearn import svm

# we create 40 separable points
np.random.seed(0)
X = np.r_[np.random.randn(20, 2) - [2, 2], np.random.randn(20, 2) + [2, 2]]
Y = [0] * 20 + [1] * 20

# fit the model
clf = svm.SVC(kernel='linear')
clf.fit(X, Y)

# support_vectors_ holds the support vectors
print(clf.support_vectors_)
```

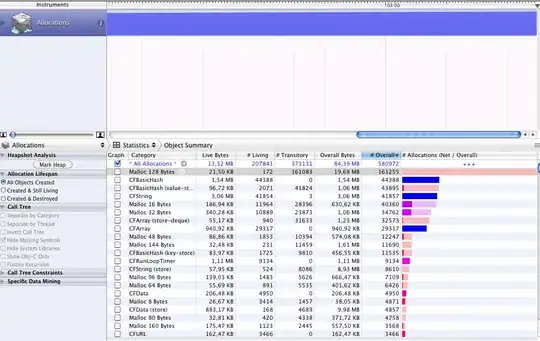

```python
# dual_coef_ gives us the "alpha i * y i" value for each support vector
print(clf.dual_coef_)
```
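As a sketch of how the columns of `dual_coef_` line up with the rows of `support_vectors_`: `clf.support_` holds the training-set index of each support vector and `clf.n_support_` the number of support vectors per class, so each coefficient can be paired with its point and its original 0/1 label without reading it off the plot. The names `labels` and `sv_labels` are illustrative only.

```python
import numpy as np
from sklearn import svm

# Same 40 separable points as in the question
np.random.seed(0)
X = np.r_[np.random.randn(20, 2) - [2, 2], np.random.randn(20, 2) + [2, 2]]
Y = [0] * 20 + [1] * 20

clf = svm.SVC(kernel='linear')
clf.fit(X, Y)

labels = np.asarray(Y)
# clf.support_ holds the index of each support vector in the training set X
sv_labels = labels[clf.support_]

# dual_coef_ has shape (1, n_SV): column j belongs to row j of support_vectors_
for coef, sv, lab in zip(clf.dual_coef_[0], clf.support_vectors_, sv_labels):
    print(f"label={lab}  point={sv}  dual_coef={coef:+.8f}")

# n_support_ gives the number of support vectors per class, in clf.classes_ order
print("support vectors per class:",
      {int(c): int(n) for c, n in zip(clf.classes_, clf.n_support_)})
```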

Sample output:

```
dual_coef_ = [[ 0.04825885  0.56891844 -0.61717729]]

support_vectors_ =
[[-1.02126202  0.2408932 ]
 [-0.46722079 -0.53064123]
 [ 0.95144703  0.57998206]]
```

`dual_coef_` gives us the "alpha i * y i" values. We can confirm that the sum of the "alpha i * y i" values is 0 (0.04825885 + 0.56891844 - 0.61717729 = 0).
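The zero sum is the equality constraint $y^T \alpha = 0$ of the SVC dual problem, and the box constraint $0 \le \alpha_i \le C$ bounds each magnitude. A quick numerical check of both, as a sketch assuming the default `C=1.0` that `SVC` uses when none is passed; the tolerance is an arbitrary choice, since libsvm only solves to finite precision.

```python
import numpy as np
from sklearn import svm

np.random.seed(0)
X = np.r_[np.random.randn(20, 2) - [2, 2], np.random.randn(20, 2) + [2, 2]]
Y = [0] * 20 + [1] * 20

clf = svm.SVC(kernel='linear')  # C defaults to 1.0
clf.fit(X, Y)

alpha_y = clf.dual_coef_[0]  # alpha_i * y_i for each support vector

# Equality constraint of the dual: sum_i alpha_i * y_i = 0
total = alpha_y.sum()
print("sum of alpha_i * y_i:", total)

# Box constraint 0 <= alpha_i <= C; since y_i is +1 or -1, |alpha_i * y_i| = alpha_i
print("max |alpha_i * y_i|:", np.abs(alpha_y).max(), "<= C =", clf.C)
```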

I want to find the "alpha i" values. That should be easy, since we have the "alpha i * y i" values. But I'm getting all the "alpha i" values to be negative. For example, the point (0.95144703, 0.57998206) lies above the line (see the plot), so y = +1. If y = +1, then alpha = -0.61717729. Similarly, the point (-1.02126202, 0.2408932) lies below the line, so y = -1, and hence alpha = -0.04825885.
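A sketch of recovering $\alpha_i$ without reading $y_i$ off the plot. Because $\alpha_i \ge 0$ and $y_i \in \{-1, +1\}$, $\alpha_i = |\alpha_i y_i|$, and the sign of each `dual_coef_` entry is the $\pm 1$ label the solver actually used for that point. How scikit-learn maps the labels `0`/`1` to the solver's $\pm 1$ is an internal detail that may differ between versions (an assumption worth checking on your install), so the code only checks that each class gets a single consistent sign instead of hard-coding one. The names `alpha`, `y_internal` and `sign_by_class` are illustrative.

```python
import numpy as np
from sklearn import svm

np.random.seed(0)
X = np.r_[np.random.randn(20, 2) - [2, 2], np.random.randn(20, 2) + [2, 2]]
Y = [0] * 20 + [1] * 20

clf = svm.SVC(kernel='linear')
clf.fit(X, Y)

alpha_y = clf.dual_coef_[0]                # alpha_i * y_i per support vector
sv_labels = np.asarray(Y)[clf.support_]    # original 0/1 label of each support vector

# alpha_i >= 0 and y_i is +1 or -1, so alpha_i is the magnitude of dual_coef_
alpha = np.abs(alpha_y)
# ...and the sign is the +1/-1 label the solver used internally for that point
y_internal = np.sign(alpha_y).astype(int)

# Every support vector of one class should carry the same internal sign
sign_by_class = {}
for cls in clf.classes_:
    signs = sorted({int(s) for s in y_internal[sv_labels == cls]})
    print(f"class {cls}: internal y_i values {signs}")
    sign_by_class[int(cls)] = signs

print("alpha_i:", alpha)
```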

Why am I getting negative alpha values? Is my interpretation wrong? Any help would be appreciated.

For your reference,

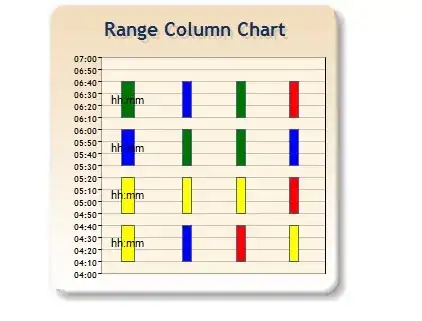

For the Support Vector Classifier (SVC),

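Given training vectors $x_i \in \mathbb{R}^p$, $i = 1, \dots, n$, in two classes, and a vector $y \in \{1, -1\}^n$, SVC solves the following primal problem:

$$\min_{w, b, \zeta} \frac{1}{2} w^T w + C \sum_{i=1}^{n} \zeta_i$$

$$\text{subject to } y_i \left(w^T \phi(x_i) + b\right) \geq 1 - \zeta_i, \quad \zeta_i \geq 0, \quad i = 1, \dots, n$$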

Its dual is

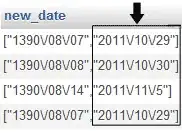

where $e$ is the vector of all ones, $C > 0$ is the upper bound, $Q$ is an $n$ by $n$ positive semidefinite matrix, $Q_{ij} \equiv y_i y_j K(x_i, x_j)$, and $K(x_i, x_j) = \phi(x_i)^T \phi(x_j)$ is the kernel. Here training vectors are mapped into a higher (maybe infinite) dimensional space by the function $\phi$.
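For a linear kernel, $K(x_i, x_j) = x_i^T x_j$ and $\phi$ is the identity, so the primal weight vector is $w = \sum_i y_i \alpha_i x_i$, which is `dual_coef_ @ support_vectors_`. The sketch below rebuilds $Q_{ij} = y_i y_j K(x_i, x_j)$ over the support vectors only (every other $\alpha_i$ is zero and drops out of the objective, a simplification made for this sketch), evaluates the dual objective $\frac{1}{2}\alpha^T Q \alpha - e^T \alpha$, and compares $w$ with `clf.coef_`.

```python
import numpy as np
from sklearn import svm

np.random.seed(0)
X = np.r_[np.random.randn(20, 2) - [2, 2], np.random.randn(20, 2) + [2, 2]]
Y = [0] * 20 + [1] * 20

clf = svm.SVC(kernel='linear')
clf.fit(X, Y)

sv = clf.support_vectors_
alpha_y = clf.dual_coef_[0]   # y_i * alpha_i
alpha = np.abs(alpha_y)       # alpha_i >= 0
y = np.sign(alpha_y)          # +1/-1 labels as used by the solver

# Linear kernel: K(x_i, x_j) = x_i . x_j, and Q_ij = y_i * y_j * K(x_i, x_j)
K = sv @ sv.T
Q = np.outer(y, y) * K

# Dual objective (1/2) alpha^T Q alpha - e^T alpha, restricted to the support vectors
dual_objective = 0.5 * alpha @ Q @ alpha - alpha.sum()
print("dual objective:", dual_objective)

# Primal weights from the dual solution: w = sum_i y_i * alpha_i * x_i
w = alpha_y @ sv
print("w from dual_coef_:", w)
print("clf.coef_:        ", clf.coef_[0])
```

Since $\alpha^T Q \alpha = \|w\|^2$, the dual objective here is just $\frac{1}{2}\|w\|^2 - \sum_i \alpha_i$.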
