Why is decimal not a primitive type?

Console.WriteLine(typeof(decimal).IsPrimitive);

outputs `False`.

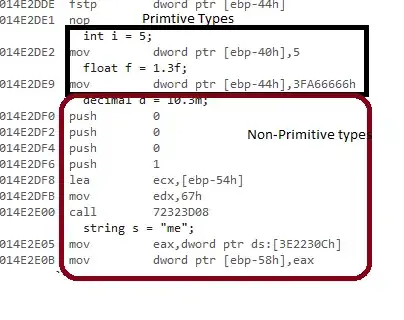

It is a built-in type and part of the language specification, but it is not a primitive. Which primitive type(s) represent a decimal in the framework? An int, for example, has a field m_value of type int. A double has a field m_value of type double. That is not the case for decimal. It seems to be represented by a bunch of ints, but I'm not sure.
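Here is a small sketch of how I tried to check the "bunch of ints" guess. It uses the public `decimal.GetBits` method, which returns four ints: the low, middle and high 32 bits of a 96-bit integer, plus a flags word holding the scale and the sign. I'm assuming this public view reflects how the value is stored; the actual private fields may be laid out differently.

```csharp
using System;

class Program
{
    static void Main()
    {
        // 1.5m is the integer 15 with a scale of 1 (15 / 10^1).
        int[] bits = decimal.GetBits(1.5m);

        Console.WriteLine(bits.Length);              // 4
        Console.WriteLine(bits[0]);                  // 15 (low 32 bits of the 96-bit integer)
        Console.WriteLine(bits[1]);                  // 0  (middle 32 bits)
        Console.WriteLine(bits[2]);                  // 0  (high 32 bits)

        // The fourth int holds the scale in bits 16-23 and the sign in bit 31.
        int scale = (bits[3] >> 16) & 0xFF;
        bool isNegative = bits[3] < 0;
        Console.WriteLine(scale);                    // 1
        Console.WriteLine(isNegative);               // False

        // For comparison: int and double report as primitive, decimal does not.
        Console.WriteLine(typeof(int).IsPrimitive);      // True
        Console.WriteLine(typeof(double).IsPrimitive);   // True
        Console.WriteLine(typeof(decimal).IsPrimitive);  // False
    }
}
```

So at least through `GetBits`, a decimal looks like a struct composed of several ints rather than a single value the runtime handles natively.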

Why does it look like a primitive type and behave like a primitive type (except in a couple of cases), yet is not a primitive type?