Here's a simple, effective, but perhaps somewhat naive approach.

First, build a generic interpolator for each of the two functions, so that you can evaluate both of them in between the given data points. I used a cubic-spline interpolator, since that seems general enough for the type of smooth functions you provided (and does not require additional toolboxes).
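As a minimal sketch of that interpolation step on its own, here is how `spline` and `ppval` can be combined. The sine samples are just hypothetical toy data, not the data from the question:

```matlab
% Toy data (hypothetical): a smooth function sampled at integer locations
xk = 1:10;
yk = sin(xk);

pp = spline(xk, yk);        % piecewise-polynomial (pp) form of the cubic spline

xq = 1:0.25:10;             % query points, including locations between samples
yq = ppval(pp, xq);         % interpolated values at the query points

% The spline passes through the original samples (up to round-off)
maxKnotErr = max(abs(ppval(pp, xk) - yk));
```

Note that `ppval` also returns values outside the sampled range by extending the end polynomials, so results far beyond the data should be treated with caution.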

Then evaluate the source function ("original") at a large number of points. Use these values as a parameter in an inline (anonymous) function that takes as input X, where

X = [a b]

(as in ax+b). For any input X, this inline function will compute

- the function values of the target function at the same x-locations, but scaled and offset by a and b, respectively;

- the sum of the squared differences between the resulting function values and the ones of the source function you computed earlier.

Use this inline function in fminsearch with some initial estimate (one that you have obtained visually or by automatic means). For the example you provided, I used a few random ones, which all converged to near-optimal fits.

All of the above in code:

function s = findScaleOffset

%% initialize

f2 = [0;0.450541598502498;0.0838213779969326;0.228976968716819;0.91333736150167;0.152378018969223;0.825816977489547;0.538342435260057;0.996134716626885;0.0781755287531837;0.442678269775446;0];

f1 = [-0.029171964726699;-0.0278570165494982;0.0331454732535324;0.187656956432487;0.358856370923984;0.449974662483267;0.391341738643094;0.244800719791534;0.111797007617227;0.0721767235173722;0.0854437239807415;0.143888234591602;0.251750993723227;0.478953530572365;0.748209818420035;0.908044924557262;0.811960826711455;0.512568916956487;0.22669198638799;0.168136111568694;0.365578085161896;0.644996661336714;0.823562159983554;0.792812945867018;0.656803251999341;0.545799498053254;0.587013303815021;0.777464637372241;0.962722388208354;0.980537136457874;0.734416947254272;0.375435649393553;0.106489547770962;0.0892376361668696;0.242467741982851;0.40610516900965;0.427497319032133;0.301874099075184;0.128396341665384;0.00246347624097456;-0.0322120242872125];

figure(1), clf, hold on

h(1) = subplot(2,1,1); hold on

plot(f1);

legend('Original')

h(2) = subplot(2,1,2); hold on

plot(f2);

linkaxes(h)

axis([0 max(length(f1),length(f2)), min(min(f1),min(f2)),max(max(f1),max(f2))])

%% make cubic interpolators and test points

pp1 = spline(1:numel(f1), f1);

pp2 = spline(1:numel(f2), f2);

maxX = max(numel(f1), numel(f2));

N = 100 * maxX;

x2 = linspace(1, maxX, N);

y1 = ppval(pp1, x2);

%% search for parameters

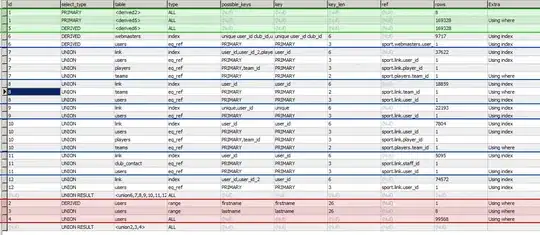

s = fminsearch(@(X) sum( (y1 - ppval(pp2,X(1)*x2+X(2))).^2 ), [0 0])

%% plot results

y2 = ppval( pp2, s(1)*x2+s(2));

figure(1), hold on

subplot(2,1,2), hold on

plot(x2,y2, 'r')

legend('before', 'after')

end

Results:

s =

2.886234493867320e-001 3.734482822175923e-001

Note that this computes the opposite transformation from the one you generated the data with. Reversing the numbers:

>> 1/s(1)

ans =

3.464721948700991e+000 % seems pretty decent

>> -s(2)

ans =

-3.734482822175923e-001 % hmmm...rather different from 7/11!

(I'm not sure about the 7/11 value you provided; using the exact values you gave to make a plot results in a less accurate approximation to the source function...Are you sure about the 7/11?)
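For reference, here is a sketch of the algebra behind inverting the fitted map, assuming the fit is read as $f_1(x) \approx f_2(a x + b)$ with $a$ = s(1) and $b$ = s(2), which is what the objective in the code minimizes. Substituting $u = a x + b$ gives

$$
f_2(u) \;\approx\; f_1\!\left(\frac{u - b}{a}\right) \;=\; f_1\!\left(\frac{1}{a}\,u - \frac{b}{a}\right)
$$

so under this reading the reverse map has scale $1/a$ and offset $-b/a$ rather than $-b$. With the values above, that is roughly 3.46 and -1.29. Whether either offset is the right quantity to compare against 7/11 depends on how the original transformation and the (1-based) sample locations were defined, which is not shown here.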

Accuracy can be improved by any of the following:

- using a different optimizer (fmincon, fminunc, etc.; note that these require the Optimization Toolbox)

- demanding a higher accuracy from fminsearch through optimset

- having more sample points in both f1 and f2 to improve the quality of the interpolations

- using a better initial estimate
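As an illustration of the optimset option, the sketch below tightens the termination tolerances of fminsearch. Everything in it is a hypothetical stand-in: the bump-shaped target, the "true" parameters `aTrue` and `bTrue`, the initial estimate, and the tolerance values are assumptions chosen so that the correct answer is known in advance.

```matlab
% Synthetic stand-in data (hypothetical, not the data from the question)
u   = 1:12;
g   = exp(-(u - 6).^2 / 4);          % target samples: a smooth bump
pp2 = spline(u, g);                  % cubic-spline interpolator of the target

aTrue = 0.3;  bTrue = 0.4;           % known transformation for this toy case
x  = linspace(3, 38, 400);           % keeps aTrue*x + bTrue inside [1, 12]
y1 = ppval(pp2, aTrue*x + bTrue);    % "source" values generated from the target

% Same sum-of-squared-differences objective as in the function above
cost = @(X) sum((y1 - ppval(pp2, X(1)*x + X(2))).^2);

% Tighter termination tolerances and a larger evaluation budget (assumed values)
opts = optimset('TolX', 1e-10, 'TolFun', 1e-12, ...
                'MaxFunEvals', 5000, 'MaxIter', 5000);

sTight = fminsearch(cost, [0.28 0.5], opts);
```

Tighter tolerances only refine the minimum that the search is already heading for; they do not help if the initial estimate leads to the wrong local minimum.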

Anyway, this approach is pretty general and gives nice results. It also requires no toolboxes.

It has one major drawback though: the solution found may not be the global optimum, i.e., the quality of the outcome can be quite sensitive to the initial estimate you provide. So always make a (difference) plot to make sure the final solution is accurate or, if you have a large number of such fits to do, compute some sort of quality factor and use it to decide whether to restart the optimization with a different initial estimate.
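One possible way to automate such a restart strategy is sketched below. It uses the RMS residual as the quality factor, which is my assumption rather than a prescribed choice. The toy data, the list of initial estimates in `starts`, and the acceptance threshold `tol` are all hypothetical.

```matlab
% Synthetic stand-in data (hypothetical): source generated from a known map
u   = 1:12;
g   = exp(-(u - 6).^2 / 4);
pp2 = spline(u, g);
x   = linspace(3, 38, 400);
y1  = ppval(pp2, 0.3*x + 0.4);       % true parameters are [0.3 0.4] here

cost   = @(X) sum((y1 - ppval(pp2, X(1)*x + X(2))).^2);
rmsErr = @(X) sqrt(cost(X) / numel(x));   % quality factor (assumed choice)

opts = optimset('TolX', 1e-8, 'TolFun', 1e-10, ...
                'MaxFunEvals', 2000, 'MaxIter', 2000);

% Hypothetical set of initial estimates [a b], one per row
starts = [0.1 0; 0.2 1; 0.28 0.5; 0.4 -1; 0.5 2];

best = [];  bestErr = Inf;
for k = 1:size(starts, 1)
    cand = fminsearch(cost, starts(k, :), opts);
    err  = rmsErr(cand);
    if err < bestErr                 % keep the candidate with the smallest residual
        best = cand;  bestErr = err;
    end
end

tol = 1e-3;                          % hypothetical acceptance threshold
accepted = bestErr < tol;            % if false, try more initial estimates
```

Some of these initial estimates may well end up in poor local minima; only the best candidate is kept, and `accepted` flags whether even that one is good enough.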

It is of course very possible to use the results of the Fourier+Mellin transforms (as suggested by chaohuang below) as an initial estimate to this method. That might be overkill for the simple example you provide, but I can easily imagine situations where this could indeed be very useful.

This method is accurate but exhaustive and may take some time. Another disadvantage is that it finds only a local minimum, and may give false results if the initial guess (x0) is far from the true solution.
