How do I remove unwanted parts from strings in a column?

6 years after the original question was posted, pandas now has a good number of "vectorised" string functions that can succinctly perform these string manipulation operations.

This answer will explore some of these string functions, suggest faster alternatives, and go into a timings comparison at the end.

Specify the substring/pattern to match, and the substring to replace it with.

pd.__version__

# '0.24.1'

df

time result

1 09:00 +52A

2 10:00 +62B

3 11:00 +44a

4 12:00 +30b

5 13:00 -110a

df['result'] = df['result'].str.replace(r'\D', '', regex=True)

df

time result

1 09:00 52

2 10:00 62

3 11:00 44

4 12:00 30

5 13:00 110

If you need the result converted to an integer, you can use Series.astype,

df['result'] = df['result'].str.replace(r'\D', '', regex=True).astype(int)

df.dtypes

time object

result int64

dtype: object

If you don't want to modify df in-place, use DataFrame.assign:

df2 = df.assign(result=df['result'].str.replace(r'\D', '', regex=True))

df

# Unchanged

Useful for extracting the substring(s) you want to keep.

df['result'] = df['result'].str.extract(r'(\d+)', expand=False)

df

time result

1 09:00 52

2 10:00 62

3 11:00 44

4 12:00 30

5 13:00 110

With extract, it is necessary to specify at least one capture group. expand=False will return a Series with the captured items from the first capture group.

###.str.split and .str.get

Splitting works assuming all your strings follow this consistent structure.

# df['result'] = df['result'].str.split(r'\D').str[1]

df['result'] = df['result'].str.split(r'\D').str.get(1)

df

time result

1 09:00 52

2 10:00 62

3 11:00 44

4 12:00 30

5 13:00 110

Do not recommend if you are looking for a general solution.

If you are satisfied with the succinct and readable str

accessor-based solutions above, you can stop here. However, if you are

interested in faster, more performant alternatives, keep reading.

Optimizing: List Comprehensions

In some circumstances, list comprehensions should be favoured over pandas string functions. The reason is because string functions are inherently hard to vectorize (in the true sense of the word), so most string and regex functions are only wrappers around loops with more overhead.

My write-up, Are for-loops in pandas really bad? When should I care?, goes into greater detail.

The str.replace option can be re-written using re.sub

import re

# Pre-compile your regex pattern for more performance.

p = re.compile(r'\D')

df['result'] = [p.sub('', x) for x in df['result']]

df

time result

1 09:00 52

2 10:00 62

3 11:00 44

4 12:00 30

5 13:00 110

The str.extract example can be re-written using a list comprehension with re.search,

p = re.compile(r'\d+')

df['result'] = [p.search(x)[0] for x in df['result']]

df

time result

1 09:00 52

2 10:00 62

3 11:00 44

4 12:00 30

5 13:00 110

If NaNs or no-matches are a possibility, you will need to re-write the above to include some error checking. I do this using a function.

def try_extract(pattern, string):

try:

m = pattern.search(string)

return m.group(0)

except (TypeError, ValueError, AttributeError):

return np.nan

p = re.compile(r'\d+')

df['result'] = [try_extract(p, x) for x in df['result']]

df

time result

1 09:00 52

2 10:00 62

3 11:00 44

4 12:00 30

5 13:00 110

We can also re-write @eumiro's and @MonkeyButter's answers using list comprehensions:

df['result'] = [x.lstrip('+-').rstrip('aAbBcC') for x in df['result']]

And,

df['result'] = [x[1:-1] for x in df['result']]

Same rules for handling NaNs, etc, apply.

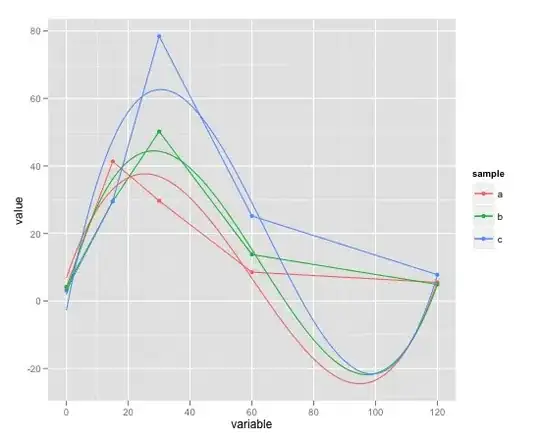

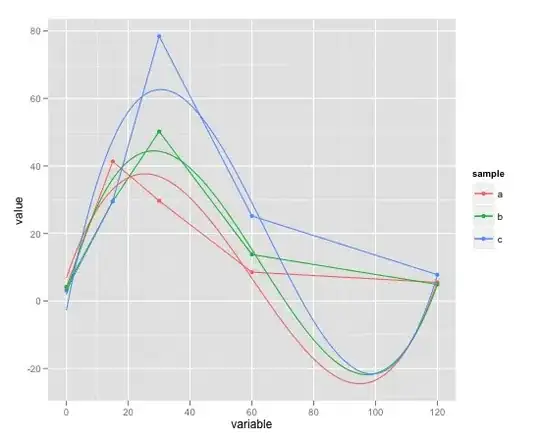

Performance Comparison

Graphs generated using perfplot. Full code listing, for your reference. The relevant functions are listed below.

Some of these comparisons are unfair because they take advantage of the structure of OP's data, but take from it what you will. One thing to note is that every list comprehension function is either faster or comparable than its equivalent pandas variant.

Functions

def eumiro(df):

return df.assign(

result=df['result'].map(lambda x: x.lstrip('+-').rstrip('aAbBcC')))

def coder375(df):

return df.assign(

result=df['result'].replace(r'\D', r'', regex=True))

def monkeybutter(df):

return df.assign(result=df['result'].map(lambda x: x[1:-1]))

def wes(df):

return df.assign(result=df['result'].str.lstrip('+-').str.rstrip('aAbBcC'))

def cs1(df):

return df.assign(result=df['result'].str.replace(r'\D', ''))

def cs2_ted(df):

# `str.extract` based solution, similar to @Ted Petrou's. so timing together.

return df.assign(result=df['result'].str.extract(r'(\d+)', expand=False))

def cs1_listcomp(df):

return df.assign(result=[p1.sub('', x) for x in df['result']])

def cs2_listcomp(df):

return df.assign(result=[p2.search(x)[0] for x in df['result']])

def cs_eumiro_listcomp(df):

return df.assign(

result=[x.lstrip('+-').rstrip('aAbBcC') for x in df['result']])

def cs_mb_listcomp(df):

return df.assign(result=[x[1:-1] for x in df['result']])