The coefficients are identical to those given in the Handbook of Mathematical Functions, ed. Abramowitz and Stegan (1964), page 76, and are attributed to Carlson and Goldstein, Rational approximations of functions, Los Alamos Scientific Laboratory (1955).

The first can be found in http://www.jonsson.eu/resources/hmf/pdfwrite_600dpi/hmf_600dpi_page_76.pdf.

And the second at http://www.osti.gov/bridge/servlets/purl/4374577-0deJO9/4374577.pdf. Page 37 gives:

Regarding your third question, "Can I extend this to get more precision?", http://lol.zoy.org/wiki/doc/maths/remez has a downloadable C++ implementation of the Remez algorithm; it provides (unchecked by me) the coefficients for the 6th-order polynomial for sin:

error: 3.9e-14

9.99999999999624e-1

-1.66666666660981e-1

8.33333330841468e-3

-1.98412650240363e-4

2.75568408741356e-6

-2.50266363478673e-8

1.53659375573646e-10

Or course, you would need to change from float to double to realize any improvement. And this may also answer your second question, regarding cos and tan.

Also, I see in the comments that a fixed-point answer is required in the end. I implemented a 32-bit fixed-point version in 8031-assembler about 26 years ago; I'll try digging it up to see whether it has anything useful in it.

Update: If you are stuck with 32-bit doubles, then the only way I can see for you to increase the accuracy by a "digit or two" is to forget floating-point and use fixed-point. Surprisingly, google doesn't seem to turn up anything. The following code provides proof-of-concept, run on a standard Linux machine:

#include <stdio.h>

#include <math.h>

#include <stdint.h>

// multiply two 32-bit fixed-point fractions (no rounding)

#define MUL32(a, b) ((uint64_t)(a) * (b) >> 32)

// sin32: Fixed-point sin calculation for first octant, coefficients from

// Handbook for Computing Elementary Functions, by Lyusternik et al, p. 89.

// input: 0 to 0xFFFFFFFF, giving fraction of octant 0 to PI/8, relative to 2**32

// output: 0 to 0.7071, relative to 2**32

static uint32_t sin32(uint32_t x) { // x in 1st octant, = radians/PI*8*2**32

uint32_t y, x2 = MUL32(x, x); // x2 = x * x

y = 0x000259EB; // a7 = 0.000 035 877 1

y = 0x00A32D1E - MUL32(x2, y); // a5 = 0.002 489 871 8

y = 0x14ABBA77 - MUL32(x2, y); // a3 = 0.080 745 367 2

y = 0xC90FDA73u - MUL32(x2, y); // a1 = 0.785 398 152 4

return MUL32(x, y);

}

int main(void) {

int i;

for (i = 0; i < 45; i += 2) { // 0 to 44 degrees

const double two32 = 1LL << 32;

const double radians = i * M_PI / 180;

const uint32_t octant = i / 45. * two32; // fraction of 1st octant

printf("%2d %+.10f %+.10f %+.10f %+.0f\n", i,

sin(radians) - sin32(octant) / two32,

sin(radians) - sinf(radians),

sin(radians) - (float)sin(radians),

sin(radians) * two32 - sin32(octant));

}

return 0;

}

The coefficients are from the Handbook for Computing Elementary Functions, by Lyusternik et al, p. 89, here:

The only reason I choose this particular function is that it has one less term than your original series.

The results are:

0 +0.0000000000 +0.0000000000 +0.0000000000 +0

2 +0.0000000007 +0.0000000003 +0.0000000012 +3

4 +0.0000000010 +0.0000000005 +0.0000000031 +4

6 +0.0000000012 -0.0000000029 -0.0000000011 +5

8 +0.0000000014 +0.0000000011 -0.0000000044 +6

10 +0.0000000014 +0.0000000050 -0.0000000009 +6

12 +0.0000000011 -0.0000000057 +0.0000000057 +5

14 +0.0000000006 -0.0000000018 -0.0000000061 +3

16 -0.0000000000 +0.0000000021 -0.0000000026 -0

18 -0.0000000005 -0.0000000083 -0.0000000082 -2

20 -0.0000000009 +0.0000000095 -0.0000000107 -4

22 -0.0000000010 -0.0000000007 +0.0000000139 -4

24 -0.0000000009 -0.0000000106 +0.0000000010 -4

26 -0.0000000005 +0.0000000065 -0.0000000049 -2

28 -0.0000000001 -0.0000000032 -0.0000000110 -0

30 +0.0000000005 -0.0000000126 -0.0000000000 +2

32 +0.0000000010 +0.0000000037 -0.0000000025 +4

34 +0.0000000015 +0.0000000193 +0.0000000076 +7

36 +0.0000000013 -0.0000000141 +0.0000000083 +6

38 +0.0000000007 +0.0000000011 -0.0000000266 +3

40 -0.0000000005 +0.0000000156 -0.0000000256 -2

42 -0.0000000009 -0.0000000152 -0.0000000170 -4

44 -0.0000000005 -0.0000000011 -0.0000000282 -2

Thus we see that this fixed-point calculation is about ten times more accurate than sinf() or (float)sin(), and is correct to 29 bits. Using rounding rather than truncation in MUL32() made only a marginal improvement.

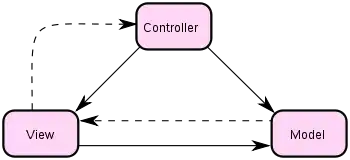

Plot of the error of the "fast" sine described above against a Taylor polynomial of equal degree.

Plot of the error of the "fast" sine described above against a Taylor polynomial of equal degree.