I recorded a video with a blue screen, and we have software to convert that video to a transparent background. What's the best way to play this video overlaid on a custom UIView? Every time I've seen video play on the iPhone, it launches that player interface. Is there any way to avoid this?

8 Answers

I don't know if anyone besides me is still interested in this, but I'm using GPUImage and its chroma key filter to achieve this: https://github.com/BradLarson/GPUImage

EDIT: example code of what I did (may be dated now):

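```objc
-(void)AnimationGo:(GPUImageView*)view {
    NSURL *url = [[NSBundle mainBundle] URLForResource:@"test" withExtension:@"mov"];

    // movieFile and filter are not declared here, so they are presumably
    // instance variables that keep the pipeline alive during playback.
    movieFile = [[GPUImageMovie alloc] initWithURL:url];

    // Note (see the comments): GPUImageChromaKeyBlendFilter expects two inputs;
    // only the movie is attached here.
    filter = [[GPUImageChromaKeyBlendFilter alloc] init];
    [movieFile addTarget:filter];

    GPUImageView* imageView = (GPUImageView*)view;

    // Clear, non-opaque output view so the views underneath show through.
    [imageView setBackgroundColorRed:0.0 green:0.0 blue:0.0 alpha:0.0];
    imageView.layer.opaque = NO;

    [filter addTarget:imageView];
    [movieFile startProcessing];

    // To loop. setCompletionBlock: is not part of stock GPUImageView; it came
    // from the changes the author made to GPUImage.
    [imageView setCompletionBlock:^{
        [movieFile removeAllTargets];
        [self AnimationGo:view];
    }];
}
```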

I may have had to modify GPUImage a bit, and it may not work with the latest version of GPUImage, but that's what we used.


- Very. I had to make a few changes to GPUImage so that it would loop, but the rest was pretty much drop-in. – Matthew Clark Jul 27 '12 at 17:08

- Can you play the video and apply the filter at the same time (in other words, a real-time transformation)? – zakdances Jul 27 '12 at 19:22

- Yeah, it uses the GPU through OpenGL ES to filter everything, so as long as you're only applying one or two filters it's VERY responsive. – Matthew Clark Jul 28 '12 at 01:37

- And you are able to see the UIView below the transparent parts of the chroma-keyed video? Could you post some sample code? I've been stuck on this for days. – Etienne678 Dec 19 '12 at 16:26

- Could you explain what you did to implement `imageView setCompletionBlock`? – thomers Nov 05 '15 at 16:35

- This answer is missing a key detail: you need to create a transparent `GPUImagePicture` (e.g. from a transparent PNG) to blend with `GPUImageMovie`, since `GPUImageChromaKeyBlendFilter` expects two inputs. – Jason Moore Jun 07 '16 at 20:28

- I imagine a good bit has changed since 2012. If you're reading this, please check the current GPUImage docs for details. – Matthew Clark Jun 07 '16 at 23:41

- Here's an answer that uses a transparent `GPUImagePicture`: https://stackoverflow.com/questions/33244347/video-with-gpuimagechromakeyfilter-has-tint-when-played-in-transparent-gpuimagev – Andrey Gordeev May 25 '17 at 07:44
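Jason Moore's comment names the missing piece: `GPUImageChromaKeyBlendFilter` takes two inputs, so the movie needs a transparent second image to blend against (the answer Andrey Gordeev links uses the same idea). Below is a rough, untested sketch of that setup against the GPUImage 1.x Objective-C API. It is not the answer author's code: the class name and the `"transparent"` image are hypothetical, the threshold values are starting guesses, and it does not loop (the original relied on a modified GPUImage for that).

```objc
#import "GPUImage.h" // or <GPUImage/GPUImage.h>, depending on how GPUImage is installed

// Hypothetical class: keeps strong references to the GPUImage sources and
// filter so they are not deallocated while the movie is playing.
@interface ChromaKeyOverlay : NSObject
@property (nonatomic, strong) GPUImageMovie *movie;
@property (nonatomic, strong) GPUImagePicture *clearPicture;
@property (nonatomic, strong) GPUImageChromaKeyBlendFilter *filter;
- (BOOL)playMovieAtURL:(NSURL *)url inView:(GPUImageView *)view;
@end

@implementation ChromaKeyOverlay

- (BOOL)playMovieAtURL:(NSURL *)url inView:(GPUImageView *)view {
    // "transparent" is a hypothetical, fully transparent PNG in the app bundle.
    UIImage *clearImage = [UIImage imageNamed:@"transparent"];
    if (clearImage == nil) {
        return NO;
    }

    self.movie = [[GPUImageMovie alloc] initWithURL:url];
    self.movie.playAtActualSpeed = YES; // play in real time, not as fast as possible
    self.clearPicture = [[GPUImagePicture alloc] initWithImage:clearImage];

    self.filter = [[GPUImageChromaKeyBlendFilter alloc] init];
    [self.filter setColorToReplaceRed:0.0 green:0.0 blue:1.0]; // key out blue
    self.filter.thresholdSensitivity = 0.4; // starting guesses; tune for your footage
    self.filter.smoothing = 0.1;

    // Order matters: the first target added becomes the filter's first input
    // (the image whose key color is replaced); the second supplies the replacement.
    [self.movie addTarget:self.filter];
    [self.clearPicture addTarget:self.filter];
    [self.filter addTarget:view];

    // Clear, non-opaque output view so the UIViews underneath show through.
    [view setBackgroundColorRed:0.0 green:0.0 blue:0.0 alpha:0.0];
    view.layer.opaque = NO;

    [self.movie startProcessing];
    [self.clearPicture processImage];
    return YES;
}

@end
```

Keep the `ChromaKeyOverlay` instance itself alive (for example in a view controller property) for as long as the video plays.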

You'll need to build a custom player using AVFoundation.framework and then use a video with an alpha channel. The AVFoundation framework allows much more robust handling of video, without many of the limitations of the MediaPlayer framework. Building a custom player isn't as hard as people make it out to be. I've written a tutorial on it here: http://www.sdkboy.com/?p=66
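A minimal sketch of the AVFoundation route, assuming a movie bundled with the app (the helper name and file names are hypothetical). It only shows how to get video into your own view without the player interface. As Till's comment points out, AVFoundation did not play alpha-channel video out of the box at the time, so whether the keyed areas actually come out transparent depends on the file format and OS version you target.

```objc
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: plays a bundled movie inside `container` with no
// player chrome. Returns nil if the file is missing from the bundle.
static AVPlayer *PlayMovieInView(UIView *container, NSString *name, NSString *extension) {
    NSURL *url = [[NSBundle mainBundle] URLForResource:name withExtension:extension];
    if (url == nil) {
        return nil;
    }

    AVPlayer *player = [AVPlayer playerWithURL:url];
    // AVPlayerLayer only renders frames; it has no controls of its own.
    AVPlayerLayer *playerLayer = [AVPlayerLayer playerLayerWithPlayer:player];
    playerLayer.frame = container.bounds;
    playerLayer.videoGravity = AVLayerVideoGravityResizeAspect;

    // Keep the layer and its host view non-opaque so that any transparent
    // pixels in the decoded frames let the views underneath show through.
    playerLayer.backgroundColor = [UIColor clearColor].CGColor;
    container.backgroundColor = [UIColor clearColor];
    container.opaque = NO;

    [container.layer addSublayer:playerLayer];
    [player play];
    return player; // keep this to pause, seek or loop later
}
```

For example, `self.player = PlayMovieInView(self.overlayView, @"test", @"mov");` where `overlayView` is a hypothetical view placed over your custom UIView.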


- Link dead, so the answer became worthless... apart from the fact that AVFoundation never supported alpha-channel playback out of the box. – Till Jul 26 '13 at 11:28

I'm assuming what you're actually trying to do is remove the blue screen from your video in real time: you'll need to play the video through OpenGL, run pixel shaders on the frames, and finally render everything using an OpenGL layer with a transparent background.

See the WWDC 2011 session "Capturing from the Camera using AV Foundation on iOS 5", which explains techniques for doing exactly that (watch the chroma key demo at 9:00). Presumably the source code can be downloaded, but I can't find the link right now.
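To make the pixel shader step concrete, here is a CPU reference sketch of the per-pixel math, written in plain C (which is also valid Objective-C) rather than GLSL. It is not the WWDC sample's or GPUImage's shader: the function names are hypothetical, and the specific choices (BT.601 luma weights, unscaled R - Y / B - Y chroma, and comparing the squared chroma distance against `threshold` and `threshold + smoothing` to skip a square root per pixel) are assumptions. A shader would run the same computation once per fragment on the GPU.

```objc
// Hypothetical CPU reference for the math a chroma key pixel shader performs.
// Plain C, so it also compiles as Objective-C.

// Hermite ramp like GLSL smoothstep: 0 below edge0, 1 above edge1.
static float ck_smoothstep(float edge0, float edge1, float x) {
    if (edge1 <= edge0) {
        return x < edge0 ? 0.0f : 1.0f; // degenerate ramp: hard cutoff
    }
    float t = (x - edge0) / (edge1 - edge0);
    if (t < 0.0f) t = 0.0f;
    if (t > 1.0f) t = 1.0f;
    return t * t * (3.0f - 2.0f * t);
}

// Returns the alpha (0 = keyed out, 1 = kept) for one RGB pixel with
// components in [0, 1]. The thresholds apply to the squared chroma distance.
static float chroma_key_alpha(float r, float g, float b,
                              float key_r, float key_g, float key_b,
                              float threshold, float smoothing) {
    float y = 0.299f * r + 0.587f * g + 0.114f * b;
    float key_y = 0.299f * key_r + 0.587f * key_g + 0.114f * key_b;
    float dcr = (r - y) - (key_r - key_y); // red color-difference delta
    float dcb = (b - y) - (key_b - key_y); // blue color-difference delta
    float dist2 = dcr * dcr + dcb * dcb;
    return ck_smoothstep(threshold, threshold + smoothing, dist2);
}

// Applies the key in place to a tightly packed RGBA8 buffer by scaling
// each pixel's existing alpha.
static void chroma_key_rgba8(unsigned char *pixels, int pixel_count,
                             float key_r, float key_g, float key_b,
                             float threshold, float smoothing) {
    for (int i = 0; i < pixel_count; i++) {
        unsigned char *p = pixels + 4 * i;
        float a = chroma_key_alpha(p[0] / 255.0f, p[1] / 255.0f, p[2] / 255.0f,
                                   key_r, key_g, key_b, threshold, smoothing);
        p[3] = (unsigned char)(p[3] * a + 0.5f);
    }
}
```

With a blue key (0, 0, 1), `threshold` 0.16 and `smoothing` 0.09 (a ramp over chroma distances 0.4 to 0.5), pure blue pixels come out fully transparent and pure red pixels fully opaque; real footage needs tuning.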


GPUImage would work, but it is not perfect, because the iOS device is not the place to do your video processing. You should do all your processing on the desktop using a professional video tool that handles chroma key, then export a video with an alpha channel. Then import that video into your iOS application bundle as described at playing-movies-with-an-alpha-channel-on-the-ipad. You can avoid a lot of quality and load-time issues by making sure your video is properly converted into an alpha channel video before it is loaded onto the iOS device.


- We could debate quality, but in terms of performance, the chroma keying operations in GPUImage are all simple OpenGL ES shaders. They run at 60 FPS for all but the largest videos, even on older iOS devices. Their overhead is negligible compared to the video decoding time. How does AVAnimator compare to the hardware-accelerated decoding in AV Foundation when it comes to playback performance? – Brad Larson Jun 25 '13 at 22:43

- The problem with chroma keying arises when there are problems with the source video, like these: http://www.sonycreativesoftware.com/solving_tough_keying_problems_in_vegas_software. If we lived in a perfect world, all video would be perfect and there would never be issues. But doing your video processing on the graphics card makes it really hard to detect when there are problems. It may look okay on one iPhone model but awful on the iPad. My point is that these problems have already been solved on the desktop with professional video packages. – MoDJ Jun 25 '13 at 23:39

- As far as performance goes, AVAnimator just does a blit from mapped memory, or a load from mapped memory into a texture without a transfer to the graphics card (CoreGraphics implements this), so it can also reach 60 FPS. H.264 decoding can actually take longer to start than AVAnimator, but AVAnimator has to decode from a 7zip project resource first, so it really depends on how the app is set up. – MoDJ Jun 25 '13 at 23:45

- http://stackoverflow.com/questions/3410003/iphone-smooth-transition-from-one-video-to-another/17138580#17138580 – MoDJ Jun 25 '13 at 23:48

- It is true that GPUImage has a real performance advantage. Older iOS devices had much smaller screens, so the W x H size of a framebuffer was not that large. But on more recent iOS hardware, like an iPad with a 2048 x 1536 display, the hardware simply cannot memcpy() that much data fast enough to blit full-screen video at 30 FPS. Even so, the quality of the green screen approach in GPUImage is still an issue: http://www.modejong.com/blog/post18_green_screen/ – MoDJ Dec 02 '15 at 23:57

The only way to avoid the player interface is to roll your own video player, which is pretty difficult to do right. You can insert a custom overlay on top of the player interface to make it look like the user is still in your app, but you don't actually have control of the view. You might want to try playing your transparent video in the player interface to see whether it shows up as transparent. Also check whether the player has a background color property; you would want to set that to transparent too.

--Mike

I'm not sure the iPhone APIs will let you have a movie view over the top of another view and still have transparency.


You can't avoid launching the player interface if you want to use the built-in player.

Here's what I would try:

- get the new window that is created by MPMoviePlayerController (see here)

- explore the view hierarchy in that window, using [window subviews] and [view subviews]

- try to figure out which one of those views is the actual player view

- try to insert a view BEHIND the player view (sendSubviewToBack)

- find out if the player supports transparency or not.

I don't think you can do much better than this without writing your own player, and I have no idea if this method would work or not.
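The exploration steps in the list above can be sketched like this, assuming you already have a reference to the window or view that MPMoviePlayerController creates (how you obtain it is left open, and the helper names are hypothetical). It only logs the hierarchy and shows the UIKit calls for slotting your own view beneath another; whether anything then shows through the player view is exactly the open question.

```objc
#import <UIKit/UIKit.h>

// Hypothetical helper: recursively logs a view hierarchy, one line per view,
// indented by depth, to help spot which subview actually draws the video.
static void LogViewHierarchy(UIView *view, NSUInteger depth) {
    NSString *indent = [@"" stringByPaddingToLength:depth * 2
                                         withString:@" "
                                    startingAtIndex:0];
    NSLog(@"%@%@ frame=%@ opaque=%d", indent, NSStringFromClass([view class]),
          NSStringFromCGRect(view.frame), (int)view.opaque);
    for (UIView *subview in view.subviews) {
        LogViewHierarchy(subview, depth + 1);
    }
}

// Hypothetical helper: once you have picked out the player view, put your own
// view directly behind it in the same superview and make the player view
// non-opaque so any transparency could show through.
static void InsertViewBehindPlayerView(UIView *myView, UIView *playerView) {
    [playerView.superview insertSubview:myView belowSubview:playerView];
    playerView.backgroundColor = [UIColor clearColor];
    playerView.opaque = NO;
}
```

To dump everything, loop over `[UIApplication sharedApplication].windows` and call `LogViewHierarchy(window, 0)` on each. The sketch uses `insertSubview:belowSubview:` rather than `sendSubviewToBack:` so your view sits directly under the player view instead of at the very back of the superview.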

Depending on the size of your videos and what you're trying to do, you could also try messing around with animated GIFs.

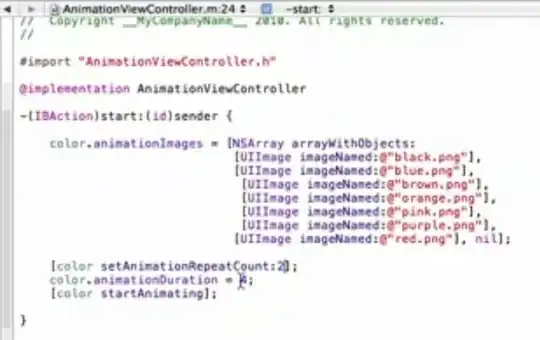

If you could extract the frames from your video and save them as images, you could reproduce the video by swapping the images in sequence. The image above shows an example of how you could play back your images so that they look like a video.

In the image I uploaded, the images have different names, but if you name your images frame1, frame2, frame3, ..., you could load them inside a loop.

I've never tried it; I just know it works for simple animations. Hope it works.
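The frame-by-frame idea maps onto UIImageView's built-in animation support. A minimal sketch, assuming the frames were exported as PNGs that keep their alpha channel and added to the bundle as frame1.png, frame2.png, ... (the naming scheme suggested above; the helper names and the frame rate parameter are hypothetical).

```objc
#import <UIKit/UIKit.h>

// Hypothetical helper: builds the names frame1, frame2, ... frameN.
static NSArray<NSString *> *FrameNames(NSUInteger count) {
    NSMutableArray<NSString *> *names = [NSMutableArray arrayWithCapacity:count];
    for (NSUInteger i = 1; i <= count; i++) {
        [names addObject:[NSString stringWithFormat:@"frame%lu", (unsigned long)i]];
    }
    return names;
}

// Hypothetical helper: plays the frames as a looping animation in a clear
// UIImageView added on top of `container`. Frames with transparent pixels
// let the views underneath show through. `fps` must be greater than 0.
static UIImageView *AddFrameAnimation(UIView *container, NSUInteger frameCount, double fps) {
    NSMutableArray<UIImage *> *frames = [NSMutableArray arrayWithCapacity:frameCount];
    for (NSString *name in FrameNames(frameCount)) {
        UIImage *image = [UIImage imageNamed:name];
        if (image != nil) { // skip frames missing from the bundle
            [frames addObject:image];
        }
    }

    UIImageView *imageView = [[UIImageView alloc] initWithFrame:container.bounds];
    imageView.backgroundColor = [UIColor clearColor];
    imageView.opaque = NO;
    imageView.animationImages = frames;
    imageView.animationDuration = frames.count / fps; // total time for one pass
    imageView.animationRepeatCount = 0;               // 0 = repeat forever
    [container addSubview:imageView];
    [imageView startAnimating];
    return imageView;
}
```

All frames are loaded up front and kept alive for the life of the animation, so memory use grows with clip length, which is why this suits short, simple animations better than long videos.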
