I am seeing something odd with storing doubles in a dictionary, and am confused as to why.

Here's the code:

    Dictionary<string, double> a = new Dictionary<string, double>();
    a.Add("a", 1e-3);

    if (1.0 < a["a"] * 1e3)
        Console.WriteLine("Wrong");

    if (1.0 < 1e-3 * 1e3)
        Console.WriteLine("Wrong");

The second if statement works as expected: 1.0 is not less than 1.0. The first if statement, however, evaluates to true. The very odd thing is that when I hover over the condition in the debugger, the tooltip reports false, yet execution happily proceeds to the Console.WriteLine.

This is for .NET 3.5 (C# 3.0) in Visual Studio 2008.

Is this a floating point accuracy problem? Then why does the second if statement work? I feel I am missing something very fundamental here.

Any insight is appreciated.

Edit2 (Re-purposing question a little bit):

I can accept the math precision problem, but my question now is: why does the hover tooltip evaluate the expression correctly? The same is true of the Immediate window. When I paste the condition from the first if statement into the Immediate window, it evaluates to false.

Update

First of all, thanks very much for all the great answers.

I am also having problems recreating this in another project on the same machine. Looking at the project settings, I see no differences. Looking at the IL between the projects, I see no differences. Looking at the disassembly, I see no apparent differences (besides memory addresses). Yet when I debug the original project, I see:

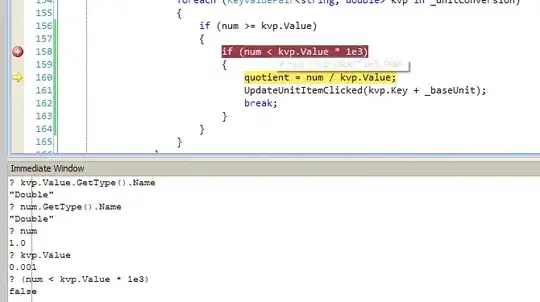

The Immediate window tells me the condition is false, yet execution enters the body of the if.

At any rate, the best answer, I think, is to prepare for floating point arithmetic in these situations. The reason I couldn't let this go has more to do with the debugger's calculations differing from the runtime's. So thanks very much to Brian Gideon and stephentyrone for some very insightful comments.
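
For anyone landing here later, this is a minimal sketch of what "preparing for floating point arithmetic" might look like in my case: compare with a relative tolerance instead of a bare less-than. The class name FloatCompare, the helper DefinitelyLess, and the tolerance 1e-9 are my own illustrative choices, not framework APIs, and the right tolerance depends on the data involved.

```csharp
using System;
using System.Collections.Generic;

public static class FloatCompare
{
    // Hypothetical helper: returns true only when x is smaller than y by more
    // than relTol times the larger magnitude of the two values.
    public static bool DefinitelyLess(double x, double y, double relTol)
    {
        double scale = Math.Max(Math.Abs(x), Math.Abs(y));
        return (y - x) > relTol * scale;
    }
}

public static class Program
{
    public static void Main()
    {
        Dictionary<string, double> a = new Dictionary<string, double>();
        a.Add("a", 1e-3);

        double product = a["a"] * 1e3;

        // A difference of a few units in the last place is far below the
        // assumed tolerance of 1e-9, so this branch is not taken.
        if (FloatCompare.DefinitelyLess(1.0, product, 1e-9))
            Console.WriteLine("Wrong");
        else
            Console.WriteLine("Equal within tolerance");
    }
}
```

With this comparison, the result no longer depends on whether the product comes out as exactly 1.0 or as a value a hair above it.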