I am using pandas.DataFrame in multi-threaded code (actually a custom subclass of DataFrame called Sound). I have noticed a memory leak: the memory usage of my program grows gradually over about 10 minutes, until it reaches ~100% of my computer's memory and the program crashes.

I used objgraph to try to track down this leak, and found that the number of instances of MyDataFrame keeps going up when it shouldn't: every thread creates an instance in its run method, does some calculations, saves the result to a file and exits, so no references should be kept.
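To make the check concrete, here is a minimal sketch of the kind of instance counting I mean. It uses only the standard-library `gc` module rather than objgraph (as far as I understand, `objgraph.count` does roughly the same thing by type name), and the `Sound` class, `worker` and `run_workers` below are simplified, hypothetical stand-ins for my real code, not the real code itself.

```python
import gc
import threading


class Sound:
    """Hypothetical stand-in for my DataFrame subclass; it just holds some data."""

    def __init__(self, n):
        self.samples = list(range(n))


def count_instances(cls):
    """Count objects whose exact type is cls among those tracked by the collector."""
    gc.collect()  # free unreachable reference cycles first, so only live objects remain
    return sum(1 for obj in gc.get_objects() if type(obj) is cls)


def worker():
    # Mimics the thread body: create an instance, compute something, then exit.
    sound = Sound(1000)
    sum(sound.samples)


def run_workers(n_threads=8):
    """Start n_threads workers and wait for all of them to finish."""
    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


if __name__ == "__main__":
    run_workers()
    # If nothing keeps a reference, no Sound instance should survive the threads.
    print("live Sound instances:", count_instances(Sound))
```

In this toy version the count goes back to zero once the threads have finished; in my real program the equivalent count keeps growing.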

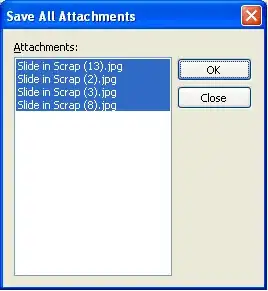

Using objgraph I found that all the data frames in memory have a similar reference graph:

I have no idea whether that's normal or not, but it looks like this is what is keeping my objects in memory. Any ideas, advice, or insight?
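In case it helps anyone reproduce this kind of inspection without objgraph, below is a rough sketch of listing what refers directly to a leaked object, using the standard-library `gc.get_referrers`. The `Sound` class and the `registry` list are hypothetical and only illustrate one way an object can be kept alive; I am not claiming this is the cause in my program.

```python
import gc


class Sound:
    """Hypothetical stand-in for my DataFrame subclass."""


# Hypothetical module-level container that accidentally keeps instances alive.
registry = []


def describe_referrers(obj):
    """Return the sorted type names of the objects that refer directly to obj."""
    gc.collect()  # discard unreachable cycles so they do not show up as referrers
    return sorted({type(ref).__name__ for ref in gc.get_referrers(obj)})


if __name__ == "__main__":
    sound = Sound()
    registry.append(sound)
    # The result includes the list holding the instance, plus whatever else
    # currently references it (for example the namespace holding `sound`).
    print(describe_referrers(sound))
```

This only shows direct referrers, one level deep, whereas the objgraph graph follows the chain of references further back.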