(lensum - ldist) / lensum

ldist is not the Levenshtein distance; it is the sum of the edit costs.

Each number in the matrix that is not a match comes from the cell above, the cell to the left, or the diagonal cell.

If the number comes from the left, it is an insertion; if it comes from above, it is a deletion; if it comes from the diagonal, it is a replacement.

Insertion and deletion each cost 1, and a replacement (substitution) costs 2.

The replacement cost is 2 because it counts as a deletion followed by an insertion.

For "ab" and "ac" the cost is 2 because the single edit is a replacement.
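The cost rules above can be sketched in pure Python. This is only an illustration of the rules as described here, not the library's actual implementation; the names `weighted_distance` and `ratio` are hypothetical, and returning 1.0 for two empty strings is an assumption.

```python
def weighted_distance(s, t, sub_cost=2):
    """Edit cost with insertion/deletion = 1 and replacement = sub_cost."""
    # prev holds the previous matrix row; row 0 is "insert j characters".
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, start=1):
        curr = [i]  # column 0 is "delete i characters"
        for j, ct in enumerate(t, start=1):
            diagonal = prev[j - 1] + (0 if cs == ct else sub_cost)  # match or replacement
            above = prev[j] + 1       # deletion
            left = curr[j - 1] + 1    # insertion
            curr.append(min(diagonal, above, left))
        prev = curr
    return prev[-1]


def ratio(s, t):
    """(lensum - ldist) / lensum, with ldist the weighted cost."""
    lensum = len(s) + len(t)
    if lensum == 0:
        return 1.0  # assumption: two empty strings count as identical
    return (lensum - weighted_distance(s, t)) / lensum


print(weighted_distance("ab", "ac"))  # 2
print(ratio("ab", "ac"))              # 0.5
```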

>>> import Levenshtein as lev

>>> lev.distance("ab","ac")

1

>>> lev.ratio("ab","ac")

0.5

>>> (4.0-1.0)/4.0 # Wrong: the distance is 1, but the cost is 2 because the edit is a replacement

0.75

>>> lev.ratio("ab","a")

0.6666666666666666

>>> lev.distance("ab","a")

1

>>> (3.0-1.0)/3.0 # Coincidence: the only edit is a deletion, whose cost of 1 equals the distance

0.6666666666666666

>>> x="ab"

>>> y="ac"

>>> lev.editops(x,y)

[('replace', 1, 1)]

>>> ldist = sum(2 if op == 'replace' else 1 for op, _, _ in lev.editops(x, y))

>>> ldist

2

>>> ln=len(x)+len(y)

>>> ln

4

>>> (4.0-2.0)/4.0

0.5
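The same recomputation can be wrapped in a small helper. This is a sketch: `cost_from_editops` and `ratio_from_editops` are hypothetical names, and the operations are passed in as plain tuples (copied from the `lev.editops` output shown here) so the snippet runs without the library. One caveat: `editops` reports an edit script that is minimal when every operation costs 1, so for some string pairs the cost recomputed this way may be higher than the true weighted cost.

```python
def cost_from_editops(ops):
    """Sum the costs: 2 for each 'replace', 1 for each 'insert' or 'delete'."""
    return sum(2 if op == "replace" else 1 for op, _, _ in ops)


def ratio_from_editops(ops, len_x, len_y):
    """(lensum - ldist) / lensum, with ldist recomputed from the edit operations."""
    lensum = len_x + len_y
    return (lensum - cost_from_editops(ops)) / lensum


# Edit operations as printed by lev.editops("ab", "ac") above.
ops = [("replace", 1, 1)]
print(cost_from_editops(ops))                        # 2
print(ratio_from_editops(ops, len("ab"), len("ac")))  # 0.5
```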

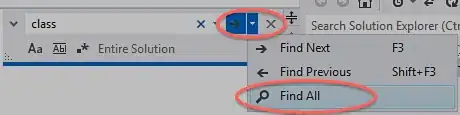

For more information: python-Levenshtein ratio calculation

Another example:

The cost is 9 (4 replacements => 4*2=8, and 1 deletion => 1*1=1; 8+1=9)

str1=len("google") #6

str2=len("look-at") #7

str1 + str2 #13

distance = 5 (the value in cell (7, 6) of the matrix)

ratio is (13-9)/13 = 0.3076923076923077
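To see where the 5 comes from, the ordinary matrix (every operation costs 1) can be built by hand. This is a minimal sketch with a hypothetical helper `levenshtein_matrix`; rows follow "look-at" and columns follow "google", which is an assumption about how the matrix in the picture is laid out.

```python
def levenshtein_matrix(s, t):
    """Full edit-distance matrix where insert, delete and replace all cost 1."""
    rows, cols = len(s) + 1, len(t) + 1
    m = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        m[i][0] = i  # delete i characters of s
    for j in range(cols):
        m[0][j] = j  # insert j characters of t
    for i in range(1, rows):
        for j in range(1, cols):
            sub = 0 if s[i - 1] == t[j - 1] else 1
            m[i][j] = min(
                m[i - 1][j - 1] + sub,  # diagonal: match or replacement
                m[i - 1][j] + 1,        # above: deletion
                m[i][j - 1] + 1,        # left: insertion
            )
    return m


m = levenshtein_matrix("look-at", "google")
print(m[7][6])  # 5, the plain distance (not the cost used by ratio)
```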

>>> c="look-at"

>>> d="google"

>>> lev.editops(c,d)

[('replace', 0, 0), ('delete', 3, 3), ('replace', 4, 3), ('replace', 5, 4), ('replace', 6, 5)]

>>> lev.ratio(c,d)

0.3076923076923077

>>> lev.distance(c,d)

5