I've spent the last week porting a recursive branch-and-cut algorithm from MATLAB to C++, hoping to see a significant decrease in solution time. As incredible as it may sound, the opposite happened: the C++ version is slower. I am not really an expert in C++, so I downloaded the Sleepy profiler and tried to find potential bottlenecks. I'd like to ask whether I am drawing the right conclusions from its output, or whether I am looking in completely the wrong direction.

I let the code run for 137 seconds, and this is what the profiler displays (there are many more entries below these, but their share of the time is negligible):

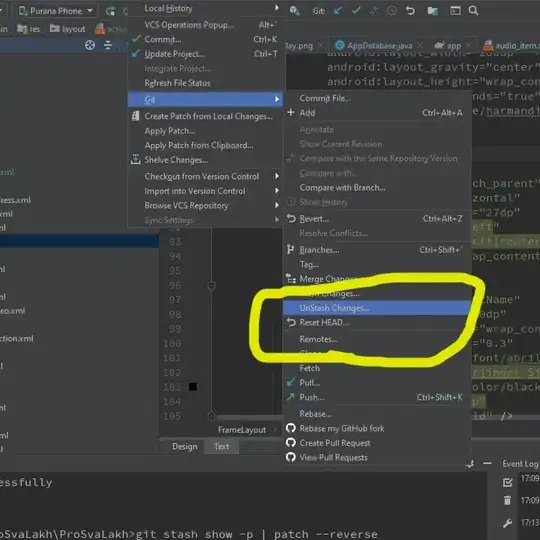

So if I get this right, 98 seconds were spent creating new objects, and 34 seconds were spent freeing up memory (i.e. deleting objects).

I will go through my code and look for places where I could do things better, but I also wanted to ask whether you have any hints on frequent mistakes or bad habits that cause this kind of behavior. The one thing that comes to mind is that I use a lot of temporary std::vectors for intermediate computations, so that might be slow.
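To make concrete what I mean by temporary vectors, here is a minimal sketch of the pattern. This is not my actual code: the function names `sum_scaled_alloc` and `sum_scaled_reuse` and the computation itself are made up for illustration. As far as I understand, the first version pays for a heap allocation and a deallocation on every call, while the second reuses a caller-owned scratch buffer.

```cpp
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical example, not taken from my real code.

// Version A: builds a fresh temporary vector on every call.
// Each call allocates heap memory for tmp and frees it again on return.
double sum_scaled_alloc(const std::vector<double>& x, double factor) {
    std::vector<double> tmp(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        tmp[i] = x[i] * factor;
    }
    return std::accumulate(tmp.begin(), tmp.end(), 0.0);
}

// Version B: the caller passes in a scratch buffer that lives across calls.
// clear() keeps the existing capacity, so once the buffer has grown to the
// needed size, later calls with inputs of that size do not allocate again.
double sum_scaled_reuse(const std::vector<double>& x, double factor,
                        std::vector<double>& scratch) {
    scratch.clear();
    scratch.reserve(x.size());
    for (double v : x) {
        scratch.push_back(v * factor);
    }
    return std::accumulate(scratch.begin(), scratch.end(), 0.0);
}
```

If something like version A sits inside the recursion and gets called millions of times, I could imagine it accounting for a large share of the time spent in allocation and deallocation, but that is only my guess at this point.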

To keep you from going blind, I won't post my code before I've looked through it a bit, but if I can't solve this on my own I'll be back.