This is a follow-up to my previous question about downloading files in chunks. The explanation is fairly long, so I've divided it into several parts.

1) What was I trying to do?

I am building a download manager for a Windows Phone application. First, I tackled the problem of downloading large files (explained in the previous question). Now I want to add a "resumable download" feature.

2) What I've already done.

At the moment I have a working download manager that gets around the Windows Phone RAM limit. The idea is that it downloads the file in small chunks, one after another, using the HTTP Range header.
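For reference, here is a minimal sketch of the byte range requested for the i-th chunk. It assumes chunks are numbered from 0 and that every chunk except possibly the last is exactly `delta` bytes. HTTP `Range` byte positions are inclusive at both ends, so chunk i covers bytes `i*delta` through `(i+1)*delta - 1`. The class and method names are hypothetical.

```csharp
using System;

public static class ChunkRanges
{
    // Hypothetical helper: builds the Range header value for chunk `index`,
    // assuming every chunk except possibly the last is exactly `delta` bytes.
    // HTTP byte ranges are inclusive at both ends, hence the "- 1".
    public static string ChunkRange(long index, long delta)
    {
        if (index < 0) throw new ArgumentOutOfRangeException(nameof(index));
        if (delta <= 0) throw new ArgumentOutOfRangeException(nameof(delta));

        long start = index * delta;
        long end = start + delta - 1;
        return "bytes=" + start + "-" + end;
    }
}
```

With delta = 304160, chunk 0 is `bytes=0-304159` and chunk 8 is `bytes=2433280-2737439`.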

A quick explanation of how it works:

The file is downloaded in chunks of constant size; let's call this size "delta". After a chunk has been downloaded, it is saved to local storage (persistent storage, which on WP is called Isolated Storage) in Append mode, so the downloaded byte array is always added to the end of the file. After each chunk is downloaded, the condition

    if (mediaFileLength >= delta) // mediaFileLength is the length of the downloaded chunk

is checked. If it is true, there is (potentially) more to download, and the method invokes itself recursively for the next chunk. Otherwise this chunk was the last one and there is nothing left to download.
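The loop described above can be sketched in a self-contained way like this, written iteratively rather than recursively. The HTTP request is replaced by a hypothetical `FetchChunk` stand-in that slices an in-memory array. A `Stream` stands in for the Isolated Storage file opened in Append mode. Only the stopping rule (`mediaFileLength >= delta` means "keep going") is taken from the text.

```csharp
using System;
using System.IO;

public static class ChunkedDownloadSketch
{
    // Hypothetical stand-in for the HTTP request: returns the bytes a server would
    // send for "Range: bytes=offset-(offset+count-1)", truncated at end of file.
    public static byte[] FetchChunk(byte[] remoteFile, long offset, int count)
    {
        if (offset >= remoteFile.Length) return new byte[0];
        int length = (int)Math.Min(count, remoteFile.Length - offset);
        byte[] chunk = new byte[length];
        Array.Copy(remoteFile, offset, chunk, 0, length);
        return chunk;
    }

    // Iterative form of the recursive scheme: append each chunk to the end of
    // `target` and stop after the first chunk shorter than `delta`.
    // Returns the number of requests made.
    public static int Download(byte[] remoteFile, Stream target, int delta)
    {
        long offset = 0;
        int requests = 0;
        while (true)
        {
            byte[] chunk = FetchChunk(remoteFile, offset, delta);
            requests++;
            target.Seek(0, SeekOrigin.End);          // emulate FileMode.Append
            target.Write(chunk, 0, chunk.Length);
            offset += chunk.Length;
            if (chunk.Length < delta) break;         // i.e. NOT (mediaFileLength >= delta)
        }
        return requests;
    }
}
```

One consequence of this rule: when the file size is an exact multiple of delta, the last full chunk still satisfies `mediaFileLength >= delta`, so one extra request is made. In this simulation that request returns an empty array. A real server will typically answer `416 Range Not Satisfiable` instead, so that case needs handling.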

3) What's the problem?

As long as I used this logic for one-time downloads (by one-time I mean you start downloading a file and wait until the download has finished), it worked well. However, I decided that I need a "resume download" feature. So, the facts:

3.1) I know that the chunk size is constant.

3.2) I know whether or not the file has been completely downloaded. (That's an indirect result of my app logic; I won't weary you with the explanation, just assume that this is a fact.)

Given these two facts, I can prove that the number of downloaded chunks equals CurrentFileLength / delta, where CurrentFileLength is the size in bytes of the already-downloaded file.
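The reasoning from 3.1 and 3.2 can be written out as follows. It relies on an assumption that is implicit in the text: a download is only ever interrupted between two complete appends, never in the middle of writing a chunk. With $L$ the stored file length, $\delta$ the chunk size and $k$ the number of whole chunks:

$$
L = k\,\delta + r, \qquad k = \left\lfloor \frac{L}{\delta} \right\rfloor, \qquad 0 \le r = L \bmod \delta < \delta
$$

For an unfinished download every stored chunk has exactly $\delta$ bytes, so $r = 0$ and $k = L/\delta$ exactly. A nonzero remainder $r$ therefore means either that the file ends in an incomplete chunk of $r$ bytes or that the assumption above does not hold. Because $k$ is a floor, a length even slightly below $8\delta$ yields $k = 7$.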

To resume downloading the file, I should simply set the required headers and invoke the download method. That seems logical, doesn't it? So I tried to implement it:

    // Check the size of what has already been downloaded
    int currentFileChunkIterator;
    using (IsolatedStorageFileStream fileStream = isolatedStorageFile.OpenFile("SomewhereInTheIsolatedStorage", FileMode.Open, FileAccess.Read))
    {
        int currentFileSize = Convert.ToInt32(fileStream.Length);  // bytes stored so far
        currentFileChunkIterator = currentFileSize / delta;        // integer division: whole chunks only
    }
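The rest of the resume step ("set the required headers") might look like the following sketch. It turns the stored length into the index of the next chunk and the Range header value for it. The helper name is hypothetical, and it relies on the same assumption as 3.1/3.2: the stored file contains only whole chunks of `delta` bytes.

```csharp
using System;

public static class ResumeSketch
{
    // Hypothetical helper for the resume step: from the length of the file already in
    // storage, work out which chunk to request next and the Range header value for it.
    // Assumes the stored file consists only of whole chunks of `delta` bytes.
    public static string NextRange(long currentFileSize, long delta, out long nextChunk)
    {
        if (currentFileSize < 0) throw new ArgumentOutOfRangeException(nameof(currentFileSize));
        if (delta <= 0) throw new ArgumentOutOfRangeException(nameof(delta));

        nextChunk = currentFileSize / delta;  // truncating division, like currentFileChunkIterator
        long start = nextChunk * delta;       // equals currentFileSize only if it is a multiple of delta
        long end = start + delta - 1;         // HTTP byte-range positions are inclusive
        return "bytes=" + start + "-" + end;
    }
}
```

For a 2433280-byte file (8 whole chunks) this yields chunk 8 and `bytes=2433280-2737439`. For a 2432000-byte file it yields chunk 7 and `bytes=2129120-2433279`. That range starts before the end of the stored data. If those bytes really are on disk, appending the response in Append mode would duplicate them. In that case the file would first have to be cut back to `start` (for example with `Stream.SetLength`), or the range would have to start at `currentFileSize` instead.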

And what do I get when I run my code? The downloaded file length is 2432000 bytes (delta is 304160; the total file size is about 4.5 MB, so only about half of it has been downloaded). So the quotient is approximately 7.996. Because the division is done on integer types (int/long), it is truncated to 7, while it should be 8! Why is this happening? Simple arithmetic says that the file length should be 8 × 304160 = 2433280 bytes, so the reported value is very close (1280 bytes short) but not equal.

Further investigation showed that none of the values returned by fileStream.Length are exact, though all of them are close.

Why is this happening? I don't know for sure. Perhaps the .Length value is taken from file metadata somewhere. Perhaps such rounding is normal for this property. Perhaps, when the download was interrupted, the file wasn't completely saved... (no, that seems really far-fetched; it can't be).

So the problem is: "How do I determine the number of chunks downloaded?" The question is how to solve it.

4) My thoughts about solving the problem.

My first thought was to use some maths here: choose an epsilon-neighborhood and apply it in the currentFileChunkIterator = currentFileSize / delta; statement.
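The epsilon-neighborhood idea can be sketched as follows. A length that falls within `epsilon` bytes below a chunk boundary is treated as if it had reached that boundary. `epsilon` is a hypothetical tuning parameter. Picking its value is exactly the false-alarm/miss trade-off discussed next.

```csharp
using System;

public static class EpsilonRounding
{
    // Sketch of the epsilon-neighbourhood idea: count whole chunks, but round up when
    // the length is within `epsilon` bytes below the next chunk boundary.
    // `epsilon` is a hypothetical tuning parameter (0 <= epsilon < delta).
    public static long ChunksWithTolerance(long fileLength, long delta, long epsilon)
    {
        if (delta <= 0) throw new ArgumentOutOfRangeException(nameof(delta));
        if (epsilon < 0 || epsilon >= delta) throw new ArgumentOutOfRangeException(nameof(epsilon));

        long whole = fileLength / delta;       // truncating division
        long remainder = fileLength % delta;   // bytes past the last whole chunk
        if (remainder != 0 && delta - remainder <= epsilon)
            whole++;                           // close enough to the next boundary: round up
        return whole;
    }
}
```

With the numbers from the question (2432000 bytes, delta 304160, 1280 bytes short of 8 chunks), an `epsilon` of 2048 gives 8 and an `epsilon` of 1024 gives 7. The risk is the one named below: a genuinely incomplete chunk that is missing fewer than `epsilon` bytes would be counted as complete.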

But that would mean dealing with type I and type II errors (false alarms and misses, if you don't like the statistics terms), for example concluding that there is nothing left to download when there actually is.

Also, I haven't checked whether the difference between the reported value and the true value keeps growing or fluctuates cyclically. With small files (about 4-5 MB) I've seen only growth, but that doesn't prove anything.

So, I'm asking for help here, as I don't like my solution.

5) What I would like to hear in an answer:

What causes the difference between the real value and the received value?

Is there a way to get the true value?

If not, is my solution a good one for this problem?

Are there better solutions?

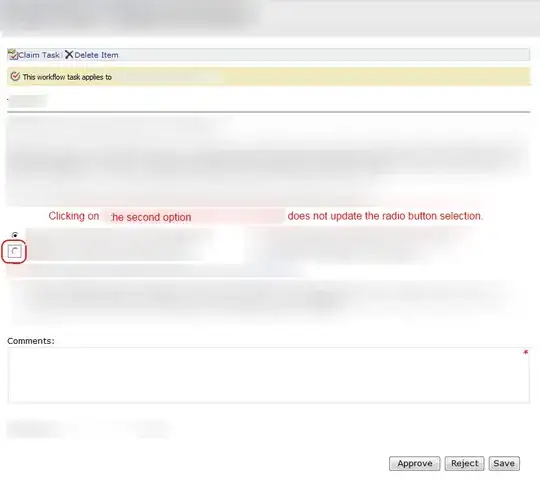

P.S. I won't set a Windows-Phone tag, because I'm not sure that this problem is OS-related. I used the Isolated Storage Tool to check the size of downloaded file, and it showed me the same as the received value(I'm sorry about Russian language at screenshot):