I have to transfer data from a DataTable to a database. Each transfer is around 15k records. I want to reduce the time spent inserting the data. Should I use SqlBulkCopy, or something else?

4 Answers

If you use C#, take a look at the SqlBulkCopy class. There is a good article on CodeProject about how to use it with a DataTable.
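As a rough illustration of that suggestion, here is a minimal sketch of pushing a DataTable to SQL Server with SqlBulkCopy. The class name, method name, parameters, and batch size are hypothetical choices for this example, and it assumes the DataTable's column names match the destination table's column names.

```csharp
using System.Data;
using System.Data.SqlClient;

static class BulkInsertExample
{
    // Hypothetical helper: connectionString and destinationTable come from the caller.
    public static void BulkInsert(DataTable table, string connectionString, string destinationTable)
    {
        using (var connection = new SqlConnection(connectionString))
        {
            connection.Open();

            using (var bulkCopy = new SqlBulkCopy(connection))
            {
                bulkCopy.DestinationTableName = destinationTable;

                // Illustrative value only; tune it for your own data and server.
                bulkCopy.BatchSize = 5000;

                // Map columns by name (assumes source and destination names match).
                foreach (DataColumn column in table.Columns)
                {
                    bulkCopy.ColumnMappings.Add(column.ColumnName, column.ColumnName);
                }

                // Streams all rows of the DataTable to the server.
                bulkCopy.WriteToServer(table);
            }
        }
    }
}
```

If the column names differ, replace the loop with explicit `ColumnMappings.Add(sourceName, destinationName)` calls for each column.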


If speed is your only goal, bulk copy might suit you well. Depending on your requirements, you can also reduce the logging level.

How to copy a huge table data into another table in SQL Server


I'm only concerned about speed. – kst Feb 14 '13 at 08:50

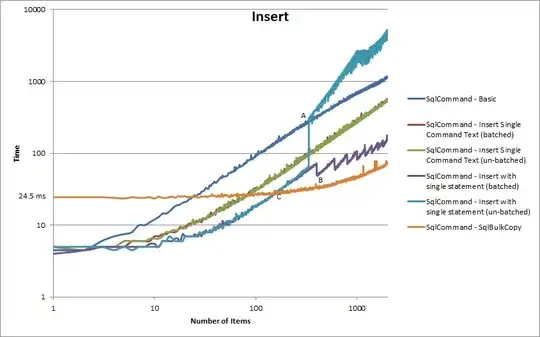

SQL bulk copy is definitely the fastest option for this kind of sample size, as it streams the data rather than using regular SQL commands. This gives it a slightly longer startup time, but it comes out ahead once you insert more than ~120 rows at a time (according to my tests).

Check out my post below if you want some stats on how SQL bulk copy stacks up against a few other methods.

http://blog.staticvoid.co.nz/2012/8/17/mssql_and_large_insert_statements


15k records is really not that many. I have a laptop with an i5 CPU and 4 GB of RAM, and it inserts 15k records in several seconds. I wouldn't spend too much time looking for an ideal solution.
