My goal is to overlay a standard UIKit view (for the moment, I'm just creating a UILabel but eventually I'll have custom content) over a detected shape using image tracking and the Vuforia AR SDK. I have something that works, but with a "fudge" term that I cannot account for. I'd like to understand where my error is, so I can either justify the existence of this correction or use a different algorithm that's known to be valid.

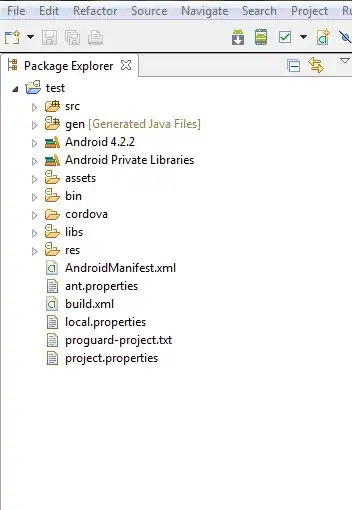

My project is based on the ImageTargets sample project in the Vuforia SDK. Where their EAGLView iterates over the results to render the OpenGL teapots, I've replaced this with a call out to my ObjC++ class TrackableObjectController. For each trackable result, it does this:

    - (void)augmentedRealityView:(EAGLView *)view foundTrackableResult:(const QCAR::TrackableResult *)trackableResult
    {
        // find out where the target is in Core Animation space
        const QCAR::ImageTarget* imageTarget = static_cast<const QCAR::ImageTarget*>(&(trackableResult->getTrackable()));
        TrackableObject *trackable = [self trackableForName: TrackableName(imageTarget)];
        trackable.tracked = YES;
        QCAR::Vec2F size = imageTarget->getSize();
        QCAR::Matrix44F modelViewMatrix = QCAR::Tool::convertPose2GLMatrix(trackableResult->getPose());
        CGFloat ScreenScale = [[UIScreen mainScreen] scale];
        float xscl = qUtils.viewport.sizeX/ScreenScale/2;
        float yscl = qUtils.viewport.sizeY/ScreenScale/2;
        QCAR::Matrix44F projectedTransform = {1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1};
        QCAR::Matrix44F qcarTransform = {1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1};
        /* this sizeFudge constant is here to put the label in the correct place in this demo; I had thought that
         * the problem was related to the units used (I defined the length of the target in mm in the Target Manager;
         * the fact that I've got to multiply by ten here could be indicative of the app interpreting length in cm).
         * That turned out not to be the case: when I changed the reported length of the target it stopped drawing the
         * label at the correct size. Currently, therefore, the app and the target database are both using mm, but
         * there's the following empirically devised fudge factor to get the app to position the correctly-sized view
         * in the correct position relative to the detected object.
         */
        const double sizeFudge = 10.0;
        ShaderUtils::translatePoseMatrix(sizeFudge * size.data[0] / 2, sizeFudge * size.data[1] / 2, 0, projectedTransform.data);
        ShaderUtils::scalePoseMatrix(xscl, -yscl, 1.0, projectedTransform.data); // flip along y axis
        ShaderUtils::multiplyMatrix(projectedTransform.data, qUtils.projectionMatrix.data, qcarTransform.data);
        ShaderUtils::multiplyMatrix(qcarTransform.data, modelViewMatrix.data, qcarTransform.data);
        CATransform3D transform = *((CATransform3D*)qcarTransform.data); // both are array[16] of float
        transform = CATransform3DScale(transform,1,-1,0); //needs flipping to draw
        trackable.transform = transform;
    }
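
To cross-check where this transform ought to put the label, one option is to project the target's centre to view coordinates by hand and compare the result with the layer's position. The following is only a sketch of the usual OpenGL pipeline (model-view, projection, perspective divide, viewport mapping), not code from the Vuforia sample. The names `Mat4`, `transformPoint` and `projectToView` and the `viewWidth`/`viewHeight` parameters are hypothetical, the matrices are assumed to be column-major, and the viewport is assumed to cover exactly the view with its origin at the view's origin.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<float, 16>;  // column-major, as OpenGL stores matrices
using Vec4 = std::array<float, 4>;

// Multiply a column-major 4x4 matrix by a column vector.
Vec4 transformPoint(const Mat4& m, const Vec4& v) {
    Vec4 r{};  // zero-initialised accumulator
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            r[row] += m[col * 4 + row] * v[col];
    return r;
}

struct ScreenPoint { float x, y; };

// Project a model-space point into view coordinates (points, origin at the
// top-left, y pointing down). ASSUMPTION: the GL viewport covers exactly the
// view; a larger or offset viewport would need an extra offset here.
ScreenPoint projectToView(const Mat4& projection, const Mat4& modelView,
                          float x, float y, float z,
                          float viewWidth, float viewHeight) {
    Vec4 model = {x, y, z, 1.0f};
    Vec4 eye   = transformPoint(modelView, model);
    Vec4 clip  = transformPoint(projection, eye);
    assert(clip[3] != 0.0f);         // cannot divide by a zero w
    float ndcX = clip[0] / clip[3];  // perspective divide, range [-1, 1]
    float ndcY = clip[1] / clip[3];
    ScreenPoint p = {(ndcX + 1.0f) * 0.5f * viewWidth,    // NDC -1..1 -> 0..width
                     (1.0f - ndcY) * 0.5f * viewHeight};  // flip y for UIKit
    return p;
}
```

Calling `projectToView` with the projection matrix, the pose matrix and the point (0, 0, 0) should give the on-screen position of the target's centre under these assumptions. Note that the mapping from normalised device coordinates to the view involves half the view size, not the target size.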

There is then other code, called on the main thread, that looks at my TrackableObject instances, applies the computed CATransform3D to the overlay view's layer and sets the overlay view as a subview of the EAGLView.

My problem, as the comment in the code sample has given away, is with this sizeFudge factor. Apart from this factor, the code I have does the same thing as this answer, but that puts my view in the wrong place.

Empirically I find that if I do not include the sizeFudge term, then my overlay view tracks the orientation and translation of the tracked object well but is offset down and to the right on the iPad screen. This is a translation difference, so it makes sense that changing the term used for the translation affects it. I first thought that the problem was the size of the object as specified in Vuforia's Target Manager. This turns out not to be the case; if I create a target ten times the size then the overlay view is drawn in the same, incorrect place but ten times smaller (as the AR assumes the object it's tracking is further away, I suppose).
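
The "ten times smaller" observation is at least consistent with a simple pinhole-camera model, which can be sketched as below. This is an illustration only: the function names `estimatedDepth` and `apparentWidth` are hypothetical, and the focal length and pixel widths in the usage note are made-up numbers, not measurements from my setup.

```cpp
#include <cassert>

// Depth at which an object of the declared physical width would have to sit
// to cover `observedPx` pixels, for a pinhole camera with focal length
// `focalPx` (in pixels). The result is in the same unit as `declaredWidth`.
float estimatedDepth(float focalPx, float declaredWidth, float observedPx) {
    assert(observedPx > 0.0f);
    return focalPx * declaredWidth / observedPx;
}

// On-screen width in pixels of something `width` units wide at `depth`.
// `width` and `depth` must use the same unit.
float apparentWidth(float focalPx, float width, float depth) {
    assert(depth > 0.0f);
    return focalPx * width / depth;
}
```

With an assumed focal length of 1000 px and a target that covers 200 px on screen, declaring the target as 100 units wide gives an estimated depth of 500 units, so a 100-unit-wide overlay covers 200 px. Declaring the same target as 1000 units wide gives a depth of 5000 units, and the same 100-unit overlay then covers only 20 px.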

It's only translating the pose here that gets me where I want to be, but this is unsatisfactory as it doesn't make any sense to me. Can anyone please explain the correct way to translate from the OpenGL coordinates supplied by Vuforia to a CATransform3D, without relying on magic numbers?

**Some more data**

The problem is more complicated than I thought when I wrote this question. The location of the label actually seems to depend on the distance from the iPad to the tracked object, though not linearly. There's also an apparent systematic error.

Here's a chart constructed by moving the iPad to a certain distance away from the target (located over the black square), and marking with a pen where the centre of the view appeared. Points above and to the left of the square have the translation fudge as described above; points below and to the right have sizeFudge==0. Hopefully the relationship between distance and offset shown here will clue in someone with more knowledge of 3D graphics than me as to what the problem with the transform is.
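
For anyone interpreting the distance dependence, here is how I understand a translation to behave under an idealised pinhole projection, depending on where in the matrix chain it is applied. This is a simplified model with an assumed focal length $f$ in pixels, not a diagnosis of the code above. A point at model-space position $x$ and depth $z$ projects to

$$u = \frac{f\,x}{z}.$$

An offset $\Delta$ added in model space, before projection, moves the projected point by an amount that falls off with distance, so it is not linear in $z$:

$$u' = \frac{f\,(x + \Delta)}{z} = u + \frac{f\,\Delta}{z}.$$

An offset $t$ applied after projection, in homogeneous clip coordinates $(x_c, w_c)$ with $u = x_c / w_c$, is multiplied by $w_c$ and then cancelled by the perspective divide, so it shifts the point by a constant amount on screen:

$$u' = \frac{x_c + t\,w_c}{w_c} = u + t.$$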