In a word, no!

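```r
library(microbenchmark)

# Written with generous whitespace and trailing semicolons
f1 <- function(x){
    j <- rnorm( x , mean = 0 , sd = 1 ) ;
    k <- j * 2 ;
    return( k )
}

# The same function with no optional whitespace at all
f2 <- function(x){j<-rnorm(x,mean=0,sd=1);k<-j*2;return(k)}

microbenchmark( f1(1e3) , f2(1e3) , times= 1e3 )
```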

```
Unit: microseconds
     expr     min       lq  median      uq      max neval
 f1(1000) 110.763 112.8430 113.554 114.319  677.996  1000
 f2(1000) 110.386 112.6755 113.416 114.151 5717.811  1000
```

```r
# Even more runs and longer sampling
microbenchmark( f1(1e4) , f2(1e4) , times= 1e4 )
```

```
Unit: milliseconds
      expr      min       lq   median       uq       max neval
 f1(10000) 1.060010 1.074880 1.079174 1.083414 66.791782 10000
 f2(10000) 1.058773 1.074186 1.078485 1.082866  7.491616 10000
```
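One way to see why the two timings match: once R has parsed a definition, the spaces and semicolons are gone, and what gets evaluated is the parse tree. The sketch below parses both spellings of the function from strings (written here for illustration) with `keep.source = FALSE`, so no source references holding the original text are attached, and then compares the resulting expressions. `same_tree` should come out `TRUE`.

```r
# The two spellings of the function from above, as plain source text
spaced <- c("function(x){",
            "    j <- rnorm( x , mean = 0 , sd = 1 ) ;",
            "    k <- j * 2 ;",
            "    return( k )",
            "}")
compact <- "function(x){j<-rnorm(x,mean=0,sd=1);k<-j*2;return(k)}"

# keep.source = FALSE: do not attach source references, which would store
# the original text (whitespace included) alongside the parsed code
tree_spaced  <- parse(text = spaced,  keep.source = FALSE)
tree_compact <- parse(text = compact, keep.source = FALSE)

# Whitespace and the trailing semicolons leave no trace in the parse tree
same_tree <- identical(tree_spaced, tree_compact)
print(same_tree)

# Deparsing the tree shows the canonical layout R actually works with
cat(deparse(tree_compact[[1]]), sep = "\n")
```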

EDIT

It could be argued that the microbenchmark comparison is unfair, because the expressions are parsed once, before they are ever run in the loop. Using `source()` instead means that on every iteration the code has to be read and parsed again, whitespace included. So I saved the functions to two separate files, each ending with a call to the function; e.g. my file f2.R looks like this:

```r
f2 <- function(x){j<-rnorm(x,mean=0,sd=1);k<-j*2;return(k)};f2(1e3)
```

And I test them like so:

```r
microbenchmark( eval(source("~/Desktop/f2.R")) , eval(source("~/Desktop/f1.R")) , times = 1e3)
```

```
Unit: microseconds
                           expr     min       lq   median      uq       max neval
 eval(source("~/Desktop/f2.R")) 649.786 658.6225 663.6485 671.772  7025.662  1000
 eval(source("~/Desktop/f1.R")) 687.023 697.2890 702.2315 710.111 19014.116  1000
```
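`source()` does more than parse: it opens and reads the file and then evaluates it, which here includes drawing 1e3 random numbers. That evaluation work is the same for both files, so only the read and parse steps should differ. A minimal sketch that times the parse step on its own, using base R's `parse(text = ...)` and `system.time()` rather than microbenchmark. The contents of f1.R are not shown above, so the version used here (the spaced definition followed by `f1(1e3)`) is an assumption, and `keep.source = FALSE` skips the source references that `source()` keeps by default in an interactive session. No particular numbers are claimed; they depend on the machine.

```r
# Text of the two files. f2.R is copied from above; the contents of f1.R
# are an assumption (the spaced definition followed by a call to f1)
f1_src <- c("f1 <- function(x){",
            "    j <- rnorm( x , mean = 0 , sd = 1 ) ;",
            "    k <- j * 2 ;",
            "    return( k )",
            "}",
            "f1(1e3)")
f2_src <- "f2 <- function(x){j<-rnorm(x,mean=0,sd=1);k<-j*2;return(k)};f2(1e3)"

# Characters the parser has to scan in each version (newlines excluded)
n_chars <- c(f1 = sum(nchar(f1_src)), f2 = sum(nchar(f2_src)))
print(n_chars)

# Time only the parse step: no file I/O and no evaluation (so no rnorm calls)
n_iter <- 1e4
parse_seconds <- function(src) {
  system.time(
    for (i in seq_len(n_iter)) parse(text = src, keep.source = FALSE)
  )[["elapsed"]]
}
per_parse_us <- c(f1 = parse_seconds(f1_src), f2 = parse_seconds(f2_src)) / n_iter * 1e6
print(per_parse_us)  # approximate microseconds per parse; varies by machine
```

Comparing the gap between the two `per_parse_us` values with the gap between the `source()` medians gives a rough idea of how much of the latter the parser itself accounts for.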

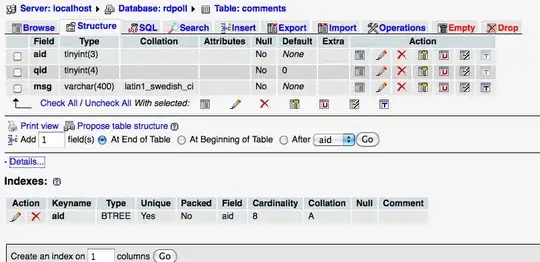

The plot above shows the difference with 1e4 replications.

So whitespace may make a minuscule difference when code is parsed repeatedly (here about 39 µs per `source()` call at the median), but that doesn't happen in normal use, where a function is parsed once and then called many times.