For example, given the list ['one', 'two', 'one'], the algorithm should return True, whereas given ['one', 'two', 'three'] it should return False.

15 Answers

Use set() to remove duplicates if all values are hashable:

    >>> your_list = ['one', 'two', 'one']
    >>> len(your_list) != len(set(your_list))
    True
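The hashability caveat matters in practice: `set()` raises `TypeError` when an item is unhashable, such as a list. A minimal sketch of the check wrapped in a function (the name `has_duplicates_hashable` is made up for illustration):

```python
def has_duplicates_hashable(items):
    """Return True if any item repeats; every item must be hashable."""
    return len(items) != len(set(items))

print(has_duplicates_hashable(['one', 'two', 'one']))    # True
print(has_duplicates_hashable(['one', 'two', 'three']))  # False

try:
    has_duplicates_hashable([[1], [2], [1]])  # lists are unhashable
except TypeError as exc:
    print("TypeError:", exc)
```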


-

Before reading this I had tried your_list != list(set(your_list)), which will not work as the order of the elements will change. Using len is a good way to solve this problem. – igniteflow May 31 '12 at 14:37

-

Often doesn't work for arrays of floating-point numbers. See https://stackoverflow.com/questions/60914705/ – Manas Dogra Mar 29 '20 at 14:44

-

The fastest way to check for or remove duplicates, according to https://www.peterbe.com/plog/fastest-way-to-uniquify-a-list-in-python-3.6 – Vincent Oct 13 '20 at 14:00

-

I think a natural way to apply this great answer to a generic hypothesis test is to use len(your_list) == len(set(your_list)) instead of != as you either accept (True) or reject (False) that they are the same length thus there are no duplicates. – Joe Emmens Feb 25 '21 at 19:27

-

This does not answer the question. The question isn't about removing duplicates but checking whether there are any. – theberzi Oct 25 '22 at 13:30

Recommended for short lists only:

any(thelist.count(x) > 1 for x in thelist)

Do not use on a long list -- it can take time proportional to the square of the number of items in the list!

For longer lists with hashable items (strings, numbers, &c):

    def anydup(thelist):
        seen = set()
        for x in thelist:
            if x in seen: return True
            seen.add(x)
        return False

If your items are not hashable (sublists, dicts, etc.), it gets hairier, though it may still be possible to get O(N log N) if they're at least comparable. But you need to know or test the characteristics of the items (hashable or not, comparable or not) to get the best performance you can: O(N) for hashables, O(N log N) for non-hashable comparables; otherwise it's down to O(N squared) and there's nothing one can do about it. :-(
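The O(N log N) case for non-hashable but comparable items is not spelled out in the answer. One way it could be done is to sort and compare neighbours, as in this sketch. The function name `anydup_sorted` is hypothetical, and it assumes the items have a total order that is consistent with `==`:

```python
def anydup_sorted(thelist):
    """Duplicate check for items that are comparable but not hashable."""
    ordered = sorted(thelist)  # O(N log N); raises TypeError if items cannot be ordered
    # After sorting, equal items sit next to each other, so one linear pass suffices.
    return any(a == b for a, b in zip(ordered, ordered[1:]))

print(anydup_sorted([[1, 2], [3], [1, 2]]))  # True
print(anydup_sorted([[1, 2], [3], [4]]))     # False
```

Unlike the set-based loop, this cannot stop early, because the whole list has to be sorted first.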


-

Denis Otkidach offered a solution where you just build a new set from the list, then check its length. Its advantage is that it's letting the C code inside Python do the heavy lifting. Your solution loops in Python code, but has the advantage of short-circuiting when a single match has been found. If the odds are that the list probably has no duplicates, I like Denis Otkidach's version, but if the odds are that there might well be a duplicate early on in the list, this solution is better. – steveha Oct 09 '09 at 05:26

-

Worth an up for the detail, even though I think Denis had the neater solution. – Oct 09 '09 at 05:30

-

@Steve314, what premature optimization? I would have written it the way Denis Otkidach wrote it, so I was trying to understand why Alex Martelli (of Python Cookbook fame) wrote it differently. After I thought about it a bit I realized that Alex's version short-circuits, and I posted a few thoughts on the differences. How do you go from a discussion of differences to premature optimization, the root of all evil? – steveha Oct 09 '09 at 16:47

-

Premature optimization rears its head by a) making you waste time writing complicated code or b) making it hard for others to read or c) causing a large-scale design to be unduly complex. This has none of those risks, it's just a drop-in piece of code hidden in a clearly-named method. – Scott Stafford Jun 25 '14 at 12:44

-

While I like Denis' solution, if you were to implement it like this, why not implement it with a dict with the list items as the keys and set the value to the number of times it shows up or just a simple True. So you would have {"one": 2, "two": 1}. It would have a time complexity of O(n). – heri0n Mar 03 '16 at 20:57

-

If the items are hashable, a set solution is more direct, and, the way I expressed it, faster (exits as soon as the answer is known -- "short-circuits", as steveha put it). Building the dict you propose (fastest as a collections.Counter) is of course far slower (needs an `all` on counts all being 1). A dict with all values True, which you also mention, is a ridiculously, uselessly bloated mimicry of a `set`, with no added value whatsoever. Big-O is not everything in programming. – Alex Martelli Mar 03 '16 at 22:30

-

Anecdote: `set.__contains__` (4.42 usec / 256 calls, 2.55% time) is *hugely* quicker than `list.__contains__` (173.00 usec / 256 calls, 100% time), so much so that the marginal overhead of `set.add` versus `list.append` is worth it even though adding items to a set is notoriously less quick. Definitely use a set, not a list, here; for really large lists I gave up after multiple hours where a set finished in seconds. – ThorSummoner Jun 03 '20 at 02:12

-

What does seen = set() do? you need to pass the list in set, I don't understand where that happens in your code. – Toma Nov 12 '21 at 19:09

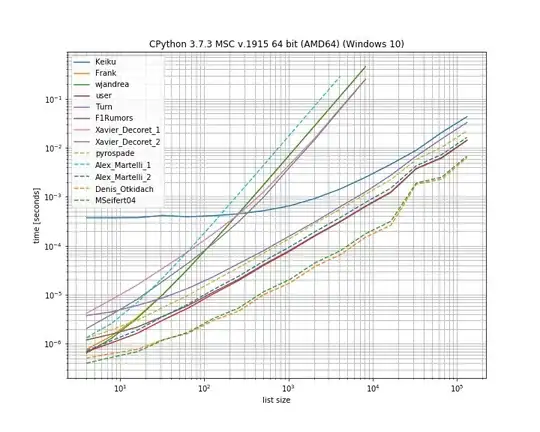

I thought it would be useful to compare the timings of the different solutions presented here. For this I used my own library simple_benchmark:

So indeed for this case the solution from Denis Otkidach is fastest.

Some of the approaches also exhibit a much steeper curve; these are the approaches that scale quadratically with the number of elements (Alex Martelli's first solution, wjandrea's, and both of Xavier Decoret's solutions). Also important to mention is that the pandas solution from Keiku has a very big constant factor, but for larger lists it almost catches up with the other solutions.

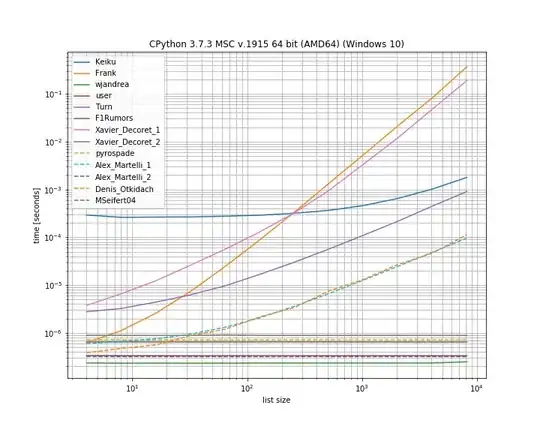

And in case the duplicate is at the first position. This is useful to see which solutions are short-circuiting:

Here several approaches don't short-circuit: Keiku, Frank, Xavier_Decoret (first solution), Turn, Alex Martelli (first solution) and the approach presented by Denis Otkidach (which was fastest in the no-duplicate case).

I included a function from my own library here: iteration_utilities.all_distinct, which can compete with the fastest solution in the no-duplicates case and runs in constant time for the duplicate-at-the-beginning case (although it is not the fastest there).

The code for the benchmark:

    from collections import Counter
    from functools import reduce

    import pandas as pd
    from simple_benchmark import BenchmarkBuilder
    from iteration_utilities import all_distinct

    b = BenchmarkBuilder()

    @b.add_function()
    def Keiku(l):
        return pd.Series(l).duplicated().sum() > 0

    @b.add_function()
    def Frank(num_list):
        unique = []
        dupes = []
        for i in num_list:
            if i not in unique:
                unique.append(i)
            else:
                dupes.append(i)
        if len(dupes) != 0:
            return False
        else:
            return True

    @b.add_function()
    def wjandrea(iterable):
        seen = []
        for x in iterable:
            if x in seen:
                return True
            seen.append(x)
        return False

    @b.add_function()
    def user(iterable):
        clean_elements_set = set()
        clean_elements_set_add = clean_elements_set.add
        for possible_duplicate_element in iterable:
            if possible_duplicate_element in clean_elements_set:
                return True
            else:
                clean_elements_set_add( possible_duplicate_element )
        return False

    @b.add_function()
    def Turn(l):
        return Counter(l).most_common()[0][1] > 1

    def getDupes(l):
        seen = set()
        seen_add = seen.add
        for x in l:
            if x in seen or seen_add(x):
                yield x

    @b.add_function()
    def F1Rumors(l):
        try:
            if next(getDupes(l)): return True    # Found a dupe
        except StopIteration:
            pass
        return False

    def decompose(a_list):
        return reduce(
            lambda u, o : (u[0].union([o]), u[1].union(u[0].intersection([o]))),
            a_list,
            (set(), set()))

    @b.add_function()
    def Xavier_Decoret_1(l):
        return not decompose(l)[1]

    @b.add_function()
    def Xavier_Decoret_2(l):
        try:
            def func(s, o):
                if o in s:
                    raise Exception
                return s.union([o])
            reduce(func, l, set())
            return True
        except:
            return False

    @b.add_function()
    def pyrospade(xs):
        s = set()
        return any(x in s or s.add(x) for x in xs)

    @b.add_function()
    def Alex_Martelli_1(thelist):
        return any(thelist.count(x) > 1 for x in thelist)

    @b.add_function()
    def Alex_Martelli_2(thelist):
        seen = set()
        for x in thelist:
            if x in seen: return True
            seen.add(x)
        return False

    @b.add_function()
    def Denis_Otkidach(your_list):
        return len(your_list) != len(set(your_list))

    @b.add_function()
    def MSeifert04(l):
        return not all_distinct(l)

And for the arguments:

    # No duplicate run
    @b.add_arguments('list size')
    def arguments():
        for exp in range(2, 14):
            size = 2**exp
            yield size, list(range(size))

    # Duplicate at beginning run
    @b.add_arguments('list size')
    def arguments():
        for exp in range(2, 14):
            size = 2**exp
            yield size, [0, *list(range(size))]

    # Running and plotting
    r = b.run()
    r.plot()
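For readers without simple_benchmark installed, a rough stdlib-only sketch of the same comparison might look like this. It uses `timeit` instead of the author's library and covers only two of the approaches. The helper names `set_len` and `short_circuit`, the list size, and the repetition count are illustrative choices, so absolute numbers will differ from the plots above.

```python
import timeit

def set_len(lst):
    # Builds the whole set in C, never stops early.
    return len(lst) != len(set(lst))

def short_circuit(lst):
    # Python-level loop that returns at the first repeated item.
    seen = set()
    for x in lst:
        if x in seen:
            return True
        seen.add(x)
    return False

size = 2**13
cases = {
    "no duplicates": list(range(size)),
    "duplicate at start": [0, *range(size)],
}

for label, data in cases.items():
    for func in (set_len, short_circuit):
        seconds = timeit.timeit(lambda: func(data), number=200)
        print(f"{label:20} {func.__name__:15} {seconds:.4f} s")
```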


-

For reference: The all_distinct function is [written in C](https://github.com/MSeifert04/iteration_utilities/blob/384948b4e82e41de47fa79fb73efc56c08549b01/src/alldistinct.c#L6). – Evandro Coan Jun 25 '19 at 22:48

-

It's nice to see that the most beautiful (aka Pythonic) solution is also the fastest one. You've got to appreciate this feature of Python. – Jeyekomon Jan 11 '22 at 15:00

This is old, but the answers here led me to a slightly different solution. If you are up for abusing comprehensions, you can get short-circuiting this way.

    xs = [1, 2, 1]
    s = set()
    any(x in s or s.add(x) for x in xs)

    # You can use a similar approach to actually retrieve the duplicates.
    s = set()
    duplicates = set(x for x in xs if x in s or s.add(x))
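The trick relies on `set.add` returning `None`, which is falsy, so the `or` expression is only truthy when the item was already in the set. A small sketch that spells this out (the function name `has_duplicates` is just for illustration):

```python
s = set()
print(s.add(1))             # None: set.add mutates the set and returns nothing
print(1 in s or s.add(1))   # True: the membership test succeeds, add is skipped

def has_duplicates(xs):
    seen = set()
    # Unseen x: `x in seen` is False, so `seen.add(x)` runs and gives None (falsy).
    # Repeated x: `x in seen` is True and any() stops iterating.
    return any(x in seen or seen.add(x) for x in xs)

print(has_duplicates([1, 2, 1]))  # True
print(has_duplicates([1, 2, 3]))  # False
```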


If you are fond of functional programming style, here is a useful function, self-documented and tested code using doctest.

    def decompose(a_list):
        """Turns a list into a set of all elements and a set of duplicated elements.

        Returns a pair of sets. The first one contains elements
        that are found at least once in the list. The second one
        contains elements that appear more than once.

        >>> decompose([1,2,3,5,3,2,6])
        (set([1, 2, 3, 5, 6]), set([2, 3]))
        """
        # Python 2 only: tuple unpacking in lambda parameters was removed in Python 3.
        return reduce(
            lambda (u, d), o : (u.union([o]), d.union(u.intersection([o]))),
            a_list,
            (set(), set()))

    if __name__ == "__main__":
        import doctest
        doctest.testmod()

From there you can test uniqueness by checking whether the second element of the returned pair is empty:

    def is_set(l):
        """Test if there is no duplicate element in l.

        >>> is_set([1,2,3])
        True
        >>> is_set([1,2,1])
        False
        >>> is_set([])
        True
        """
        return not decompose(l)[1]

Note that this is not efficient since you are explicitly constructing the decomposition. But along the lines of using reduce, you can come up with something equivalent to (but slightly less efficient than) answer 5:

    def is_set(l):
        try:
            def func(s, o):
                if o in s:
                    raise Exception
                return s.union([o])
            reduce(func, l, set())
            return True
        except:
            return False


-

Should have read related questions first. This is described in http://stackoverflow.com/questions/1723072/find-and-keep-duplicates-of-sublist-in-python – Xavier Decoret Jun 06 '11 at 10:46

-

It throws me an "invalid syntax" error on the lambda function of decompose() – robertspierre May 13 '19 at 10:43

-

That's because unpacking in lambda argument lists has been removed in Python 3.x. – MSeifert Jun 25 '19 at 20:38
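A possible Python 3 port of `decompose`, sketched under the assumption that only the tuple-unpacking lambda needs to change. The inner helper `step` is a name introduced here, and `reduce` has to be imported from `functools` on Python 3:

```python
from functools import reduce

def decompose(a_list):
    """Return (set of all elements, set of elements seen more than once)."""
    def step(acc, o):
        u, d = acc  # unpack in the body instead of in the parameter list
        return u | {o}, d | (u & {o})
    return reduce(step, a_list, (set(), set()))

def is_set(l):
    """True if l has no duplicate element."""
    return not decompose(l)[1]

uniques, dupes = decompose([1, 2, 3, 5, 3, 2, 6])
print(sorted(uniques), sorted(dupes))  # [1, 2, 3, 5, 6] [2, 3]
print(is_set([1, 2, 3]), is_set([1, 2, 1]), is_set([]))  # True False True
```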

I recently answered a related question to establish all the duplicates in a list, using a generator. It has the advantage that if used just to establish 'if there is a duplicate' then you just need to get the first item and the rest can be ignored, which is the ultimate shortcut.

This is an interesting set based approach I adapted straight from moooeeeep:

    def getDupes(l):
        seen = set()
        seen_add = seen.add
        for x in l:
            if x in seen or seen_add(x):
                yield x

Accordingly, a full list of dupes would be list(getDupes(etc)). To simply test "if" there is a dupe, it should be wrapped as follows:

    def hasDupes(l):
        try:
            if getDupes(l).next(): return True    # Found a dupe
        except StopIteration:
            pass
        return False

This scales well and provides consistent operating times wherever the dupe is in the list; I tested with lists of up to 1m entries. If you know something about the data (specifically, that dupes are likely to show up in the first half) or have other requirements, such as needing the actual dupes, then there are a couple of alternative dupe locators that might outperform it. The two I recommend are...

Simple dict based approach, very readable:

    def getDupes(c):
        d = {}
        for i in c:
            if i in d:
                if d[i]:
                    yield i
                    d[i] = False
            else:
                d[i] = True

Leverage itertools (essentially an ifilter/izip/tee) on the sorted list, very efficient if you are getting all the dupes though not as quick to get just the first:

    import itertools

    def getDupes(c):
        a, b = itertools.tee(sorted(c))
        next(b, None)
        r = None
        for k, g in itertools.ifilter(lambda x: x[0]==x[1], itertools.izip(a, b)):
            if k != r:
                yield k
                r = k

These were the top performers from the approaches I tried for the full dupe list, with the first dupe occurring anywhere in a 1m element list from the start to the middle. It was surprising how little overhead the sort step added. Your mileage may vary, but here are my specific timed results:

    Finding FIRST duplicate, single dupe places "n" elements in to 1m element array

    Test set len change :     50 - . . . . . -- 0.002
    Test in dict        :     50 - . . . . . -- 0.002
    Test in set         :     50 - . . . . . -- 0.002
    Test sort/adjacent  :     50 - . . . . . -- 0.023
    Test sort/groupby   :     50 - . . . . . -- 0.026
    Test sort/zip       :     50 - . . . . . -- 1.102
    Test sort/izip      :     50 - . . . . . -- 0.035
    Test sort/tee/izip  :     50 - . . . . . -- 0.024
    Test moooeeeep      :     50 - . . . . . -- 0.001 *
    Test iter*/sorted   :     50 - . . . . . -- 0.027

    Test set len change :   5000 - . . . . . -- 0.017
    Test in dict        :   5000 - . . . . . -- 0.003 *
    Test in set         :   5000 - . . . . . -- 0.004
    Test sort/adjacent  :   5000 - . . . . . -- 0.031
    Test sort/groupby   :   5000 - . . . . . -- 0.035
    Test sort/zip       :   5000 - . . . . . -- 1.080
    Test sort/izip      :   5000 - . . . . . -- 0.043
    Test sort/tee/izip  :   5000 - . . . . . -- 0.031
    Test moooeeeep      :   5000 - . . . . . -- 0.003 *
    Test iter*/sorted   :   5000 - . . . . . -- 0.031

    Test set len change :  50000 - . . . . . -- 0.035
    Test in dict        :  50000 - . . . . . -- 0.023
    Test in set         :  50000 - . . . . . -- 0.023
    Test sort/adjacent  :  50000 - . . . . . -- 0.036
    Test sort/groupby   :  50000 - . . . . . -- 0.134
    Test sort/zip       :  50000 - . . . . . -- 1.121
    Test sort/izip      :  50000 - . . . . . -- 0.054
    Test sort/tee/izip  :  50000 - . . . . . -- 0.045
    Test moooeeeep      :  50000 - . . . . . -- 0.019 *
    Test iter*/sorted   :  50000 - . . . . . -- 0.055

    Test set len change : 500000 - . . . . . -- 0.249
    Test in dict        : 500000 - . . . . . -- 0.145
    Test in set         : 500000 - . . . . . -- 0.165
    Test sort/adjacent  : 500000 - . . . . . -- 0.139
    Test sort/groupby   : 500000 - . . . . . -- 1.138
    Test sort/zip       : 500000 - . . . . . -- 1.159
    Test sort/izip      : 500000 - . . . . . -- 0.126
    Test sort/tee/izip  : 500000 - . . . . . -- 0.120 *
    Test moooeeeep      : 500000 - . . . . . -- 0.131
    Test iter*/sorted   : 500000 - . . . . . -- 0.157

-

The `.next()` call in your second code block doesn't work on Python 3.x. I think `next(getDupes(l))` should work across Python versions, so it may make sense to change that. – MSeifert Jun 25 '19 at 20:36

-

Also `ifilter` and `izip` can be simply replaced by the built-in `filter` and `zip` in Python 3.x. – MSeifert Jun 25 '19 at 20:40

-

@MSeifert the solution works for python 2.x as written, and yes, for py3 you can use filter and map directly ... but someone using the py3 solution in py2 code base would not get the benefits because it would not operate as a generator. Explicit is better than implicit in this case ;) – F1Rumors Jul 17 '19 at 17:03
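Following the suggestions in these comments, a Python 3 port of the sorted/tee approach could look like the sketch below. The names `get_dupes_sorted` and `has_dupes` are illustrative, not the author's. Two details differ from the Python 2 code on purpose: a private sentinel object replaces `None` so that a duplicated `None` is still reported, and `has_dupes` passes a default to `next()` so that a falsy duplicate such as `0` still counts.

```python
from itertools import tee

def get_dupes_sorted(c):
    """Yield each duplicated value once by comparing neighbours in sorted order."""
    a, b = tee(sorted(c))
    next(b, None)      # advance the second iterator so zip pairs neighbours
    last = object()    # sentinel that equals nothing in the input
    for x, y in zip(a, b):  # the built-in zip is lazy on Python 3
        if x == y and x != last:
            yield x
            last = x

def has_dupes(c):
    """True if any value repeats, including falsy values such as 0."""
    missing = object()
    return next(get_dupes_sorted(c), missing) is not missing

print(list(get_dupes_sorted([3, 1, 3, 2, 1, 3])))  # [1, 3]
print(has_dupes([0, 0]))                           # True
print(has_dupes([1, 2, 3]))                        # False
```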

Another way of doing this succinctly is with Counter.

To just determine if there are any duplicates in the original list:

    from collections import Counter

    def has_dupes(l):
        # second element of the tuple has number of repetitions
        return Counter(l).most_common()[0][1] > 1

Or to get a list of items that have duplicates:

    def get_dupes(l):
        return [k for k, v in Counter(l).items() if v > 1]
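One edge case worth noting: `Counter(l).most_common()` is an empty list when `l` is empty, so indexing `[0]` raises `IndexError`. A guarded variant might look like this sketch, which also asks only for the single most common entry via `most_common(1)`:

```python
from collections import Counter

def has_dupes(l):
    # most_common(1) returns [] for empty input, so check before indexing
    top = Counter(l).most_common(1)
    return bool(top) and top[0][1] > 1

print(has_dupes([]))                       # False
print(has_dupes(['one', 'two', 'one']))    # True
print(has_dupes(['one', 'two', 'three']))  # False
```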

-

Outstanding answer, succinct is right. No need for a bunch of lambda x: reduce(union(intersection)) etc.... :) – Colin D Bennett Feb 01 '23 at 21:35

    my_list = ['one', 'two', 'one']
    duplicates = []
    for value in my_list:
        if my_list.count(value) > 1:
            if value not in duplicates:
                duplicates.append(value)
    print(duplicates)  # ['one']


I found this to give the best performance because it short-circuits as soon as the first duplicate is found. The algorithm has O(n) time and space complexity, where n is the list's length:

    def has_duplicated_elements(iterable):
        """ Given an `iterable`, return True if there are duplicated entries. """
        clean_elements_set = set()
        clean_elements_set_add = clean_elements_set.add

        for possible_duplicate_element in iterable:
            if possible_duplicate_element in clean_elements_set:
                return True
            else:
                clean_elements_set_add( possible_duplicate_element )

        return False


If the list contains unhashable items, you can use Alex Martelli's solution but with a list instead of a set, though it's slower for larger inputs: O(N^2).

    def has_duplicates(iterable):
        seen = []
        for x in iterable:
            if x in seen:
                return True
            seen.append(x)
        return False
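A short usage sketch with unhashable items (the sample inputs are made up). Membership in a list is tested with `==`, so lists and dicts can be mixed freely:

```python
def has_duplicates(iterable):
    seen = []
    for x in iterable:
        if x in seen:  # linear scan using ==, so no hashing is needed
            return True
        seen.append(x)
    return False

print(has_duplicates([[1, 2], {'a': 1}, [1, 2]]))  # True
print(has_duplicates([[1, 2], {'a': 1}, [2, 1]]))  # False
print(has_duplicates(iter('abca')))                # True, works on any iterable
```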


-

Coming back to this now, I'm not sure why you would use this instead of Alex's other solution, `any(thelist.count(x) > 1 for x in thelist)`. The only advantage I see is that this works for any iterable while Alex's only works for lists (or other types with a `.count()` method). – wjandrea Nov 15 '21 at 20:00

I used pyrospade's approach, for its simplicity, and modified that slightly on a short list made from the case-insensitive Windows registry.

If the raw PATH value string is split into individual paths all 'null' paths (empty or whitespace-only strings) can be removed by using:

    PATH_nonulls = [s for s in PATH if s.strip()]

    def HasDupes(aseq) :
        s = set()
        return any(((x.lower() in s) or s.add(x.lower())) for x in aseq)

    def GetDupes(aseq) :
        s = set()
        return set(x for x in aseq if ((x.lower() in s) or s.add(x.lower())))

    def DelDupes(aseq) :
        seen = set()
        return [x for x in aseq if (x.lower() not in seen) and (not seen.add(x.lower()))]
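The same idea can be generalized with a key function, so that "duplicate" means "maps to the same key". This is only a sketch: the name `has_dupes_by_key` and its `key` parameter are hypothetical, and it defaults to `str.casefold` rather than the `lower()` used above.

```python
def has_dupes_by_key(items, key=str.casefold):
    """True if two items map to the same key (default: case-insensitive text)."""
    seen = set()
    for item in items:
        k = key(item)
        if k in seen:
            return True
        seen.add(k)
    return False

paths = ['C:\\Python37\\', 'C:\\Python37\\Scripts\\', 'c:\\python37\\']
print(has_dupes_by_key(paths))                   # True
print(has_dupes_by_key(paths, key=lambda s: s))  # False (case-sensitive)
```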

The original PATH has both 'null' entries and duplicates for testing purposes:

[list] Root paths in HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Environment:PATH

1 C:\Python37\

2

3

4 C:\Python37\Scripts\

5 c:\python37\

6 C:\Program Files\ImageMagick-7.0.8-Q8

7 C:\Program Files (x86)\poppler\bin

8 D:\DATA\Sounds

9 C:\Program Files (x86)\GnuWin32\bin

10 C:\Program Files (x86)\Intel\iCLS Client\

11 C:\Program Files\Intel\iCLS Client\

12 D:\DATA\CCMD\FF

13 D:\DATA\CCMD

14 D:\DATA\UTIL

15 C:\

16 D:\DATA\UHELP

17 %SystemRoot%\system32

18

19

20 D:\DATA\CCMD\FF%SystemRoot%

21 D:\DATA\Sounds

22 %SystemRoot%\System32\Wbem

23 D:\DATA\CCMD\FF

24

25

26 c:\

27 %SYSTEMROOT%\System32\WindowsPowerShell\v1.0\

28

Null paths have been removed, but the list still has duplicates, e.g., (1, 3) and (13, 20):

[list] Null paths removed from HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Environment:PATH

1 C:\Python37\

2 C:\Python37\Scripts\

3 c:\python37\

4 C:\Program Files\ImageMagick-7.0.8-Q8

5 C:\Program Files (x86)\poppler\bin

6 D:\DATA\Sounds

7 C:\Program Files (x86)\GnuWin32\bin

8 C:\Program Files (x86)\Intel\iCLS Client\

9 C:\Program Files\Intel\iCLS Client\

10 D:\DATA\CCMD\FF

11 D:\DATA\CCMD

12 D:\DATA\UTIL

13 C:\

14 D:\DATA\UHELP

15 %SystemRoot%\system32

16 D:\DATA\CCMD\FF%SystemRoot%

17 D:\DATA\Sounds

18 %SystemRoot%\System32\Wbem

19 D:\DATA\CCMD\FF

20 c:\

21 %SYSTEMROOT%\System32\WindowsPowerShell\v1.0\

And finally, the dupes have been removed:

[list] Massaged path list from HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Environment:PATH

1 C:\Python37\

2 C:\Python37\Scripts\

3 C:\Program Files\ImageMagick-7.0.8-Q8

4 C:\Program Files (x86)\poppler\bin

5 D:\DATA\Sounds

6 C:\Program Files (x86)\GnuWin32\bin

7 C:\Program Files (x86)\Intel\iCLS Client\

8 C:\Program Files\Intel\iCLS Client\

9 D:\DATA\CCMD\FF

10 D:\DATA\CCMD

11 D:\DATA\UTIL

12 C:\

13 D:\DATA\UHELP

14 %SystemRoot%\system32

15 D:\DATA\CCMD\FF%SystemRoot%

16 %SystemRoot%\System32\Wbem

17 %SYSTEMROOT%\System32\WindowsPowerShell\v1.0\


Another solution is to use slicing, which will also work with strings and other enumerable things.

    def has_duplicates(x):
        for idx, item in enumerate(x):
            if item in x[(idx + 1):]:
                return True
        return False

    >>> has_duplicates(["a", "b", "c"])
    False
    >>> has_duplicates(["a", "b", "b", "c"])
    True
    >>> has_duplicates("abc")
    False
    >>> has_duplicates("abbc")
    True


I don't really know what set does behind the scenes, so I just like to keep it simple.

    def dupes(num_list):
        # Note: returns False when a duplicate is found and True when all items are unique.
        unique = []
        dupes = []
        for i in num_list:
            if i not in unique:
                unique.append(i)
            else:
                dupes.append(i)
        if len(dupes) != 0:
            return False
        else:
            return True


A simpler solution is as follows. Flag repeated values as True/False with the pandas .duplicated() method and then take the sum. See also the pandas.Series.duplicated documentation (pandas 0.24.1).

    import pandas as pd

    def has_duplicated(l):
        return pd.Series(l).duplicated().sum() > 0

    print(has_duplicated(['one', 'two', 'one']))
    # True
    print(has_duplicated(['one', 'two', 'three']))
    # False


    def check_duplicates(my_list):
        seen = {}
        for item in my_list:
            if seen.get(item):
                return True
            seen[item] = True
        return False


-

How does the function work? I am curious as to how the dictionary "seen" is populated. – mountainscaler May 06 '20 at 06:38

-

Each time the `for` loop passes it checks the `dict` `seen` for the `key` `item`; if `item` exists then it terminates with `return True`, otherwise it adds `item` as a `key` and `True` as a `value` in `seen`. I.e., `seen[item] = True` adds a new `dict` element if the key does not already exist, and replaces the `value` if the `key` does exist. – typonaut Nov 12 '22 at 12:01