I want to find the time complexity of the following program using recurrence equations:

int g(int x);  /* forward declaration: f calls g before g is defined */

int f(int x)
{
    if (x < 1) return 1;
    else return f(x - 1) + g(x);
}

int g(int x)
{
    if (x < 2) return 1;
    else return f(x - 1) + g(x / 2);
}

I wrote its recurrence equation (writing g(n) for the cost of the call g(n)) and tried to solve it, but it keeps getting more complex:

T(n) = T(n-1) + g(n) + c

= T(n-2) + g(n-1) + g(n) + 2c

= T(n-3) + g(n-2) + g(n-1) + g(n) + 3c

= T(n-4) + g(n-3) + g(n-2) + g(n-1) + g(n) + 4c

...

After the kth step:

= T(n-k) + g(n-k+1) + ... + g(n-2) + g(n-1) + g(n) + kc

Suppose the input becomes 1 at the kth step.

Then n-k = 1, so k = n-1.

Now I end up with this:

T(n) = (n-1)c + g(n) + g(n-1) + g(n-2) + ... + g(2) + T(1)

I'm not able to solve it any further. If we count the number of function calls in this program, it is easy to see that the time complexity is exponential, but I want to prove it using a recurrence. How can that be done?

The explanation in Answer 1 looks correct; I did similar work.

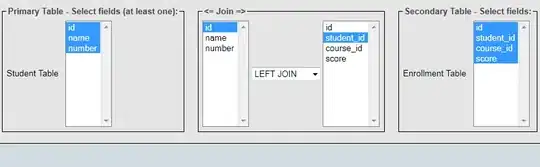

The hardest part of this problem is writing the recurrence equation. I have drawn another diagram and identified some patterns in it; I think the diagram can help work out what the recurrence equation might be.

I came up with this equation, but I am not sure whether it is right. Please help.

T(n) = 2*T(n-1) + c*log n
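One possible way to check an equation of this shape is to write the two functions as a pair of mutual recurrences. The sketch below assumes that each call does a constant amount of work c outside its recursive calls; the symbols T_f and T_g (the costs of f and g) are introduced here only for illustration and do not come from the original derivation.

```latex
\begin{align*}
T_f(n) &= T_f(n-1) + T_g(n) + c, & n &\ge 1,\\
T_g(n) &= T_f(n-1) + T_g(\lfloor n/2 \rfloor) + c, & n &\ge 2.
\end{align*}
Substituting the second line into the first, for $n \ge 2$:
\begin{align*}
T_f(n) &= 2\,T_f(n-1) + T_g(\lfloor n/2 \rfloor) + 2c \\
       &\ge 2\,T_f(n-1) \\
       &\ge 2^{\,n-1}\,T_f(1).
\end{align*}
```

Under these assumptions the lower bound T_f(n) = Ω(2^n) follows directly from the 2*T_f(n-1) term. The leftover term T_g(⌊n/2⌋) unrolls into at most log2 n further calls to f, each on an argument below n/2. That may be where the log n factor in the equation above comes from, although each of those calls costs T_f of its argument rather than a constant.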