I came across something really strange while manipulating pixels using CGBitmapContext. Basically, I'm changing the alpha values of PNGs. I have an example where I can successfully zero the alpha value of a PNG's pixels, EXCEPT when my ImageView's superview is an ImageView with an image. Why is this? I'm not sure if it's a bug or if something in the context is set up incorrectly.

Below is an example. It zeros alpha values successfully, but when you give the superview's UIImageView an image, it doesn't work right: the pixels that should have an alpha of zero are slightly visible. When you remove the image from the superview's UIImageView, the alpha values are zeroed correctly and you see the white background behind it perfectly. I've tried several different CGBlendModes, but that doesn't seem to help.
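One way to see why the result could depend on what is behind the view, assuming the views are composited with the standard premultiplied-alpha "source over" rule: for each color channel (values and alpha scaled to [0, 1]) the displayed color is

$$C_{\text{out}} = C_{\text{src}} + (1 - \alpha_{\text{src}})\,C_{\text{dst}}$$

If only the alpha byte is set to 0 while the premultiplied color bytes keep their old values, this reduces to $C_{\text{out}} = C_{\text{src}} + C_{\text{dst}}$, so the old color is still added on top. For a hypothetical brown source pixel (120, 80, 40) over a mid gray (128, 128, 128), the 8-bit result is (248, 208, 168), a light brown tint; over white, every channel saturates at 255, so the dirt appears to vanish.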

Here is a dropbox link to an example project demonstrating this strange event: https://www.dropbox.com/s/e93hzxl5ru5wnss/TestAlpha.zip

And here is the code, copy/pasted:

```csharp
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using MonoTouch.CoreGraphics;
using MonoTouch.UIKit;

// Create a background ImageView
UIImageView Background = new UIImageView(this.View.Bounds);

// Comment out the line below to see the pixels' alpha values change correctly; uncomment it to see them change incorrectly. Why is this?
// When commented out, the pixels whose alpha value I set to zero become transparent and I can see the white background through the image.
// When uncommented, I SHOULD see the "GrayPattern.png" image through the transparent pixels. I can kind of see it, but for some reason I still see the dirt pixels whose alpha is set to zero! Is this a bug? Is the CGBitmapContext set up incorrectly?
Background.Image = UIImage.FromFile("GrayPattern.png");
this.View.AddSubview(Background);

UIImageView MyImageView = new UIImageView(UIScreen.MainScreen.Bounds);
UIImage sourceImage = UIImage.FromFile("Dirt.png");
MyImageView.Image = sourceImage;
Background.AddSubview(MyImageView);

CGImage image = MyImageView.Image.CGImage;
Console.WriteLine(image.AlphaInfo);

int width = image.Width;
int height = image.Height;
CGColorSpace colorSpace = CGColorSpace.CreateDeviceRGB();
int bytesPerRow = image.BytesPerRow;
int bytesPerPixel = bytesPerRow / width;
int bitmapByteCount = bytesPerRow * height;
int bitsPerComponent = image.BitsPerComponent;
CGImageAlphaInfo alphaInfo = CGImageAlphaInfo.PremultipliedLast;

// Allocate memory because the BitmapData is unmanaged
IntPtr BitmapData = Marshal.AllocHGlobal(bitmapByteCount);

CGBitmapContext context = new CGBitmapContext(BitmapData, width, height, bitsPerComponent, bytesPerRow, colorSpace, alphaInfo);
context.SetBlendMode(CGBlendMode.Copy);
context.DrawImage(new RectangleF(0, 0, width, height), image);

UIImageView MyImageView_brush = new UIImageView(new RectangleF(0, 0, 100, 100));
UIImage sourceImage_brush = UIImage.FromFile("BB_Brush_Rect_MoreFeather.png");
MyImageView_brush.Image = sourceImage_brush;
MyImageView_brush.Hidden = true;
Background.AddSubview(MyImageView_brush);

CGImage image_brush = sourceImage_brush.CGImage;
Console.WriteLine(image_brush.AlphaInfo);

int width_brush = image_brush.Width;
int height_brush = image_brush.Height;
CGColorSpace colorSpace_brush = CGColorSpace.CreateDeviceRGB();
int bytesPerRow_brush = image_brush.BytesPerRow;
int bytesPerPixel_brush = bytesPerRow_brush / width_brush;
int bitmapByteCount_brush = bytesPerRow_brush * height_brush;
int bitsPerComponent_brush = image_brush.BitsPerComponent;
CGImageAlphaInfo alphaInfo_brush = CGImageAlphaInfo.PremultipliedLast;

// Allocate memory because the BitmapData is unmanaged
IntPtr BitmapData_brush = Marshal.AllocHGlobal(bitmapByteCount_brush);

CGBitmapContext context_brush = new CGBitmapContext(BitmapData_brush, width_brush, height_brush, bitsPerComponent_brush, bytesPerRow_brush, colorSpace_brush, alphaInfo_brush);
context_brush.SetBlendMode(CGBlendMode.Copy);
context_brush.DrawImage(new RectangleF(0, 0, width_brush, height_brush), image_brush);

for (int x = 0; x < width_brush; x++)
{
    for (int y = 0; y < height_brush; y++)
    {
        int byteIndex_brush = (bytesPerRow_brush * y) + x * bytesPerPixel_brush;
        byte alpha_brush = GetByte(byteIndex_brush + 3, BitmapData_brush);
        // Console.WriteLine("alpha_brush = " + alpha_brush);

        byte setValue = (byte)(255 - alpha_brush);
        // Console.WriteLine("setValue = " + setValue);

        int byteIndex = (bytesPerRow * y) + x * bytesPerPixel;
        SetByte(byteIndex + 3, BitmapData, setValue);
    }
}

// Set the MyImageView image to the context image, but I still see the whole top image; I don't see any of the bottom image showing through.
MyImageView.Image = UIImage.FromImage(context.ToImage());

context.Dispose();
context_brush.Dispose();

// Free the memory used by the BitmapData now that we're finished
Marshal.FreeHGlobal(BitmapData);
Marshal.FreeHGlobal(BitmapData_brush);

public unsafe byte GetByte(int offset, IntPtr buffer)
{
    byte* bufferAsBytes = (byte*) buffer;
    return bufferAsBytes[offset];
}

public unsafe void SetByte(int offset, IntPtr buffer, byte setValue)
{
    byte* bufferAsBytes = (byte*) buffer;
    bufferAsBytes[offset] = setValue;
}
```
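For reference: with `CGImageAlphaInfo.PremultipliedLast`, each pixel is stored as R, G, B, A, and the color bytes are already multiplied by alpha, so in valid data no color byte exceeds the alpha byte. The loop above writes only byte `+3`, so the color bytes keep their old values. Below is a minimal sketch, assuming 8 bits per component and 4 bytes per pixel, of changing a pixel's alpha while rescaling its color bytes to match. It works on a managed `byte[]` rather than the `IntPtr` buffer, and `PremultipliedAlpha.SetAlpha` is a hypothetical helper, not a Core Graphics or Xamarin API.

```csharp
using System;

static class PremultipliedAlpha
{
    // Hypothetical helper: sets the alpha of the RGBA8 pixel starting at byteIndex
    // in a premultiplied buffer (R, G, B, A order, as PremultipliedLast describes)
    // and rescales R, G and B so the pixel stays valid (each color byte <= alpha).
    public static void SetAlpha(byte[] buffer, int byteIndex, byte newAlpha)
    {
        int oldAlpha = buffer[byteIndex + 3];
        for (int c = 0; c < 3; c++)
        {
            int premultiplied = buffer[byteIndex + c];
            // color_new = color_old * newAlpha / oldAlpha, with integer rounding.
            // If oldAlpha is 0 the original color is already gone, so write 0.
            int scaled = oldAlpha == 0
                ? 0
                : (premultiplied * newAlpha + oldAlpha / 2) / oldAlpha;
            // Clamp in case the input was not valid premultiplied data.
            buffer[byteIndex + c] = (byte)Math.Min(scaled, (int)newAlpha);
        }
        buffer[byteIndex + 3] = newAlpha;
    }
}
```

In the loop above, the equivalent change would be to read all four bytes at `byteIndex` with `GetByte` and write the rescaled R, G, B values back with `SetByte` along with the new alpha, rather than writing only `byteIndex + 3`.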

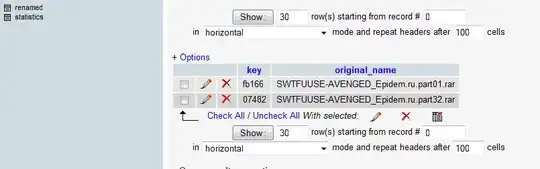

Update: I've found a workaround, though its performance is unacceptable for what I'm doing. The workaround is simply to take the result image from the code above, convert it to NSData, then convert the NSData back to a UIImage. Magically, the image looks correct! However, this takes 2 seconds with my image, which is far too long for my app; a couple hundred ms would be acceptable, but 2 seconds is way too long. If this is a bug, hopefully it gets resolved!

Here is the workaround code (poor performance). Just add it to the end of the code above (or download my Dropbox project, add it to the end, and run):

```csharp
// Round-trip the result through PNG-encoded NSData
NSData test = MyImageView.Image.AsPNG();
UIImage workAroundImage = UIImage.LoadFromData(test);
MyImageView.Image = workAroundImage;
```
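PNG stores straight (non-premultiplied) alpha, so encoding premultiplied pixels as PNG has to divide each color byte by alpha, and the decoded image is presumably premultiplied again for display. The sketch below models that round trip for a single RGBA8 pixel; `AlphaConversion`, `Unpremultiply` and `Premultiply` are hypothetical names, not ImageIO or UIKit APIs, and 8-bit channels are assumed. It shows that a pixel with alpha 0 comes back with all color bytes 0, which would be consistent with the brown tint disappearing after the NSData round trip.

```csharp
using System;

static class AlphaConversion
{
    // Premultiplied -> straight: divide each color byte by alpha (with rounding).
    // With alpha 0 there is no color to recover, so this sketch writes 0.
    public static byte[] Unpremultiply(byte[] px)
    {
        byte a = px[3];
        var result = new byte[4];
        for (int c = 0; c < 3; c++)
            result[c] = a == 0 ? (byte)0 : (byte)Math.Min(255, (px[c] * 255 + a / 2) / a);
        result[3] = a;
        return result;
    }

    // Straight -> premultiplied: multiply each color byte by alpha / 255 (with rounding).
    public static byte[] Premultiply(byte[] px)
    {
        byte a = px[3];
        var result = new byte[4];
        for (int c = 0; c < 3; c++)
            result[c] = (byte)((px[c] * a + 127) / 255);
        result[3] = a;
        return result;
    }
}
```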

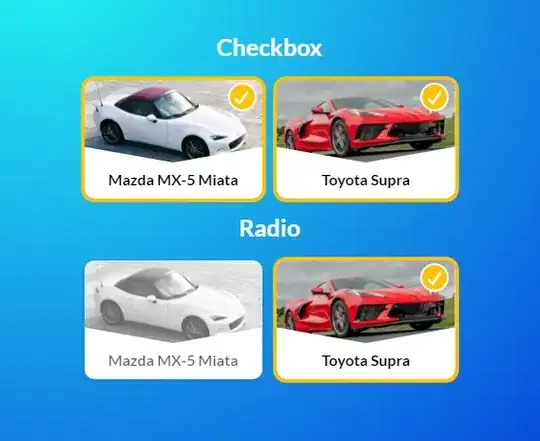

Here are before and after pictures. Before is without the workaround; After includes the workaround.

Notice the gray doesn't show up completely. It's covered in a light brown, as if the alpha value isn't fully zeroed.


Notice that with the workaround you can see the gray perfectly and clearly, with no light brown over it at all. The only thing I did was convert the image to NSData and then back to a UIImage again! Very strange...
