Oprofile takes stack samples. What you need to do is not look at summaries of them, but actually examine the raw samples. If you are spending, say, 30% of time in the kernel, then if you can see 10 stack samples chosen at random, you can expect 3 of them, more or less, to show you the full reason of how you got into the kernel.

That way you will see things the summaries or call graph won't show you.

IN CASE IT ISN'T CLEAR: Since __ticket_spin_lock is on the stack 99.6% of the time, then on each and every stack sample you look at, the probability is 99.6% you will see how you got into that routine.

Then if you don't really need to be doing that, you have possibly a 250x speedup.

That's like from four minutes down to one second. Screw the "correct" or "automated" approach - get the results.

ADDED: The thing about profilers is they are popular and some have very nice UIs,

but sadly, I'm afraid, it's a case of "the emperor's new clothes".

If such a tool doesn't find much to fix, you're going to like it, because it says (probably falsely) that your code, as written, is near-optimal.

There are lots of postings recommending this or that profiler, but

I can't point to any claim of saving more than some percent of time, like 40%, using a profiler.

Maybe there are some.

I have never heard of a profiler being used first to get a speedup, and then being used again to get a second speedup, and so on.

That's how you get real speedup - multiple optimizations.

Something that was just a small performance problem at the beginning is no longer small after you've removed a larger one.

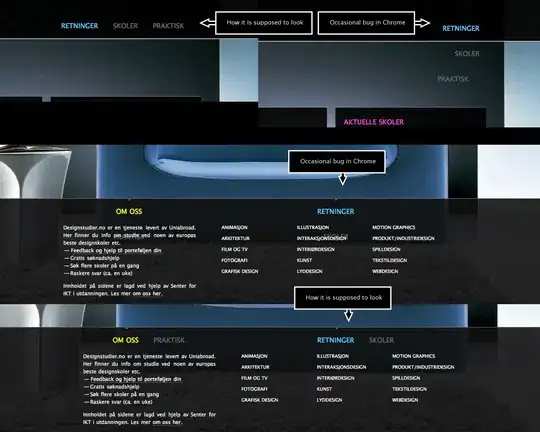

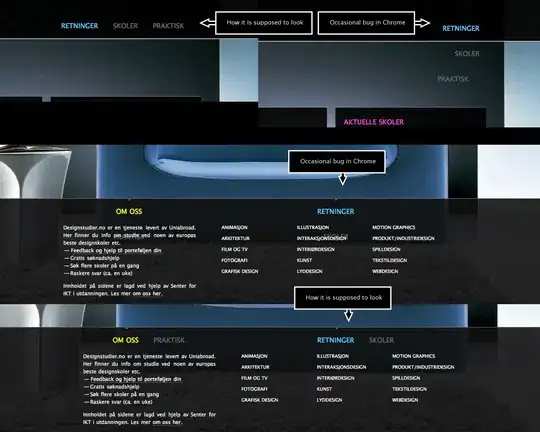

This picture shows how, by removing six problems, the speedup is nearly three orders of magnitude.

You can't necessarily do that, but isn't it worth trying?

APOLOGIES for further editing. I just wanted to show easy it is to fool a call graph.

The red lines represent call stack samples. Here A1 spends all its time calling C2, and vice-versa. Then suppose you keep the same behavior, but you put in a "dispatch" routine B.

Now the call graph loses the information that A1 spends all its time in C2, and vice-versa.

You can easily extend this example to multiple levels.

You can say a call tree would have seen that.

Well, here's how you can fool a call tree. A spends all its time in calls to C.

Now if instead A calls B1, B2, ... Bn, and those call C, the "hot path" from A to C is broken up into pieces, so the relationship between A and C is hidden.

You can say a call tree would have seen that.

Well, here's how you can fool a call tree. A spends all its time in calls to C.

Now if instead A calls B1, B2, ... Bn, and those call C, the "hot path" from A to C is broken up into pieces, so the relationship between A and C is hidden.

There are many other perfectly ordinary programming practices that will confuse these tools, especially when the samples are 10-30 levels deep and the functions are all little, but the relationships cannot hide from a programmer carefully examining a moderate number of samples.

There are many other perfectly ordinary programming practices that will confuse these tools, especially when the samples are 10-30 levels deep and the functions are all little, but the relationships cannot hide from a programmer carefully examining a moderate number of samples.

You can say a call tree would have seen that.

Well, here's how you can fool a call tree. A spends all its time in calls to C.

Now if instead A calls B1, B2, ... Bn, and those call C, the "hot path" from A to C is broken up into pieces, so the relationship between A and C is hidden.

You can say a call tree would have seen that.

Well, here's how you can fool a call tree. A spends all its time in calls to C.

Now if instead A calls B1, B2, ... Bn, and those call C, the "hot path" from A to C is broken up into pieces, so the relationship between A and C is hidden.

There are many other perfectly ordinary programming practices that will confuse these tools, especially when the samples are 10-30 levels deep and the functions are all little, but the relationships cannot hide from a programmer carefully examining a moderate number of samples.

There are many other perfectly ordinary programming practices that will confuse these tools, especially when the samples are 10-30 levels deep and the functions are all little, but the relationships cannot hide from a programmer carefully examining a moderate number of samples.