Background: I must call a web service 1,500 times, and each call takes roughly 1.3 seconds to complete. (I have no control over this third-party API.) Total time = 1500 × 1.3 s = 1950 s, or roughly 32.5 minutes.

I came up with what I thought was a good solution, but it did not pan out that well: I changed the calls to async web calls, thinking this would dramatically improve my results. It did not.

Example Code:

Pre-Optimizations:

    foreach (var elmKeyDataElementNamed in findResponse.Keys)
    {
        var getRequest = new ElementMasterGetRequest
        {
            Key = new elmFullKey
            {
                CmpCode = CodaServiceSettings.CompanyCode,
                Code = elmKeyDataElementNamed.Code,
                Level = filterLevel
            }
        };

        ElementMasterGetResponse getResponse;
        _elementMasterServiceClient.Get(new MasterOptions(), getRequest, out getResponse);
        elementList.Add(new CodaElement { Element = getResponse.Element, SearchCode = filterCode });
    }

With Optimizations:

    var tasks = findResponse.Keys.Select(elmKeyDataElementNamed => new ElementMasterGetRequest
    {
        Key = new elmFullKey
        {
            CmpCode = CodaServiceSettings.CompanyCode,
            Code = elmKeyDataElementNamed.Code,
            Level = filterLevel
        }
    }).Select(getRequest => _elementMasterServiceClient.GetAsync(new MasterOptions(), getRequest)).ToList();

    Task.WaitAll(tasks.ToArray());

    elementList.AddRange(tasks.Select(p => new CodaElement
    {
        Element = p.Result.GetResponse.Element,
        SearchCode = filterCode
    }));

Smaller Sampling Example: To make testing easier, I used a smaller sample of 40 records. This took 60 seconds with no optimizations and 50 seconds with the optimizations. I would have thought it would be closer to 30 seconds or better.

I used Wireshark to watch the transactions come through and realized the async version was not sending requests as fast as I assumed it would.
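To show what I expected from the async version, here is a minimal sketch of the comparison using a simulated call instead of the real service. `FakeGetAsync` is a hypothetical stand-in built on `Task.Delay`, and the count and delay values are made up for illustration; none of this touches the real client or the network.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

public static class AsyncTimingSketch
{
    // Hypothetical stand-in for the service call: completes after a fixed delay.
    public static async Task<int> FakeGetAsync(int id, int delayMs)
    {
        await Task.Delay(delayMs);
        return id;
    }

    // Runs the calls one after another and returns the elapsed milliseconds.
    public static long RunSequential(int count, int delayMs)
    {
        var sw = Stopwatch.StartNew();
        for (var i = 0; i < count; i++)
        {
            FakeGetAsync(i, delayMs).Wait();
        }
        return sw.ElapsedMilliseconds;
    }

    // Starts every call up front, waits for all of them, and returns the elapsed milliseconds.
    public static long RunConcurrent(int count, int delayMs)
    {
        var sw = Stopwatch.StartNew();
        var tasks = Enumerable.Range(0, count)
            .Select(i => FakeGetAsync(i, delayMs))
            .ToArray();
        Task.WaitAll(tasks);
        return sw.ElapsedMilliseconds;
    }

    public static void Main()
    {
        const int count = 40;    // same size as my sample
        const int delayMs = 100; // assumed delay, shortened to keep the run quick
        Console.WriteLine("Sequential: {0} ms", RunSequential(count, delayMs));
        Console.WriteLine("Concurrent: {0} ms", RunConcurrent(count, delayMs));
    }
}
```

With nothing throttling the calls, the concurrent run should finish in roughly the time of a single delay, which is the kind of gap I was hoping to see against the real service.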

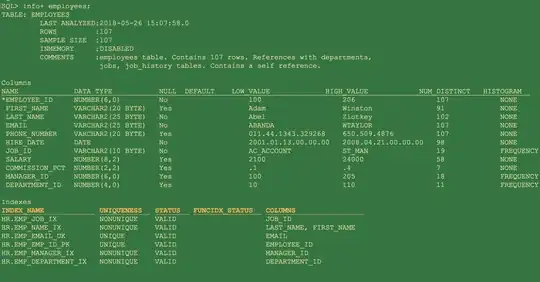

Async requests captured

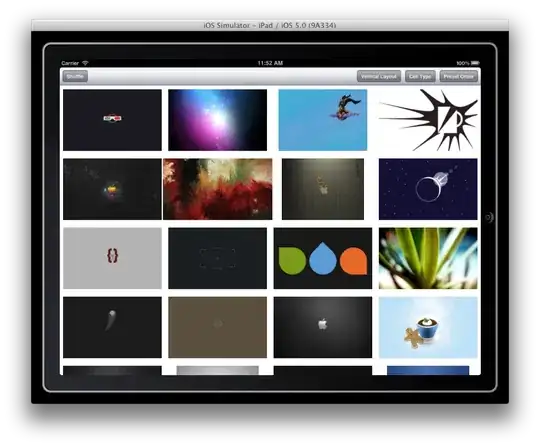

Normal no optimization

You can see that the async version pushes a few requests out very quickly, then drops off...

Also note that between requests 10 and 11 it took nearly 3 seconds.

Is the overhead of creating threads for the tasks so high that it takes seconds? Note: the tasks I am referring to are from the .NET 4.5 TAP (Task-based Asynchronous Pattern) library.

Why wouldn't the requests go out faster than that? I was told the Apache web server I was hitting could handle a maximum of 200 threads, so I don't see an issue there.

Am I not thinking about this clearly? Is there little advantage to async requests when calling web services? Do I have a mistake in my code? Any ideas would be great.
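The server limit is only one side of this; a client-side cap on concurrent connections is something I have not ruled out. Below is a minimal sketch for inspecting and raising `ServicePointManager.DefaultConnectionLimit`. It assumes the generated service client ultimately goes through `HttpWebRequest`, which I have not confirmed, and the value 40 is just my sample size, not a recommendation. `SetLimit` is a hypothetical helper name.

```csharp
using System;
using System.Net;

public static class ConnectionLimitCheck
{
    // Sets the default per-ServicePoint connection cap and returns the value now in effect.
    public static int SetLimit(int limit)
    {
        ServicePointManager.DefaultConnectionLimit = limit;
        return ServicePointManager.DefaultConnectionLimit;
    }

    public static void Main()
    {
        // Maximum number of concurrent connections allowed per ServicePoint (per host).
        Console.WriteLine("Current limit: {0}", ServicePointManager.DefaultConnectionLimit);

        // Assumption: 40 matches my sample size; any other value could be tried here.
        Console.WriteLine("New limit: {0}", SetLimit(40));
    }
}
```

As far as I understand, the value would need to be set before the first request to the host is made, so it would belong at application start-up rather than next to the calls.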