I am trying to fit a two-part line to data.

Here's some sample data:

x<-c(0.00101959664756622, 0.001929220749155, 0.00165657261751726,

0.00182514724375389, 0.00161532360585458, 0.00126991061099209,

0.00149545009309177, 0.000816386510029308, 0.00164402569283353,

0.00128029006251656, 0.00206892841921455, 0.00132378793976235,

0.000953143467154676, 0.00272964503695939, 0.00169743839571702,

0.00286411493120396, 0.0016464862337286, 0.00155672067449593,

0.000878271561566836, 0.00195872573138819, 0.00255412836538339,

0.00126212428137799, 0.00106206607962734, 0.00169140916371657,

0.000858015581562961, 0.00191955159274793, 0.00243104345247067,

0.000871042201994687, 0.00229814264111745, 0.00226756341241083)

y<-c(1.31893118849162, 0.105150790530179, 0.412732029152914, 0.25589805483046,

0.467147868109498, 0.983984462069833, 0.640007862668818, 1.51429617241365,

0.439777145282391, 0.925550163462951, -0.0555942758921906, 0.870117027565708,

1.38032147826294, -0.96757052387814, 0.346370836378525, -1.08032147826294,

0.426215616848312, 0.55151485221263, 1.41306889485598, 0.0803478641720901,

-0.86654892295057, 1.00422341998656, 1.26214517662281, 0.359512373951839,

1.4835398594013, 0.154967053938309, -0.680501679226447, 1.44740598234453,

-0.512732029152914, -0.359512373951839)

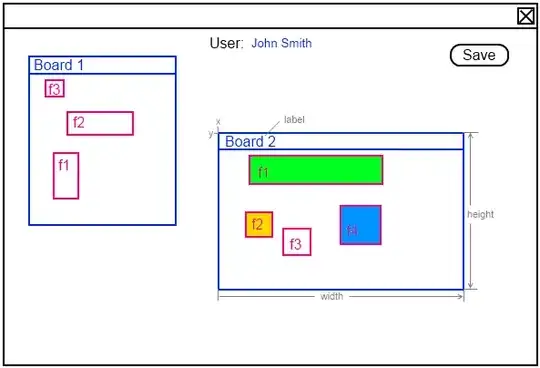

I am hoping to be able to define the best fitting two part line (hand drawn example shown)

I then define a piecewise function that should find a two part linear function. The definition is based on the gradients of the two lines and their intercept with each other, which should completely define the lines.

# A=gradient of first line segment

# B=gradient of second line segment

# Cx=inflection point x coord

# Cy=inflexion point y coord

out_model <- nls(y ~ I(x <= Cx)*Cy-A*(Cx-x)+I(x > Cx)*Cy+B*(x),

data = data.frame(x,y),

start = c(A=-500,B=-500,Cx=0.0001,Cy=-1.5) )

However I get the error:

Error in nls(y ~ I(x <= Cx) * Cy - A * (Cx - x) + I(x > Cx) * Cy + B * : singular gradient

I got the basic method from Finding a curve to match data

Any ideas where I am going wrong?