Non-optimized allocations in common allocators carry some overhead. You can think of each allocation as two "blocks": an INFO block and a STORAGE block.

The INFO block will most likely sit directly in front of your STORAGE block.

So after an allocation, your memory will look something like this:

              Memory that is actually accessible
              vvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvv
    --------------------------------------------
    | INFO   | STORAGE                         |
    --------------------------------------------
    ^^^^^^^^^^
    Some information on the size of the "STORAGE" chunk, etc.

Additionally, the block will be aligned to a certain granularity (for example, 16 bytes when allocating an int).

I'll describe what this looks like on MSVC 2012, since that is what I can test on at the moment.

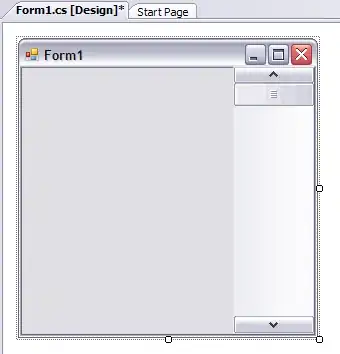

Let's have a look at our memory. The arrows indicate 16-byte boundaries.

If you allocate a single 4-byte integer, you get 4 bytes of memory starting at a 16-byte boundary (the orange square after the second boundary).

The 16 bytes preceding this block (the blue ones) are occupied by additional information. (I'll skip details such as endianness here, but keep in mind that they can affect this sort of layout.) If you read the first four bytes of this 16-byte block in front of your allocated memory, you'll find the number of allocated bytes.
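To make the INFO/STORAGE idea concrete without touching the real heap, here is a minimal toy model. It assumes, as described above, a 16-byte INFO block directly in front of STORAGE whose first four bytes hold the requested size. The names `kInfoSize`, `make_block`, and `stored_size` are hypothetical, and this is not how the MSVC heap is actually implemented.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Toy model only: assumed layout [16-byte INFO][STORAGE], where the first
// four bytes of INFO hold the requested size. Real heaps differ.
constexpr std::size_t kInfoSize = 16;  // hypothetical INFO size

// Writes `size` into the INFO block at the start of `block` and returns a
// pointer to STORAGE, i.e. what the caller of the allocator would receive.
unsigned char* make_block(unsigned char* block, std::uint32_t size) {
    std::memcpy(block, &size, sizeof size);
    return block + kInfoSize;
}

// Recovers the size by reading the four bytes that start 16 bytes in front
// of `storage` (memcpy avoids misaligned or type-punned pointer reads).
std::uint32_t stored_size(const unsigned char* storage) {
    assert(storage != nullptr);
    std::uint32_t size = 0;
    std::memcpy(&size, storage - kInfoSize, sizeof size);
    return size;
}
```

For example, with `unsigned char buf[64] = {};`, `make_block(buf, 4)` returns `buf + 16`, and calling `stored_size` on that pointer gives back 4. Both writing and reading use the machine's native byte order, so the round trip works regardless of endianness.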

If you now allocate a second 4-byte integer (green box), its position will be at least two 16-byte boundaries further on, since its INFO block (yellow/red) must fit in front of it, which is not possible at the very next boundary. The red block again contains the number of bytes.

As you can easily see, if the green block were 16 bytes earlier, the red and the orange blocks would overlap, which is impossible.
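The spacing argument can be written down as a small calculation. This sketch assumes the simplified rule from the picture: STORAGE starts on a 16-byte boundary, and a 16-byte INFO block must fit between the end of the previous STORAGE and the start of the next. The constants and `next_storage_offset` are hypothetical names, not MSVC's documented placement algorithm.

```cpp
#include <cassert>
#include <cstddef>

// Assumed placement rule (simplified from the picture, not MSVC's documented
// algorithm): STORAGE starts on a 16-byte boundary, and a 16-byte INFO block
// must fit between the end of the previous STORAGE and the start of the next.
constexpr std::size_t kBoundary = 16;  // hypothetical granularity
constexpr std::size_t kInfoSize = 16;  // hypothetical INFO size

// Rounds n up to the next multiple of align (align must be a power of two).
std::size_t round_up(std::size_t n, std::size_t align) {
    assert(align != 0 && (align & (align - 1)) == 0);
    return (n + align - 1) & ~(align - 1);
}

// Earliest STORAGE offset for the next block, given the previous block's
// STORAGE offset and size.
std::size_t next_storage_offset(std::size_t prev_offset, std::size_t prev_size) {
    return round_up(prev_offset + prev_size + kInfoSize, kBoundary);
}
```

With the orange block at offset 32 and 4 bytes long, the next INFO block cannot start before offset 36, so the earliest boundary that leaves 16 bytes for it is 64, two boundaries after the orange block. Placing the green block at 48 instead would put its INFO block at bytes 32 to 47, on top of the orange one.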

You can check this yourself. I am using MSVC 2012, and this worked for me:

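    #include <iostream>

    int main() {
        // Peek at the 4 bytes that sit 16 bytes in front of the returned pointer.
        // This is undefined behavior and only illustrates MSVC's heap layout.
        char* mem = new char[4096];
        std::cout << "Number of allocated bytes for mem is: " << *(unsigned int*)(mem - 16) << std::endl;
        delete[] mem;

        double* dmem = new double[4096];
        std::cout << "Number of allocated bytes for dmem is: " << *(unsigned int*)((char*)dmem - 16) << std::endl;
        delete[] dmem;
    }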

This prints:

    Number of allocated bytes for mem is: 4096
    Number of allocated bytes for dmem is: 32768

Both values are correct (4096 chars × 1 byte and 4096 doubles × 8 bytes). Therefore, with MSVC 2012, a memory allocation using new carries an additional INFO block that is at least 16 bytes in size.
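If you want a rough look at the overhead without reading in front of a pointer, a less invasive (but also less precise) probe is to compare the addresses of two small allocations that are alive at the same time. This is a sketch under assumptions: consecutive allocations are not guaranteed to be adjacent, converting pointers to `std::uintptr_t` gives implementation-defined values (on mainstream platforms these are plain addresses), and the helper name `distance_between_two_ints` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>

// Allocates two ints that are alive at the same time and returns the absolute
// distance between their addresses. Any INFO block and padding between them
// show up in this distance, but the allocator is free to place them anywhere.
std::size_t distance_between_two_ints() {
    auto a = std::make_unique<int>(1);
    auto b = std::make_unique<int>(2);
    auto pa = reinterpret_cast<std::uintptr_t>(a.get());
    auto pb = reinterpret_cast<std::uintptr_t>(b.get());
    assert(pa != pb);  // two live objects never share an address
    return pa > pb ? pa - pb : pb - pa;
}
```

Under the 16-byte model described above you would expect 32 bytes for two `int`s, but other allocators, build configurations, or a fragmented heap can give quite different numbers, so treat the result as a hint rather than a measurement.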