Updated to use pandas 0.13.1

No. See http://pandas.pydata.org/pandas-docs/dev/io.html#notes-caveats. There are various ways to work around this, e.g. have your different threads/processes write out their computation results, then have a single process combine them.
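A minimal sketch of that "workers write, one process combines" pattern follows. It assumes threads as the workers and pickle files as the intermediate format; both are illustrative choices, and the names `compute_and_dump`, `combine` and `run` are hypothetical. The point is only that the HDF5 file is opened by a single writer.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor

import numpy as np
import pandas as pd


def compute_and_dump(worker_id, out_dir, n_rows=1000):
    """Hypothetical worker: compute a result and write it to its own file."""
    rng = np.random.RandomState(worker_id)
    df = pd.DataFrame(rng.randn(n_rows, 2), columns=list("AB"))
    df["worker"] = worker_id
    path = os.path.join(out_dir, "part_%d.pkl" % worker_id)
    df.to_pickle(path)  # each worker touches only its own file
    return path


def combine(paths, hdf_path, key="results"):
    """Single writer: the only code that ever opens the HDF5 file."""
    combined = pd.concat([pd.read_pickle(p) for p in paths], ignore_index=True)
    combined.to_hdf(hdf_path, key=key, format="table", mode="w")
    return combined


def run(n_workers=4, n_rows=1000):
    out_dir = tempfile.mkdtemp()
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # pool.map preserves the order of the worker ids
        paths = list(pool.map(lambda i: compute_and_dump(i, out_dir, n_rows),
                              range(n_workers)))
    hdf_path = os.path.join(out_dir, "combined.h5")
    combine(paths, hdf_path)
    return hdf_path
```

Calling `run()` returns the path of the combined store, which can then be read back with `pd.read_hdf(path, "results")`.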

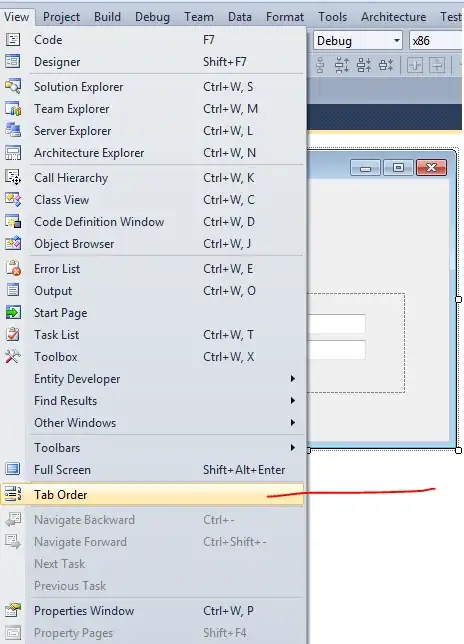

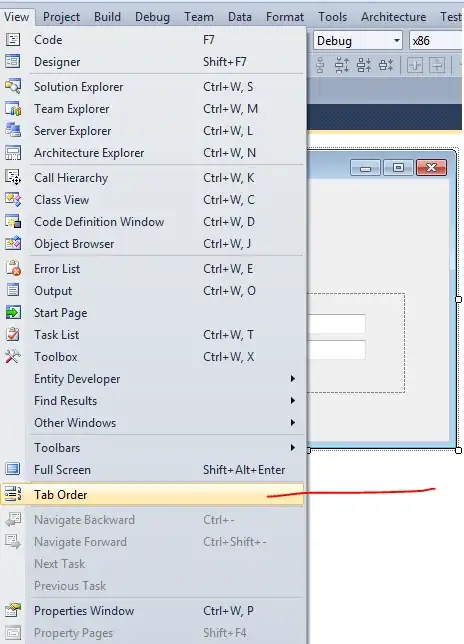

Depending on the type of data you store, how you store it, and how you want to retrieve it, HDF5 can offer vastly better performance. Float data stored in an HDFStore as a single compressed array (in other words, in a format that does not allow querying) is written and read amazingly fast. Even the table format, which slows down writes, offers quite good write performance. For some detailed comparisons, see PyTables (http://www.pytables.org/), which is what HDFStore uses under the hood; here's a nice picture:

Since PyTables 2.3 the queries are now indexed, so performance is actually MUCH better than this.
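To make the fixed-versus-table distinction concrete, here is a small sketch. The compression settings (`complevel=9`, `complib="zlib"`), the choice of `A` as a data column, and the helper name `write_both_formats` are assumptions for illustration, not tuned recommendations.

```python
import os
import tempfile

import numpy as np
import pandas as pd


def write_both_formats(df, out_dir):
    """Write the same frame once in fixed format and once in table format."""
    fixed_path = os.path.join(out_dir, "test_fixed.hdf")
    table_path = os.path.join(out_dir, "test_table.hdf")
    # Fixed format (the default): compressed, fast, but read back as a whole.
    df.to_hdf(fixed_path, key="test", mode="w", complevel=9, complib="zlib")
    # Table format: slower to write, but columns listed in data_columns
    # can be used in a `where` query.
    df.to_hdf(table_path, key="test", mode="w", format="table",
              data_columns=["A"])
    return fixed_path, table_path


df = pd.DataFrame(np.random.randn(10000, 2), columns=list("AB"))
fixed_path, table_path = write_both_formats(df, tempfile.mkdtemp())

whole = pd.read_hdf(fixed_path, "test")                      # everything
positive_a = pd.read_hdf(table_path, "test", where="A > 0")  # only matching rows
```

The table-format read only pulls the rows that satisfy the condition, which is the kind of query the indexing mentioned above speeds up.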

To answer your question, if you want any kind of performance, HDF5 is the way to go.

Writing:

    In [14]: %timeit test_sql_write(df)
    1 loops, best of 3: 6.24 s per loop

    In [15]: %timeit test_hdf_fixed_write(df)
    1 loops, best of 3: 237 ms per loop

    In [16]: %timeit test_hdf_table_write(df)
    1 loops, best of 3: 901 ms per loop

    In [17]: %timeit test_csv_write(df)
    1 loops, best of 3: 3.44 s per loop

Reading:

    In [18]: %timeit test_sql_read()
    1 loops, best of 3: 766 ms per loop

    In [19]: %timeit test_hdf_fixed_read()
    10 loops, best of 3: 19.1 ms per loop

    In [20]: %timeit test_hdf_table_read()
    10 loops, best of 3: 39 ms per loop

    In [22]: %timeit test_csv_read()
    1 loops, best of 3: 620 ms per loop
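To put the timings above in perspective, the snippet below simply divides each figure by the fastest one in its group. The numbers are copied from the `%timeit` output; the dictionary and function names are only for illustration.

```python
# Timings copied from the %timeit output above, converted to seconds.
WRITE_SECONDS = {"sql": 6.24, "hdf_fixed": 0.237, "hdf_table": 0.901, "csv": 3.44}
READ_SECONDS = {"sql": 0.766, "hdf_fixed": 0.0191, "hdf_table": 0.039, "csv": 0.620}


def slowdown_vs_fastest(timings):
    """Return each timing as a multiple of the fastest entry."""
    fastest = min(timings.values())
    return {name: seconds / fastest for name, seconds in timings.items()}


for label, timings in (("write", WRITE_SECONDS), ("read", READ_SECONDS)):
    ratios = slowdown_vs_fastest(timings)
    for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1]):
        print("%-5s %-10s %5.1fx" % (label, name, ratio))
```

On these numbers the fixed-format HDF write is roughly 26 times faster than the SQLite write, and the fixed-format HDF read is roughly 40 times faster than the SQLite read.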

And here's the code:

    import sqlite3
    import os

    import pandas as pd
    from numpy.random import randn
    from pandas import DataFrame
    from pandas.io import sql

    In [3]: df = DataFrame(randn(1000000,2),columns=list('AB'))

    <class 'pandas.core.frame.DataFrame'>
    Int64Index: 1000000 entries, 0 to 999999
    Data columns (total 2 columns):
    A    1000000  non-null values
    B    1000000  non-null values
    dtypes: float64(2)

    def test_sql_write(df):
        # start from a fresh database file on every run
        if os.path.exists('test.sql'):
            os.remove('test.sql')
        sql_db = sqlite3.connect('test.sql')
        # sql.write_frame / sql.read_frame are the pandas 0.13.1 API
        sql.write_frame(df, name='test_table', con=sql_db)
        sql_db.close()

    def test_sql_read():
        sql_db = sqlite3.connect('test.sql')
        sql.read_frame("select * from test_table", sql_db)
        sql_db.close()

    def test_hdf_fixed_write(df):
        # fixed format is the default for to_hdf
        df.to_hdf('test_fixed.hdf','test',mode='w')

    def test_csv_read():
        pd.read_csv('test.csv',index_col=0)

    def test_csv_write(df):
        df.to_csv('test.csv',mode='w')

    def test_hdf_fixed_read():
        pd.read_hdf('test_fixed.hdf','test')

    def test_hdf_table_write(df):
        # table format is queryable, at the cost of slower writes
        df.to_hdf('test_table.hdf','test',format='table',mode='w')

    def test_hdf_table_read():
        pd.read_hdf('test_table.hdf','test')

Of course YMMV.
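The SQL functions above use `sql.write_frame` and `sql.read_frame`, which are the pandas 0.13.1 names. On later pandas versions the equivalent round trip can be sketched with `DataFrame.to_sql` and `pd.read_sql`. The in-memory database and the helper name `sql_roundtrip` below are assumptions for illustration, and this sketch was not part of the timings above.

```python
import sqlite3

import numpy as np
import pandas as pd


def sql_roundtrip(df, table="test_table"):
    """Write df to an in-memory SQLite database and read it straight back."""
    con = sqlite3.connect(":memory:")
    try:
        df.to_sql(table, con, index=False)
        return pd.read_sql("select * from %s" % table, con)
    finally:
        con.close()


df = pd.DataFrame(np.random.randn(1000, 2), columns=list("AB"))
result = sql_roundtrip(df)
```

To benchmark this variant the same way, point `sqlite3.connect` at a file such as `test.sql` instead of `:memory:` and wrap the write and read halves in separate functions.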