After some deeper reading, I found that the Javadoc says the Character methods that take a char parameter do not support all Unicode values (they cannot handle supplementary characters), whereas those that take a code point (i.e., an int) do.

So I ran the following test:

    // U+00A9 (copyright sign): fits in a single char
    int codePointCopyright = Integer.parseInt("00A9", 16);
    System.out.println(Integer.toHexString(codePointCopyright));
    System.out.println(Character.isValidCodePoint(codePointCopyright));
    char[] toChars = Character.toChars(codePointCopyright);
    System.out.println(toChars);
    System.out.println();

    // U+20011 (a supplementary CJK ideograph): needs two chars
    int codePointAsian = Integer.parseInt("20011", 16);
    System.out.println(Integer.toHexString(codePointAsian));
    System.out.println(Character.isValidCodePoint(codePointAsian));
    char[] toCharsAsian = Character.toChars(codePointAsian);
    System.out.println(toCharsAsian);

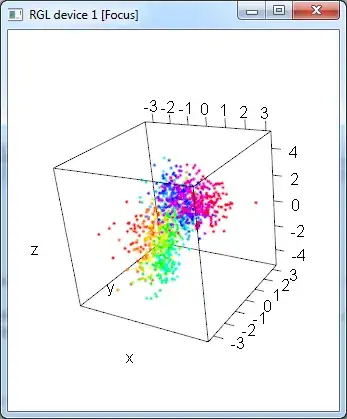

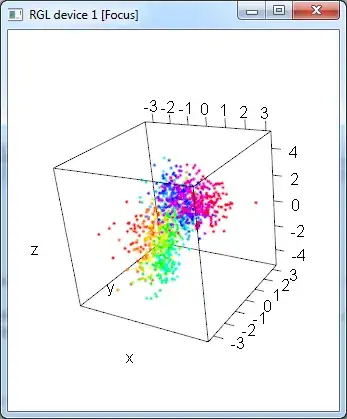

and I am getting:

Therefore, my question should not be about a single char but about an array of chars, since one Unicode character may need more than one char (a surrogate pair). An int code point, on the other hand, covers every case.
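To make the difference between chars and code points concrete, here is a minimal sketch using the same two code points as the test above. The class name `CodePointDemo` and the helper `countCodePoints` are my own illustrative names, not part of any library; everything else is standard `java.lang.Character` and `String` API.

```java
class CodePointDemo {
    // Hypothetical helper: number of Unicode code points in s,
    // as opposed to s.length(), which counts 16-bit chars.
    static int countCodePoints(String s) {
        return s.codePointCount(0, s.length());
    }

    public static void main(String[] args) {
        int copyright = 0x00A9; // Basic Multilingual Plane
        int cjk = 0x20011;      // supplementary character

        // How many chars each code point needs
        System.out.println(Character.charCount(copyright)); // 1
        System.out.println(Character.charCount(cjk));       // 2

        // The supplementary code point becomes a surrogate pair
        char[] pair = Character.toChars(cjk);
        System.out.println(Character.isHighSurrogate(pair[0])); // true
        System.out.println(Character.isLowSurrogate(pair[1]));  // true

        // The char-based overload sees only half of the pair,
        // while the int-based overload sees the whole character
        System.out.println(Character.isLetter(pair[0])); // false
        System.out.println(Character.isLetter(cjk));     // true

        // A String holding one such character has length 2
        String s = new String(pair);
        System.out.println(s.length());         // 2
        System.out.println(countCodePoints(s)); // 1
    }
}
```

When walking over a String that may contain such characters, `s.codePoints()` (Java 8+) yields one int per character, which avoids splitting surrogate pairs.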