For example, I have 100 pictures whose resolution is the same, and I want to merge them into one picture. For the final picture, the RGB value of each pixel is the average of the 100 pictures' at that position. I know the getdata function can work in this situation, but is there a simpler and faster way to do this in PIL(Python Image Library)?

- 45,281

- 39

- 129

- 237

-

1Have you considered using NumPy as well? – Ignacio Vazquez-Abrams Jun 25 '13 at 07:25

-

@SteveBarnes answer https://stackoverflow.com/a/17291771/2836621 is the best one here, IMHO. Building enormous lists of images is an unnecessary, huge, time-consuming waste of RAM. – Mark Setchell Jan 06 '22 at 09:57

6 Answers

Let's assume that your images are all .png files and they are all stored in the current working directory. The python code below will do what you want. As Ignacio suggests, using numpy along with PIL is the key here. You just need to be a little bit careful about switching between integer and float arrays when building your average pixel intensities.

import os, numpy, PIL

from PIL import Image

# Access all PNG files in directory

allfiles=os.listdir(os.getcwd())

imlist=[filename for filename in allfiles if filename[-4:] in [".png",".PNG"]]

# Assuming all images are the same size, get dimensions of first image

w,h=Image.open(imlist[0]).size

N=len(imlist)

# Create a numpy array of floats to store the average (assume RGB images)

arr=numpy.zeros((h,w,3),numpy.float)

# Build up average pixel intensities, casting each image as an array of floats

for im in imlist:

imarr=numpy.array(Image.open(im),dtype=numpy.float)

arr=arr+imarr/N

# Round values in array and cast as 8-bit integer

arr=numpy.array(numpy.round(arr),dtype=numpy.uint8)

# Generate, save and preview final image

out=Image.fromarray(arr,mode="RGB")

out.save("Average.png")

out.show()

The image below was generated from a sequence of HD video frames using the code above.

- 2,558

- 21

- 28

-

1Casting an image to an array of numbers seems... gross. Especially because this is a fairly standard image processing operation. Does PIL/Pillow not have a native functionality for layering images with different blending modes? – Matt Jun 16 '15 at 00:17

-

26

-

There is a chance that the code will throw an error if the image is palletised. There is a simple fix though: https://stackoverflow.com/questions/60681654/cannot-convert-pil-image-from-paint-net-to-numpy-array – Mar 14 '20 at 12:02

-

Faster and more precise averages can be achieved by integer summations followed by one final division. – Jan Heldal Jun 23 '21 at 12:52

-

LOL @Matt how do you represent raster images if not with integers for RGB values? – Virgiliu Aug 02 '23 at 14:39

-

Float values are one alternative, but that's not what I feel is "gross." What seems "gross" is needing to convert an image object into a matrix math object in order to do AN IMAGE OPERATION. This feels like converting a string to a byte array and then doing byte math to convert all the letters to upper-case. Yes, ultimately this is what's going on behind the scenes, but I contend that it's just as silly for "image.alphaOver()" to not exist, as it is for "string.toUpper()" to not exist. – Matt Aug 03 '23 at 15:52

I find it difficult to imagine a situation where memory is an issue here, but in the (unlikely) event that you absolutely cannot afford to create the array of floats required for my original answer, you could use PIL's blend function, as suggested by @mHurley as follows:

# Alternative method using PIL blend function

avg=Image.open(imlist[0])

for i in xrange(1,N):

img=Image.open(imlist[i])

avg=Image.blend(avg,img,1.0/float(i+1))

avg.save("Blend.png")

avg.show()

You could derive the correct sequence of alpha values, starting with the definition from PIL's blend function:

out = image1 * (1.0 - alpha) + image2 * alpha

Think about applying that function recursively to a vector of numbers (rather than images) to get the mean of the vector. For a vector of length N, you would need N-1 blending operations, with N-1 different values of alpha.

However, it's probably easier to think intuitively about the operations. At each step you want the avg image to contain equal proportions of the source images from earlier steps. When blending the first and second source images, alpha should be 1/2 to ensure equal proportions. When blending the third with the the average of the first two, you would like the new image to be made up of 1/3 of the third image, with the remainder made up of the average of the previous images (current value of avg), and so on.

In principle this new answer, based on blending, should be fine. However I don't know exactly how the blend function works. This makes me worry about how the pixel values are rounded after each iteration.

The image below was generated from 288 source images using the code from my original answer:

On the other hand, this image was generated by repeatedly applying PIL's blend function to the same 288 images:

I hope you can see that the outputs from the two algorithms are noticeably different. I expect this is because of accumulation of small rounding errors during repeated application of Image.blend

I strongly recommend my original answer over this alternative.

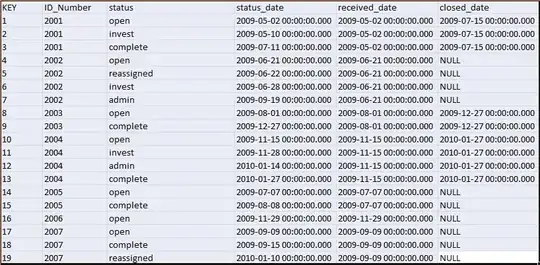

One can also use numpy mean function for averaging. The code looks better and works faster.

Here the comparison of timing and results for 700 noisy grayscale images of faces:

def average_img_1(imlist):

# Assuming all images are the same size, get dimensions of first image

w,h=Image.open(imlist[0]).size

N=len(imlist)

# Create a numpy array of floats to store the average (assume RGB images)

arr=np.zeros((h,w),np.float)

# Build up average pixel intensities, casting each image as an array of floats

for im in imlist:

imarr=np.array(Image.open(im),dtype=np.float)

arr=arr+imarr/N

out = Image.fromarray(arr)

return out

def average_img_2(imlist):

# Alternative method using PIL blend function

N = len(imlist)

avg=Image.open(imlist[0])

for i in xrange(1,N):

img=Image.open(imlist[i])

avg=Image.blend(avg,img,1.0/float(i+1))

return avg

def average_img_3(imlist):

# Alternative method using numpy mean function

images = np.array([np.array(Image.open(fname)) for fname in imlist])

arr = np.array(np.mean(images, axis=(0)), dtype=np.uint8)

out = Image.fromarray(arr)

return out

average_img_1()

100 loops, best of 3: 362 ms per loop

average_img_2()

100 loops, best of 3: 340 ms per loop

average_img_3()

100 loops, best of 3: 311 ms per loop

BTW, the results of averaging are quite different. I think the first method lose information during averaging. And the second one has some artifacts.

average_img_1

average_img_2

average_img_3

- 2,580

- 1

- 22

- 25

-

1The images look very interesting! I am surprised that img_1 and img_3 look so different. It's important to note that the img_3 method, using numpy.mean requires you to load all data into memory at once, which could be a big problem for large batches of images. If the raw data are publicly available, I'd be interested to get to the bottom of the differences between img_1 and img_3. – CnrL Apr 04 '17 at 20:00

in case anybody is interested in a blueprint numpy solution (I was actually looking for it), here's the code:

mean_frame = np.mean(([frame for frame in frames]), axis=0)

- 10,500

- 6

- 27

- 47

I would consider creating an array of x by y integers all starting at (0, 0, 0) and then for each pixel in each file add the RGB value in, divide all the values by the number of images and then create the image from that - you will probably find that numpy can help.

- 27,618

- 6

- 63

- 73

-

1This is the very best answer here - by some margin. Blending images is inaccurate and slow. Building massive lists of all images in memory is a HUGE waste of RAM, especially when 100+ images are involved. Sum the images into a **single** aggregate image, then divide by the number of images. – Mark Setchell Jan 06 '22 at 09:55

I ran into MemoryErrors when trying the method in the accepted answer. I found a way to optimize that seems to produce the same result. Basically, you blend one image at a time, instead of adding them all up and dividing.

N=len(images_to_blend)

avg = Image.open(images_to_blend[0])

for im in images_to_blend: #assuming your list is filenames, not images

img = Image.open(im)

avg = Image.blend(avg, img, 1/N)

avg.save(blah)

This does two things, you don't have to have two very dense copies of the image while you're turning the image into an array, and you don't have to use 64-bit floats at all. You get similarly high precision, with smaller numbers. The results APPEAR to be the same, though I'd appreciate if someone checked my math.

- 775

- 7

- 24

-

1Unfortunately this is not correct. To get such a scheme to work, you would have to blend with a different opacity at each step. Even if you calculate a vector of opacities correctly, you would also have rounding error at each step. – CnrL Jun 16 '15 at 10:26

-

Thanks! Can you walk me through the math? I always have a hard time with this kind of thing... I think the solution might be "for n from 1 to n, blend amount = 1/n" I'm not as worried about the rounding error as I am the MemoryError that prevents the other algorithm from running at all. – Matt Jun 16 '15 at 14:32