After recently reading about a phenomenon known as "catastrophic backtracking", I suspect that my own regex pattern is causing CPU issues. I use this expression to scan large HTML strings of 100k-200k characters each. The pattern matches IP addresses in the format IP:port (e.g. 1.1.1.1:90). The pattern is as follows:

    private static Regex regIp = new Regex(
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[1-9])\." +
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[1-9]|0)\." +
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[1-9]|0)\." +
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[0-9])\:[0-9]{1,5}",
        RegexOptions.IgnoreCase | RegexOptions.Compiled | RegexOptions.CultureInvariant);

The expression is used as follows:

    MatchCollection matchCol = regIp.Matches(response);
    foreach (Match m in matchCol)
    {
        doWorkWithMatch(m);
    }

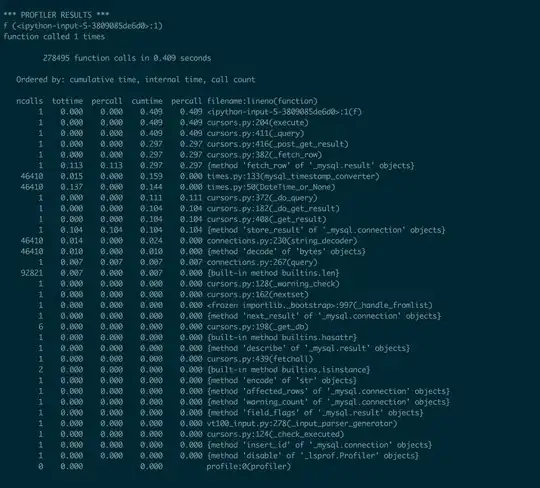

After running about 100 strings through this regex pattern, it starts to choke the computer and use 99% of the CPU. Is there a more logical way to structure this expression to reduce CPU usage and avoid backtracking? I'm not sure if backtracking is even occurring or if it is just an issue of too many threads executing regex evaluations simultaneously - all input is welcome.
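One way to tell the two suspected causes apart, as a rough sketch: run the same pattern on a single thread with a match timeout and a stopwatch. If one 100k-200k character string finishes quickly on its own, backtracking is probably not the culprit and the number of simultaneous evaluations becomes the more likely suspect. `IpScanProbe` and `CountMatches` are hypothetical names made up for this illustration, and the `Regex` constructor overload that takes a `TimeSpan` assumes .NET Framework 4.5 or later.

```csharp
using System;
using System.Diagnostics;
using System.Text.RegularExpressions;

// Hypothetical diagnostic helper; the class and method names are illustrative only.
public static class IpScanProbe
{
    // Same pattern as above, split so that each line holds one octet.
    private const string Pattern =
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[1-9])\." +
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[1-9]|0)\." +
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[1-9]|0)\." +
        @"(25[0-5]|2[0-4][0-9]|[0-1]{1}[0-9]{2}|[1-9]{1}[0-9]{1}|[0-9])\:[0-9]{1,5}";

    // Returns the number of matches, or -1 if scanning exceeded the timeout.
    public static int CountMatches(string input, TimeSpan timeout)
    {
        // Built per call to keep the sketch short; a real probe would cache the instance.
        var regex = new Regex(Pattern, RegexOptions.CultureInvariant, timeout);
        var stopwatch = Stopwatch.StartNew();
        try
        {
            // Reading Count forces the lazily evaluated MatchCollection to scan the whole input.
            int count = regex.Matches(input).Count;
            Console.WriteLine($"{count} matches in {stopwatch.ElapsedMilliseconds} ms");
            return count;
        }
        catch (RegexMatchTimeoutException)
        {
            Console.WriteLine($"Timed out after {stopwatch.ElapsedMilliseconds} ms");
            return -1;
        }
    }
}
```

Calling `IpScanProbe.CountMatches(response, TimeSpan.FromSeconds(2))` on one of the slow strings, outside of any thread pool, would show whether a single scan is expensive by itself. The two-second limit is an arbitrary value chosen for the example.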