I am running the following simple program in Visual Studio 2010. The purpose is to see what happens when the variable `c` is declared as `char` rather than `int`, since `getchar()` returns an `int` (a widely known pitfall in C; see the question *int c = getchar()?*).

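```c
#include <stdio.h>

int main(void)
{
    char c;      /* variant under test: getchar()'s int result is narrowed to char */
    //int c;     /* alternative: keep the full int so EOF stays distinct */

    /* Echo every character until getchar() reports end-of-file. */
    while ((c = getchar()) != EOF)
        putchar(c);

    /* Print the value that ended the loop (EOF is -1 with MSVC). */
    printf("%d\n", c);

    return 0;
}
```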

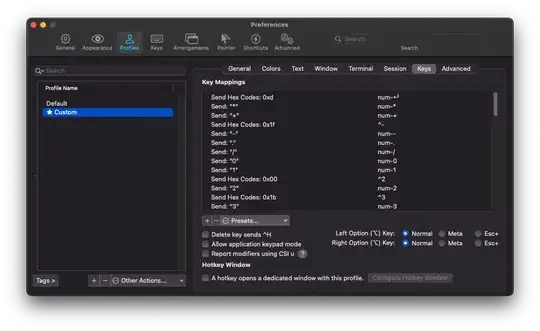

When I type some characters at the console, I see a strange phenomenon, shown in the figure above. If EOF (Ctrl+Z) is entered after a sequence of characters on the same line (1st line), it is not recognized: a small right arrow is echoed instead (2nd line). However, if it is entered on a line by itself (4th line), it is recognized and the program terminates.

I haven't tested this program on Linux, but can someone explain why this happens?