gprof was invented specifically because prof only gives you "self time".

"Self time" tells you what fraction of the total time the program counter spends in each routine.
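To make "self time" concrete, here is a minimal Python sketch. It assumes the profiler has already collected a list of sampled program-counter values, and it uses a made-up, sorted symbol table (`SYMBOLS`, hypothetical addresses and names) to map each sample to the routine whose address range contains it. A real profiler would get those ranges from the executable's symbol table.

```python
import bisect
from collections import Counter

# Hypothetical symbol table for illustration: (start address, routine name),
# sorted by address. Each routine is assumed to run up to the next start
# address; the last one is treated as open-ended.
SYMBOLS = [(0x1000, "A"), (0x1100, "B"), (0x1200, "C")]
STARTS = [start for start, _ in SYMBOLS]


def routine_for(pc):
    """Map a sampled program-counter value to the routine containing it."""
    i = bisect.bisect_right(STARTS, pc) - 1
    if i < 0:
        raise ValueError(f"pc {pc:#x} is below every known routine")
    return SYMBOLS[i][1]


def self_time_fractions(pc_samples):
    """Fraction of PC samples that landed inside each routine (its self time)."""
    counts = Counter(routine_for(pc) for pc in pc_samples)
    total = len(pc_samples)
    if total == 0:
        return {name: 0.0 for _, name in SYMBOLS}
    return {name: counts[name] / total for _, name in SYMBOLS}


# Every sample lands in C, so A and B get no self time at all.
print(self_time_fractions([0x1210] * 10))  # → {'A': 0.0, 'B': 0.0, 'C': 1.0}
```

Note that nothing in this calculation knows who called whom; that is exactly the information self time leaves out.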

That's fine if the only kinds of "bottlenecks" you need to consider are solved by shaving cycles at the bottom of the call stack.

What about the kind of "bottleneck" that you solve by reducing the number of calls to subroutines?

That's what gprof was supposed to help you find, by "charging back" self time to callers.

"Inclusive time" of a routine is its self time plus the time that bubbles up from the routines it calls.
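In gprof's case, the "bubbling up" is done by apportioning. Ignoring recursion, each callee's inclusive time is divided among its callers in proportion to how many calls each caller made, which assumes every call to a given routine takes about the same time. Using notation introduced here for illustration, with $n(r \to c)$ the number of calls from $r$ to $c$ and $N(c)$ the total number of calls to $c$ from all callers:

$$
T_{\text{incl}}(r) = T_{\text{self}}(r) + \sum_{c \in \text{callees}(r)} \frac{n(r \to c)}{N(c)}\, T_{\text{incl}}(c)
$$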

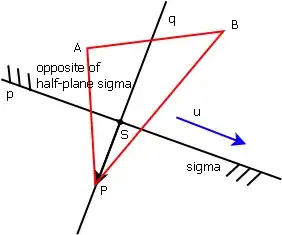

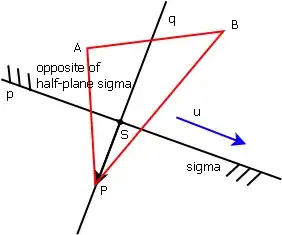

Here's a simple example of what gprof does:

A calls B ten times, B calls C ten times, and C runs a CPU-intensive loop for 10 sample times.

Notice that none of the samples land in A or B because the program counter spends practically all of its time in C.

By counting the calls and recording who made them, gprof does some math and propagates C's time upward to B and A.
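Here is a minimal Python sketch of that propagation, assuming the call graph has no cycles. The function name `inclusive_times` and its input shapes are hypothetical; the numbers in the example are also hypothetical, with all 10 samples taken to land in C. Each callee's inclusive time is split among its callers in proportion to their call counts.

```python
from collections import defaultdict


def inclusive_times(self_time, arcs):
    """Sketch of gprof-style propagation of time from callees to callers.

    self_time: {routine: self samples}
    arcs:      {(caller, callee): number of calls}
    Each callee's inclusive time is split among its callers in proportion
    to the number of calls each made. Assumes the call graph has no cycles.
    """
    total_calls = defaultdict(int)   # calls into each routine, from anyone
    callees = defaultdict(list)      # caller -> [(callee, count), ...]
    for (caller, callee), n in arcs.items():
        total_calls[callee] += n
        callees[caller].append((callee, n))

    memo, in_progress = {}, set()

    def incl(r):
        if r in memo:
            return memo[r]
        if r in in_progress:
            raise ValueError(f"recursion through {r!r}; this sketch needs an acyclic graph")
        in_progress.add(r)
        t = float(self_time.get(r, 0))
        for c, n in callees[r]:
            # Charge back this caller's share of c's inclusive time.
            t += incl(c) * n / total_calls[c]
        in_progress.discard(r)
        memo[r] = t
        return t

    routines = set(self_time) | {r for arc in arcs for r in arc}
    return {r: incl(r) for r in sorted(routines)}


# Hypothetical numbers: A calls B 10 times, B calls C 10 times,
# and all 10 samples land in C.
print(inclusive_times({"C": 10}, {("A", "B"): 10, ("B", "C"): 10}))
# → {'A': 10.0, 'B': 10.0, 'C': 10.0}
```

A and B each end up with all of the inclusive time even though neither has any self time, which is the hint that calling B or C less often could pay off. The proportional split is gprof's simplifying assumption: if some calls to a routine are much more expensive than others, the split can be wrong, and recursion (a cycle in the graph) is where this simple scheme stops working, which is why the sketch rejects it.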

So you see, a way to speed up this program is to have A call B fewer times, or have B call C fewer times, or both.

prof could not give you that kind of information; you'd have to guess.

If you're thinking of using gprof, be aware of its problems: it doesn't give line-level information, it is blind to I/O, it gets confused by recursion, and it misleads you into thinking that certain things help you find problems, such as the sample rate, the call graph, and invocation counts.

Keep in mind that its authors only claimed it was a measurement tool, not a problem-finding tool, even though most people think it's the latter.