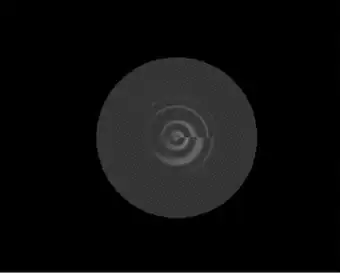

The 3D OBJ (Wavefront) model I'm loading is being displayed incorrectly once I apply shading to it. For simplicity, I'm combining the fixed pipeline with shaders.

There are two problems I think I can spot. The first is the light: it doesn't seem static. It seems to rotate as I rotate the object with transformations. I'm using gluLookAt + gluPerspective to set up my view and projection matrices, and I've read that if you set the light position in OpenGL before calling gluLookAt, the light should in theory remain static. That doesn't seem to be the case here.

The second issue is the way the model is being shaded. It's choppy instead of smooth, and I'm not sure why that ring effect appears. I've loaded the same mesh in ShaderMaker with the same vertex and fragment shaders, and everything draws perfectly there, so it seems I'm doing something wrong in my drawing routine.

My vertex shader:

    #version 120

    varying vec3 N;
    varying vec3 v;

    void main(void)
    {
        v = vec3(gl_ModelViewMatrix * gl_Vertex);
        N = normalize(gl_NormalMatrix * gl_Normal);
        gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
    }

My fragment shader:

    #version 120

    varying vec3 N;
    varying vec3 v;

    #define MAX_LIGHTS 2

    void main (void)
    {
        vec4 finalColour;
        vec4 amb;
        amb = vec4(0.2,0.2,0.2,1.0);
        vec4 diff;
        diff = vec4(1.0,1.0,1.0,1.0);

        vec3 L = normalize(gl_LightSource[0].position.xyz - v);
        vec3 E = normalize(v);
        vec3 R = normalize(reflect(L,-N));

        //vec4 Iamb = gl_FrontLightProduct[i].ambient;
        vec4 Iamb = amb;

        //vec4 Idiff = gl_FrontLightProduct[i].diffuse * max(dot(N,L), 0.0);
        vec4 Idiff = diff * max(dot(N,L), 0.0);
        Idiff = clamp(Idiff, 0.0, 1.0);

        vec4 Ispec = gl_FrontLightProduct[0].specular
                     * pow(max(dot(R,E),0.0),0.0*gl_FrontMaterial.shininess);
        Ispec = clamp(Ispec, 0.0, 1.0);

        finalColour += Iamb + Idiff;
        gl_FragColor = gl_FrontLightModelProduct.sceneColor + finalColour;
    }

EDIT: I've added my drawing routine.

    [[self openGLContext] makeCurrentContext];
    [sM useProgram];

    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
    glEnable(GL_DEPTH_TEST);
    glEnable(GL_LIGHTING);
    glClearColor(0.0f,0.0f,0.0f,1.0f);

    glMatrixMode(GL_PROJECTION);
    glLoadIdentity();
    float aspect = (float)1024 / (float)576;
    gluPerspective(15.0, aspect, 1.0, 15.0);

    glMatrixMode(GL_MODELVIEW);
    float lpos[4] = {0.0,2.0,0.0,1.0};
    glLoadIdentity();
    glLightfv(GL_LIGHT0, GL_POSITION, lpos);
    gluLookAt(0.0, 0.0, 10.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0);

    glTranslatef(0.0f, 0.0f, 0.0f);
    glScalef(0.5f, 0.5f, 0.5f);
    glRotatef(self.rotValue, 1.0f, 1.0f, 0.0f);

    glEnableClientState(GL_VERTEX_ARRAY);
    glEnableClientState(GL_NORMAL_ARRAY);

    NSLog(@"%i", self.W);
    if(self.W == NO)
    {
        glPolygonMode(GL_FRONT_AND_BACK, GL_FILL);
    }
    if (self.W == YES)
    {
        glPolygonMode(GL_FRONT_AND_BACK, GL_LINE);
    }

    glColor3f(1.0f,0.0f,0.0f);

    glBindBuffer(GL_ARRAY_BUFFER, vBo[0]);
    glVertexPointer(3, GL_FLOAT, 0, 0);
    glBindBuffer(GL_ARRAY_BUFFER, vBo[1]);
    glNormalPointer(GL_FLOAT, 0, 0);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, vBo[2]);
    glDrawElements(GL_TRIANGLES, object.indicesCount, GL_UNSIGNED_INT, 0);
    glBindBuffer(GL_ARRAY_BUFFER, 0);

    glDisableClientState(GL_NORMAL_ARRAY);
    glDisableClientState(GL_VERTEX_ARRAY);

    GLenum err = glGetError();
    if (err != GL_NO_ERROR)
        NSLog(@"glGetError(): %i", (int)err);

    [[self openGLContext] flushBuffer];

My shaders are actually borrowed from an online source. I have written my own, but I wanted to make sure I was using a solid one from someone experienced. I only intend to use the borrowed ones until I can fix this problem.