I’m working on a genetic machine learning project developed in .NET (as opposed to MATLAB, my norm). I’m no pro .NET coder, so please excuse any noobish implementations.

The project itself is huge, so I won’t bore you with the full details. Basically, each network in a population of Artificial Neural Networks (like decision trees) is evaluated on a problem domain that in this case uses a stream of sensory inputs. The top performers in the population are allowed to breed and produce offspring (that inherit tendencies from both parents), and the poor performers are killed off or bred out of the population. Evolution continues until an acceptable solution is found. Once found, the final evolved ‘Network’ is extracted from the lab and placed in a lightweight real-world application. The technique can be used to develop very complex control solutions that would be almost impossible or too time-consuming to program normally, such as automated car driving, mechanical stability control, datacentre load balancing, etc.
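For anyone unfamiliar with the approach, one generation of that breed-and-cull cycle looks roughly like the sketch below. This is not the project’s actual code: `Candidate`, `EvolutionSketch`, the keep fraction and the mutation rate are made-up names and values for illustration, and a real network is far more than a flat weight array.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for an evolved network: a weight vector plus a score.
public class Candidate
{
    public double[] Weights;
    public double Score;
}

public static class EvolutionSketch
{
    // Build the next generation: keep the top performers, then refill the
    // population with offspring of randomly paired survivors.
    public static List<Candidate> NextGeneration(
        List<Candidate> population, double keepFraction, Random rand)
    {
        int keep = Math.Max(2, (int)(population.Count * keepFraction));
        List<Candidate> survivors = population
            .OrderByDescending(c => c.Score)
            .Take(keep)
            .ToList();

        var next = new List<Candidate>(survivors);
        while (next.Count < population.Count)
        {
            Candidate a = survivors[rand.Next(survivors.Count)];
            Candidate b = survivors[rand.Next(survivors.Count)];
            next.Add(Breed(a, b, rand));
        }
        return next;
    }

    // Uniform crossover: each weight comes from one parent or the other,
    // with a small (assumed) chance of a random mutation.
    private static Candidate Breed(Candidate a, Candidate b, Random rand)
    {
        var child = new double[a.Weights.Length];
        for (int i = 0; i < child.Length; i++)
        {
            child[i] = rand.NextDouble() < 0.5 ? a.Weights[i] : b.Weights[i];
            if (rand.NextDouble() < 0.01)
            {
                child[i] += rand.NextDouble() - 0.5; // illustrative mutation
            }
        }
        return new Candidate { Weights = child };
    }
}
```

In the real project, the expensive part is not this step but scoring every network against the dataset before it, which is what the benchmark further down measures.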

Anyway, the project has been a huge success so far and is producing amazing results. The only problem is the very slow performance once I move to larger datasets. I’m hoping it is just my code, so I would really appreciate some expert help.

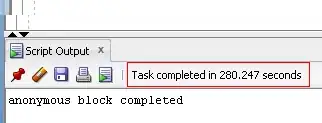

In this project, convergence to a solution close to the ideal can often take around 7 days of processing! Making a little tweak to a parameter and then waiting for the results is just too painful.

Basically, multiple parallel threads need to read sequential sections of a very large dataset (the data does not change once loaded). Each row holds around 300 to 1000 doubles, and there are anything upwards of 500k rows. As the dataset can exceed the default .NET object size limit of 2 GB, it can’t be stored in a normal 2D array. The simplest way round this was to use a generic List of one-dimensional arrays, one per row.
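To put rough numbers on that, here is a back-of-the-envelope sketch. It ignores array header overhead, and `DataSetSizing` and its members are just names I made up for illustration.

```csharp
using System;

public static class DataSetSizing
{
    // The 2 GB per-object ceiling mentioned above.
    public const long ObjectLimitBytes = 2L * 1024 * 1024 * 1024;

    // Payload size if every row were packed into one rectangular array.
    public static long SingleArrayBytes(long rows, long features)
    {
        return rows * features * sizeof(double);
    }

    // True when the whole dataset would fit under the per-object ceiling.
    public static bool FitsInOneArray(long rows, long features)
    {
        return SingleArrayBytes(rows, features) < ObjectLimitBytes;
    }

    // Payload size of a single row when each row is its own array.
    public static long PerRowBytes(long features)
    {
        return features * sizeof(double);
    }
}
```

With 500k rows, `SingleArrayBytes(500000, 300)` is 1,200,000,000 bytes, which still fits, but `SingleArrayBytes(500000, 1000)` is 4,000,000,000 bytes, which does not. Each individual row, at `PerRowBytes(1000)` = 8,000 bytes, is nowhere near the limit, which is why one array per row works.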

Parallel scalability seems to be a big limiting factor: running the code on a beast of a server with 32 Xeon cores, which normally eats big datasets for breakfast, does not yield much of a performance gain over a Core i3 desktop!

Performance gains quickly dwindle away as the number of cores increases.

From profiling the code (with my limited knowledge), I get the impression that there is a huge amount of contention when the dataset is read from multiple threads.

I’ve tried experimenting with different dataset implementations using jagged arrays and various concurrent collections, but to no avail.

I’ve knocked up a quick and dirty bit of benchmarking code that is similar to the core implementation of the original and still exhibits similar read performance and parallel scalability issues.

Any thoughts or suggestions would be much appreciated, as would confirmation that this is the best I’m going to get.

Many thanks

    using System;
    using System.Collections.Generic;
    using System.Diagnostics;
    using System.Threading.Tasks;

    // Benchmark script to time how long it takes to read the dataset per iteration
    namespace Benchmark_Simple
    {
        class Program
        {
            public static TrainingDataSet _DataSet;
            public static int Features = 100;        // Real test will require 300+
            public static int Rows = 200000;         // Real test will require 500K+
            public static int _PopulationSize = 500; // Real test will require 1000+
            public static int _Iterations = 10;
            public static List<NeuralNetwork> _NeuralNetworkPopulation = new List<NeuralNetwork>();

            static void Main()
            {
                Stopwatch _Stopwatch = new Stopwatch();

                // Create dataset
                Console.WriteLine("Creating Training DataSet");
                _DataSet = new TrainingDataSet(Features, Rows);
                Console.WriteLine("Finished Creating Training DataSet");

                // Create neural network population
                for (int i = 0; i < _PopulationSize; i++)
                {
                    _NeuralNetworkPopulation.Add(new NeuralNetwork());
                }

                // Main loop
                for (int i = 0; i < _Iterations; i++)
                {
                    _Stopwatch.Restart();
                    Parallel.ForEach(_NeuralNetworkPopulation, _Network => { EvaluateNetwork(_Network); });

                    //######## Removed for simplicity ##########
                    // Run evolutionary genetic algorithm on population, i.e. breed the strong, kill off the weak
                    //##########################################
                    // Repeat until an acceptable solution is found

                    // Stop the clock before printing, and report fractional seconds
                    // (ElapsedMilliseconds / 1000 is integer division and truncates)
                    _Stopwatch.Stop();
                    Console.WriteLine("Iteration time: {0:F2} s", _Stopwatch.Elapsed.TotalSeconds);
                }

                Console.ReadLine();
            }

            private static void EvaluateNetwork(NeuralNetwork Network)
            {
                // Evaluate network on 10% of the training data at a random starting point
                double Score = 0;
                Random Rand = new Random();
                int Count = (Rows / 100) * 10;
                int RandomStart = Rand.Next(0, Rows - Count);

                // The data must be read sequentially; read exactly Count rows
                for (int i = RandomStart; i < RandomStart + Count; i++)
                {
                    double[] NetworkInputArray = _DataSet.GetDataRow(i);

                    //####### Dummy evaluation - just give it something to do for the sake of it
                    double[] Temp = new double[NetworkInputArray.Length + 1];
                    for (int j = 0; j < NetworkInputArray.Length; j++)
                    {
                        Temp[j] = Math.Log(NetworkInputArray[j] * Rand.NextDouble());
                    }
                    Score += Rand.NextDouble();
                    //##################
                }

                Network.Score = Score;
            }

            public class TrainingDataSet
            {
                // Simple demo class of fake data for benchmarking
                private List<double[]> DataList = new List<double[]>();

                public TrainingDataSet(int Features, int Rows)
                {
                    Random Rand = new Random();
                    for (int i = 0; i < Rows; i++)
                    {
                        double[] NewRow = new double[Features];
                        for (int j = 0; j < Features; j++)
                        {
                            NewRow[j] = Rand.NextDouble();
                        }
                        DataList.Add(NewRow);
                    }
                }

                // Returns a reference to the stored row (no copy is made)
                public double[] GetDataRow(int Index)
                {
                    return DataList[Index];
                }
            }

            public class NeuralNetwork
            {
                // Simple class to represent a dummy neural network
                public double Score { get; set; }
            }
        }
    }